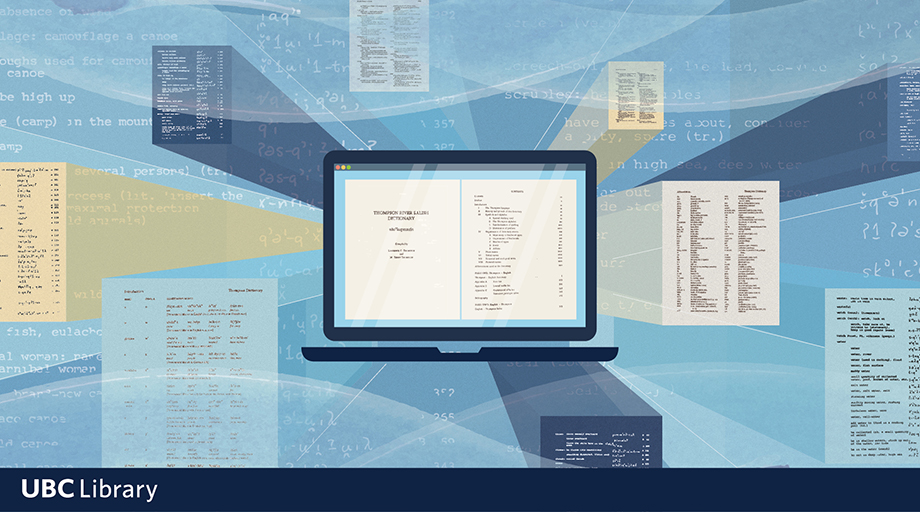

UBC Library is excited to announce the official opening of the Chung | Lind Gallery showcasing the Wallace B. Chung and Madeline H. Chung Collection and Phil Lind Klondike Gold Rush Collection. The new exhibition space in the Irving K. Barber Learning Centre on UBC’s Vancouver campus brings together two library collections of rare and culturally significant materials from Canada’s history.

The complementary collections explore the economic and social growth of early B.C. and the Yukon through exhibits that reveal stories about the Indigenous experience and the experience of Chinese immigrants to B.C. The gallery will provide faculty, students and the public with direct access to two significant Canadian cultural properties.

“We’re thankful to everyone who made the Chung | Lind Gallery a reality, after many years of planning and effort to create this remarkable space. Displayed together, these two outstanding collections will create a new focal point for historical research, teaching and learning at UBC, and in time become a magnet for scholars across Canada who wish to view these rare materials first-hand,” said university librarian Dr. Susan E. Parker.

“We are thrilled to have the opportunity to bring together these two avid and dedicated collectors—Dr. Chung and Mr. Lind—who share such a passion for history and material culture. And by putting their collections in dialogue with each other, we’ve discovered unexpected resonances. Now being displayed together publicly in the new Chung | Lind Gallery, we know the collections will continue to enrich and inform each other, providing new and exciting possibilities for learning and scholarship,” said Katherine Kalsbeek, head of rare books and special collections.

The Chung | Lind Gallery includes approximately 292 square metres of display space on the second floor of the Irving K. Barber Learning Centre. The space has been renovated to meet Canadian Conservation Institute and department of Canadian heritage guidelines and requirements for displaying, protecting and preserving heritage collections. Public and Page Two provided design support for the space.

The Chung | Lind Gallery. Credit: UBC Library Communications and Marketing

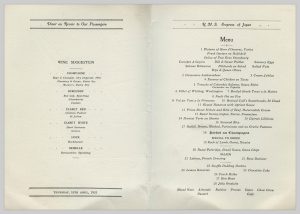

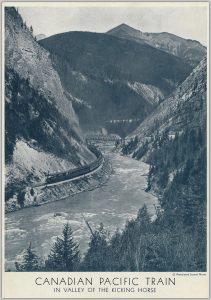

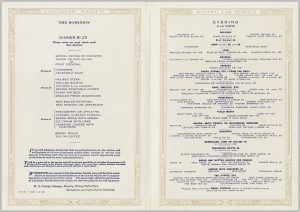

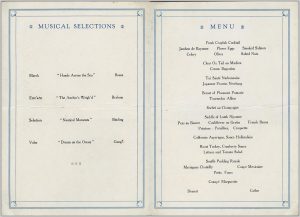

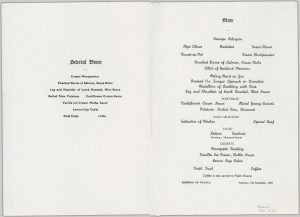

An achievement in visual storytelling, the Chung | Lind Gallery features both rare treasures from the collections and modern marvels. At the entrance, a large-scale model of Canadian Pacific Railway (CPR) company steamship, the Empress of Japan, restored by Dr. Chung, rides the waves over a virtual ocean designed by Dutch Igloo. Further into the gallery, a miniature log cabin comes to life with projected scenes from the Klondike era.

Funding for the gallery renovation was generously provided by Phil Lind, the UBC President’s Priority Fund, the London Drugs Foundation, donors to the library, and by the Canadian government through the department of Canadian heritage’s Canada Cultural Spaces Fund.

“Libraries play a pivotal role in preserving and sharing Canada’s history and heritage, providing invaluable access to historically significant materials. They are custodians of collective wisdom, where every book can transport us to a moment in time and every shelf offers boundless opportunities for discovery. Thanks to the newly opened Chung | Lind Gallery, now and in the future, you can learn more about important moments in our country’s history and take in rare collections being showcased. Congratulations to the University of British Columbia and everyone involved in turning this dream into reality!” said Pascale St‑Onge, minister of Canadian heritage.

The Chung | Lind Gallery. Credit: UBC Library Communications and Marketing

The Wallace B. Chung and Madeline H. Chung Collection, donated to UBC Library in 1999, contains more than 25,000 rare and unique documents, books, maps, posters, paintings, photographs, tableware and other artifacts that represent early B.C. history, immigration and settlement, particularly of Chinese people in North America and the CPR. Items from the Chung Collection were previously on display at RBSC. The new gallery space will bring this collection further into the public eye and provide new opportunities for community engagement.

The Chung Collection has been designated as a national treasure by the department of Canadian heritage’s Canadian Cultural Property Export Review Board (CCPERB), and has been named to the Canadian Commission for UNESCO’s Canada Memory of the World Register.

“This collection started with an interest in my neighbourhood. My family was confined to Chinatown, and I became curious about the history of the people that lived there. Many people do not know how difficult things once were for early Chinese migrants. While the world has changed, this was only possible through first understanding the past. Our future is tied to history; to move forward, we must forgive the ills of the past, but we should never forget,” said Dr. Wallace Chung.

The Phil Lind Klondike Gold Rush Collection is an unparalleled rare book and archival collection dating from the Klondike Gold Rush, donated to UBC Library in 2021 by UBC alumnus and Canadian telecommunications icon Philip B. Lind, CM. The gift included $2 million to support the collection and the gallery renovation.

The Chung | Lind Gallery. Credit: UBC Library Communications and Marketing

The Lind Collection includes books, maps, letters and photos collected by Lind in honour of his grandfather Johnny Lind, a trailblazer and prospector who operated and co-owned several claims on Klondike rivers and creeks. The Lind Collection has been designated as a cultural property of outstanding significance by the CCPERB.

“The Lind family is honoured to have the Phil Lind Klondike Collection housed at UBC Library. Our father’s collection stemmed from a fascination for his grandfather, John Grieve Lind, and grew into a passion that followed him through his life. He wanted to share this underrepresented history with the academic community and for future generations to enjoy. It is truly unique to share this space with The Chung Collection, bringing together two disparate histories of west coast Canada from the turn of the century that are integral to the formation of the notion of the west,” said Jed Lind.

The Chung | Lind Gallery was unveiled with a special ribbon cutting ceremony on Friday, April 19, attended by members of both the Chung and Lind families and UBC president and vice-chancellor Dr. Benoit-Antoine Bacon. The gallery will open to the public on May 1.

Explore photos of the Chung | Lind Gallery.

Media contacts

Erik Rolfsen

Media Relations Specialist

Tel: 604.822.2644

erik.rolfsen@ubc.ca

Anna Moorhouse

Manager, Communications & Marketing, UBC Library

Tel: 604.822.1548

anna.moorhouse@ubc.ca