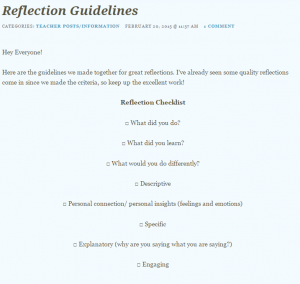

Throughout my practica, I had opportunities to both assess and evaluate student work. I experimented with several forms of ongoing assessment to check for student understanding. I also gave students opportunities to act on written feedback, and I made the assessment process more transparent by co-constructing and actively revising criteria with them.

During class time, I found physical strategies such as the “five finger test” and “thumbs up, thumbs to the side, or thumbs down” effective for rapidly gauging the overall level of comprehension in the class. These checks gave me immediate feedback about whether I needed to clarify something or could move on to asking specific questions about the concept or task to confirm understanding. Through this combined process, I came to realize that higher-order questions were the most effective way to check for understanding, but that I needed to prepare them ahead of time to best promote student thinking. I used these prepared higher-order questions with the whole class, with smaller groups, and sometimes as exit slips in students’ reflection journals (Wassermann, 1992).

There are several other strategies I would like to try in the future, such as “traffic lights.” I would also like to further explore gallery walks as a means of checking for understanding. I used gallery walks twice during my practica to get an overall sense of student understanding of several ideas at once. I found that the inherent anonymity of student responses both helped and hindered my awareness of their overall understanding. Some students were more willing to write a response when their names were not attached to it. However, the activity did not reveal whether any students were having trouble with the concept, how many were, or which ones. Ultimately, I liked that students could read their peers’ contributions to gauge their own understanding of the concept or topic. They could also learn from those contributions or question their validity.

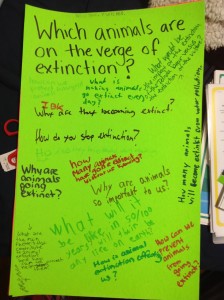

“I showed the class several examples of inquiry questions and asked them to tell me whether each was a ‘good’ or a ‘bad’ question (for the purpose of an inquiry project), and to explain why. We then came up with criteria for what makes a question ‘good’ or ‘bad’ for an inquiry project. The class was confident identifying good and bad questions and explaining their rationale; however, when I asked them to turn a bad question into a good one, they found this much more difficult. This may be an area I will have to work on more with them. Perhaps I could try a gallery walk exercise, where I post bad questions around the room and students write good questions on sticky notes to place underneath them.” December 4, 2014

When the students participated in the gallery walk the next class, they built on each other’s ideas and came up with many suggestions for improving the posted questions. They were able to take the “bad” (closed) questions and turn them into several “good” (open-ended) questions. After the gallery walk, each table group was assigned one of the posters and asked to discuss their peers’ contributions. The groups evaluated the contributions against our co-constructed criteria. They then chose the three best questions on their poster and explained their selections to the class. The activity generated a lot of discussion, and I was able to confirm that almost all of the students now knew the difference between “good” and “bad” questions. They could also actively generate good questions themselves.

Including the students in the criteria process was very effective, especially when the criteria were actively constructed through the examination of successful and unsuccessful exemplars. I found that the criteria the class proposed for “good” inquiry questions included all of the points I had wanted to include, as well as valid points I hadn’t yet considered. I repeated the process of co-constructing criteria with students on two other occasions with similar results, and I found that the process had a positive impact on the quality of the work students submitted.

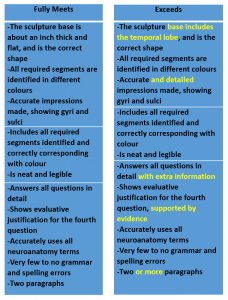

Similarly, I found that involving students in the criteria process in other ways also led to higher-quality submissions. In some projects, I pre-generated the criteria, discussed revisions with the class, and explicitly highlighted the differences between an assignment that meets expectations and one that exceeds them. In those cases, the majority of students aimed to exceed expectations (Student Work Example 1, Example 2).

Overall, the ongoing informal formative assessments helped me adapt my lessons based on student understanding. The transparency of creating or examining criteria with the students also led to much higher-quality assignment submissions. I believe that both practices enhance overall student learning and motivation. I plan to continue with these practices and to explore others in the future.