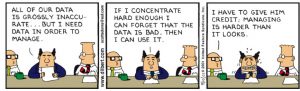

I approached the exercise with the mindset of being an impartial judge. I first noticed the lack of information behind the prosecution’s recommendation. Did the defendants have prior convictions? Was the recommendation influenced by the risk assessment? What was the algorithm behind it based on? On a side note, the use of colour was influential UX-wise; a lot of red/yellow was definitely eye-catching, and I was curious whether visuals play a role when data is presented in real life, but I digress.

After reviewing two cases, my plan was to base detention on the following criteria, in order of importance:

- Severity of the crime (assault justified detention vs. less “dangerous” crimes such as drug possession). My concern was public safety.

- Risk: Violence (I would detain red and yellow if paired with another violence indicator)

- Risk: Flight, if red and paired with “dangerous” crime

- Defendant’s comment and their age. I wanted to hear their voice, and their age could possibly provide a clue about priors when paired with the type of crime. For example, a robbery charge against someone over 30 might indicate that it is not their first.

- Prosecution’s comment.

- Risk: Commit a crime

I recognized my own biases: gender and race. My studies in race inequality and systemic discrimination might lead me to be more lenient with people of colour and women. I also realized that my perceptions of “dangerous” were subjective, as were the indicators that I was using and how I was pairing them. With all this in mind, I tried to be as fair as possible and used this method for the next 5 cases.

This made me uneasy: without an actual points value I was pretty much just winging it, and I felt more unsure as I went along. I questioned whether I was trying to avoid detaining and/or deliberately opposing the prosecution’s recommendation, and whether I should reassess my method. Then I got hit with the news clipping and this changed everything. Suddenly my release “mistakes” began influencing me and I became less conservative with the DETAIN button; Risk of Flight and the prosecution’s recommendation moved to the top of my list. It reminded me of the Crime Machine podcast, as it was no longer about the cases themselves, but about MY Fear/Jail Capacity numbers, my reputation and the burden on the public.

For the last 10 defendants I shifted again and relied almost solely on the prosecution’s recommendation and the assessment, despite my instincts. I noticed that my mindset had changed: I found comfort in relinquishing my responsibilities and being backed by the data, because the weight of the implications and outcomes was no longer just on my shoulders. What struck me was how easily I converted, and how I was able to talk myself into it and justify it as “well, they must know better”.

After the exercise, I felt unsettled by the decisions I had made and the consequences they might have had if it had not been a simulation. The two cases that stuck with me the most were a mother arrested for drug possession and a woman arrested for reckless driving, both of whom I released mainly based on the prosecution’s recommendation and the risk assessment. Did I act on misinformation based on more misinformation? Were my biases the same ones that were used to create the assessment and that also influenced the prosecution? Additionally, my definition of “dangerous” to the public no longer made sense. Reckless driving was definitely dangerous for the public, and I can’t bear to overthink the drug possession arrest of a mother.

Dr. Cathy O’Neil defined “Weapons of Math Destruction” as having the following properties:

- Widespread and important

- Mysterious in their scoring mechanism

- Destructive

She noted that recidivism algorithms are limited to particular types of data. For a real bail hearing risk assessment, the algorithm might only focus on a person’s record of arrests and convictions and their responses to a questionnaire about crime in their neighborhood and/or in their family; she called these “proxies for race and class”. Risk assessment scores are then used by judges to determine bail, sentences, parole, etc. O’Neil believes that these scores should be used to help defendants, not against them.

To challenge this type of assessment, ProPublica followed up on over 7,000 people arrested in Broward County, Florida, in 2013 and 2014 to see how many were charged with new crimes over the next two years, compared with what their COMPAS risk assessments had predicted. They found that the assessments were unreliable: only 20 percent of the people predicted to commit violent crimes went on to do so. They also showed that these same risk assessments falsely flagged black defendants as future criminals at almost twice the rate of white defendants.

This was definitely a startling task. In 15 minutes, my idealistic convictions succumbed to the pressures of getting those cases out with infrastructure approval and a complete disregard for the impact on defendants and their dependants. And all of this was based on flawed and concealed information. The expression that Amanda Levendowski used in the Enron podcast to describe biases that are encoded now echoes in my mind: “Garbage in, garbage out.”

References:

McRaney, D. (n.d.). Machine Bias (rebroadcast). In You Are Not so Smart. Retrieved from https://soundcloud.com/youarenotsosmart/140-machine-bias-rebroadcast

O’Neil, C. (2017, July 16). How can we stop algorithms telling lies? The Observer. Retrieved from https://www.theguardian.com/technology/2017/jul/16/how-can-we-stop-algorithms-telling-lies

Garbage in, garbage out (computer science). (2021, October 7). Wikipedia. https://en.wikipedia.org/wiki/Garbage_in,_garbage_out

Angwin, J., Larson, J., Mattu, S., & Kirchner, L. (2016, May 23). Machine Bias. ProPublica. https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

Adams, S. (2001). ‘Bad Data’. In United Feature Syndicate (Ed.), www.dilbert.com