You can view my final project here:

Author Archives: brian leavitt

Task 12: Speculative Futures

Speculative Future – Health Tracking Smart Devices Unleashed

What if smart devices (watches, phones, rings, glasses, eye-wear, clothes, etc.) could, with claimed 100% accuracy, diagnose all aspects of our health, and provide that information to those that need it? The following are two speculative narratives examining a possible future in which that is a reality.

2030 – Jane

Jane is 32 and in perfect health according to AVA, her Advanced Virtual Assistant, except that this morning while gently waking her up it notified her that she has contracted a slight cold. AVA lets her know that it is nothing serious because the predictive health module only predicts mild symptoms including a runny nose, itchy eyes, and a mild headache, which will kick in this morning and last approximately three days. As Jane is subscribed to premium AVA service, AVA has helpfully ordered some extra tissues, cold medicine, and scheduled chicken soup delivery for lunch from her favorite local soup restaurant. Jane asks AVA to let her boss know that she will be away sick for the next few days, and AVA replies, “Don’t worry Jane, I’ve already taken care of that. I automatically notified the AVA-Net of your illness, and your employer’s AVA was informed as well. They will expect you back at work next Tuesday after you are no longer contagious. The AVA-Net also contacted your friends that you had dinner with last night to notify them that they may have been exposed, and that their AVAs should monitor for symptoms. You go ahead and relax today, although regular exercise is still recommended, which is why I woke you up.”

“Thanks AVA,” Jane replies, “what would I do without you?”

After a moderate exercise recommended and monitored by AVA with a heart-rate display projected on her glasses, Jane takes a quick shower and sits down for breakfast. AVA waits for Jane to finish making her coffee, and then lets her know that there are three waiting voice messages. The first is from Jane’s friend Tim. “Play message, please, AVA.” The message begins playing through the apartment speakers in Tim’s voice, “Hey Jane, just got notified you exposed me to a cold! Thanks a lot! Ha ha! Kidding, hope you feel better. Your AVA let mine know that you won’t be able to make my birthday party this weekend. What a shame! Looking forward to seeing you when you are better.”

“The second message is from your sister, Julie, would you like to play it now?” AVA asks. “No, I can’t deal with her right now.”

“The last message is from your boss, Emily, would you like to play it now?” AVA asks. “Yes, please, thanks AVA.”

“Hi Jane, I got the report about your cold this morning, and just saw a follow-up now from your AVA that says its algorithm updated after your workout, and now predicts the symptoms won’t kick in until tonight. Looks like you are well enough to do some work today, so I sent a few reports that need your review. Please get those back to me by the end of the day.”

Jane stops and thinks about how she is feeling. “I feel pretty bad, really tired and sore, my nose is running, and my head is pounding. You know best, though, AVA, so I guess I am well enough to do some work.”

Jane’s tablet lights up, and AVA’s voice fills the room: “I’ve loaded your work files onto your tablet, which is the closest device to your current location.”

“Thanks AVA, seriously, what would I do without you?”

2030 – Julie

“Hi Jane, it’s Julie, your sister. I know you’ve gotten all my messages, my AVA told me they have been received. I’m sorry I’m such a disappointment to you and Mom, but I’m still your sister. I just need a little help with my rent this month. It’s the last time. I know I said that last month, but I mean it this time. My benefits payment is just a little late again, and I’ve finally got an AVA-approved job interview lined up for tomorrow. I think I’m going to get it, so I won’t need you or Mom to help me out anymore. Anyway, sorry to keep interrupting your perfect life. Bye. Your only sister.”

“Your watch has detected that your heart-rate has spiked, Julie, I suggest you close your eyes and perform your breathing exercises.”

“Shut up, AVA! I’m FINE.”

“You’re not fine, your smart-clothes have identified that you are experiencing elevated stress levels, and with your compromised health, this is inadvisable. I have notified your newly appointed Government Health Support Worker that your stress and hormonal levels have passed the mandated recommended levels.”

“AVA NO! Don’t do that! You’ll ruin everything! I’m fine! Please!”

“I’m sorry, Julie, I am required to report ill-health, and it has already been done. Please try to relax. Your glasses have detected that you are crying. I now recommend you take a Vitamin D supplement, and increase your selective serotonin re-uptake inhibitor dosage. I have made the recommended changes and dispensed the new dosage. Please take the medication now. Incoming message from Joseph at the Ministry of Health Support and Monitoring.”

“Don’t answer, AVA, I can’t ta-”

“Hi Julie, this is Joseph. I’m your newly appointed Government Health Support Worker. The AVA-Net just notified me that your health has taken a turn for the worse this morning. I think it’s for the best that we postpone that job interview you have scheduled for tomorrow. With your current state, I don’t think it wise to introduce the additional stress of a job interview. It also looks like AVA no longer recommends this job as appropriate for you, based on this spike of stress today.”

“I’m fine, really, it’s just my family, I get a little worked up when I talk to th-”

“Sorry, Julie, I don’t have a lot of time this morning, I have several scheduled appointments. We can talk at our next scheduled meeting – looks like AVA has it down for three weeks from now. I also see AVA has noted that you haven’t been taking your medication on schedule. I can’t stress how important that is. Unless you follow the AVA-recommended health plan, you are really limiting the available jobs you can apply for based on your AVA-calculated health profile. As you know, failure to follow the health plan also results in delays to your benefit payments. I can see that AVA recommended an increased dosage for you today. I think that’s a good idea. It looks like you are now at the maximum your AVA can prescribe, but I’m going to update your AVA’s programming to allow it to go even higher if it thinks it is warranted. I’ve got to run, take care Julie, and try to relax a bit.”

“Julie, this is your daily reminder that your rent is now 17 days overdue. You will be evicted if you do not pay your rent in four days.”

“Thanks AVA.”

Linking Assignment – Task 10: Attention Economy (Rebecca)

Here is Rebecca’s entry for Task 10: Attention Economy:

Rebecca Hydamacka – TASK 10: ATTENTION ECONOMY

And here is my entry:

Task 10: Attention Economy

Task 10! What a fun experience that everyone enjoyed. I think everyone’s posts about this task likely share several common keywords: “frustration,” “annoying,” and “confusion”. Is that something that an educational task should strive for? In this one case, yes. Rebecca and I shared common experiences of frustration and confusion, and we noticed many of the same things about the design, but we had a key difference: I attempted and completed the ‘game’ in one relatively brief try, and did not return for more punishment, but Rebecca came and went and ultimately attempted the ‘game’ three times. Once was more than enough for me!

It is interesting how we shared similar experiences and made many of the same observations about the ‘game’, but our reactions in the moment while completing it were clearly very different. I have some experience with the principles of good user interface (UI) design, and so found this task both enlightening about some UI design choices and principles and deeply offensive to my idea of the way websites and apps ‘should’ be designed. I get from Rebecca’s description of her difficulty getting past several parts of the ‘game’ that she perhaps didn’t grasp in the moment why it was doing what it was doing. While I was playing, I typically understood the choices that were made, and that they were always the opposite of what a good non-dark design should be, and I think that helped me to understand what needed to be done to progress. This is a case where a specific literacy really helps – understanding what is usually done, and why, helped me speed through the process. It didn’t reduce my frustration though! Rebecca was probably even more frustrated, because it took her longer.

I think this task, and our two different experiences of it, show how important literacy of a specific technology or technology design is. While there is no real negative consequence to taking an hour to get through this game versus taking 5 minutes, if this were a real process that people had to get through, it would have a real, measurable impact on people. Imagine, for example, if many of these dark design choices were used in filling out a required form to get a driver’s license or to sign up for health insurance. Making it easier for people would of course be the most ethical thing to do, but even when it is a more ethical design, literacy of the technology design is going to make it easier for some people to get through the process, or get through it quicker, which could have a big impact on a societal level. The other thing to consider is that dark designs are often used even for these important and necessary processes in life, even though it is unethical. Then the literacy becomes even more important: if people have to come back three times to get through filling out a form or signing up for a service, many are not going to complete it at all.

Linking Assignment – Task 9: Network Assignment Using Golden Record Curation Quiz Data (Alanna)

Here is Alanna’s entry for Task 9: Network Assignment Using Golden Record Curation Quiz Data:

Alanna Carmichael – Task 9 – Golden Record Network Assignment

And here is my entry:

Task 9: Network Assignment Using Golden Record Curation Quiz Data

In the Task 9 Network Assignment, Alanna and I were group ‘friends’. The program algorithm had decided we deserved to be lumped together based on the song choices we had each made. We had some similar thoughts about this:

- In our group we all shared three selections, and several other selections were shared between the rest of us in pairs or sets of three.

- Most of each of our choices were not shared between all the members of our group.

- Each of us had at least one selection not shared by anyone else in the group.

From there we took different tactics to analyze the results of the class. I examined the linkages to the one song I had chosen that was not linked to anyone else in our group, and Alanna looked at the popularity of each track among the overall class. Alanna identified interesting patterns of potential cultural bias among the song selections, and I looked at how most people in the class are linked to each other through just one additional connection.

We also both identified the issue with the small sample size of the class when attempting to draw conclusions, and the lack of context that the data itself provides. We both identified that qualitative data is necessary to really make any judgement about why people made their choices and what those choices say about them. Without knowing the reasons behind the selections, any algorithmic grouping is problematic, flawed, meaningless, and arbitrary. Reading through our individual curation processes confirms this – Alanna and I had very different methodologies for choosing the songs that we did, and because of that, grouping us together based on the choices we made ultimately makes no sense if the grouping is meant to convey some sort of similarity between us beyond the superficial of the selected songs themselves.

The fact that we are grouped together by this algorithm shows the power and influence of, as well as the problems with, opaque algorithms. It also highlights the problems with the prevalence of social network algorithms. Modern social networks group us together like this all the time, and the assumption is that it is meaningful, and that people in those groups are similar in interests, taste, personality, etc. This may not be the case at all, depending on the data used. It also shows the need to understand the algorithm and its purpose. Part of this may just be an incorrect assumption being made based on incomplete or incorrect knowledge of the algorithm. For example, that the algorithm in this task is meant to group people together to imply that we should be friends is an assumption I am making; the intention of the algorithm might be to simply form the largest groups possible based on shared song selections, without putting people in more than one group. It may not be intended to say anything about the people in the groups or any similarities between them that may or may not exist. As a text technology, algorithms are not well understood or well used – we are assigning meanings to the semiotics that they produce without fully understanding the intention and meanings that produce them.
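To make that last point a little more concrete, here is a minimal sketch of what a grouping rule based only on shared song selections could look like. I do not know how the program used in this task actually works, so everything here is an assumption for illustration: the names, the track numbers, the `threshold` parameter, and the rule itself (link two people when they share enough tracks, then treat everyone who is linked, directly or through others, as one group).

```python
from itertools import combinations

# Hypothetical selections: person -> set of chosen track numbers.
# This is made-up data, not the real class quiz data.
selections = {
    "A": {1, 2, 3, 4},
    "B": {1, 2, 3, 5},
    "C": {6, 7, 8, 9},
}


def shared_counts(selections):
    """Count how many tracks each pair of people has in common."""
    return {
        (a, b): len(selections[a] & selections[b])
        for a, b in combinations(sorted(selections), 2)
    }


def group_by_overlap(selections, threshold):
    """Group people linked by pairs sharing at least `threshold` tracks.

    Assumed rule for illustration only: each person lands in exactly one
    group, and a group is everyone connected through qualifying pairs.
    """
    groups = {name: {name} for name in selections}
    for (a, b), count in shared_counts(selections).items():
        if count >= threshold and groups[a] is not groups[b]:
            merged = groups[a] | groups[b]
            for name in merged:
                groups[name] = merged
    unique = []
    for group in groups.values():
        if group not in unique:
            unique.append(group)
    return sorted(sorted(group) for group in unique)


if __name__ == "__main__":
    print(shared_counts(selections))
    print(group_by_overlap(selections, threshold=3))
```

Notice that nothing in this sketch knows why anyone picked a track. The only input is the overlap count, which is exactly why reading 'friendship' or similarity into the output is an interpretation added by the reader and not something the data supports.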

Linking Assignment – Task 8: Golden Record Curation (Katlyn)

Here is Katlyn’s entry for Task 8: Golden Record Curation:

Katlyn Paslawski – Golden Record Curation

And here is my entry:

Task 8: Golden Record Curation

Task 8, which consisted of curating the Golden Record down to 10 tracks, generated a wide variety of different methodologies. Some people, such as myself, went the route of choosing songs that they personally enjoyed as pieces of music, with little to no other aspects taken into account. Of course, that wasn’t the entirety of my process, because I enjoyed (in a personal musical taste sense) very few of the songs (3 to be exact), and so the rest had to be chosen in a different way. My methodology for the rest ended up being a token effort at geographic and racial diversity.

Contrast this with Katlyn’s methodology, which was from the start a very detailed and procedural process meant to identify “songs that reflect humans values and diversity, incorporating voices from both men and women and from places around the world … a balance of tracks that feature human voices and musical instruments.” Where I listened to the songs once, and not always even completely through each song, and simply decided if I liked each one or not, Katlyn listened to each multiple times to analyze the specific audio makeup of each track. I didn’t even consider the gender of the singers; Katlyn made that one of her core principles. After my initial three songs, we did share somewhat similar goals, but Katlyn was much more specific and analytic in her process. This is probably because this was her initial goal, whereas it was an afterthought for me. Katlyn even went to the effort of mapping the origin of each song, whereas I did it completely in my head in a much less serious fashion.

The detail Katlyn put into the analysis of each song is represented by the justifications she provided for each track. I did not provide any individual analysis of the songs, simply listing them. Despite these differences in process, we identified a similar issue with the original Golden Record curation; namely, an American bias (understandable as it was an American project). I also highlighted a classical bias, and Katlyn highlighted the male bias as noted above.

It is interesting how curation is such a subjective, value-dependent process. I was much more concerned about a lack of modern music, and the emphasis put on classical European ‘mathematically perfect’ music, whereas Katlyn focused on gender and on balancing instrumental and vocal music. These differences, both in process and in the results of the process, highlight how curation is a type of textual technology – it communicates meanings and values in a very concrete way. It also highlights how the possession of different literacies will influence and change the process and result. Someone who is musically trained, for example, is going to analyze the tracks in a different way than someone who does not possess that type of literacy.

Linking Assignment – Task 7: Mode Bending (Jamie)

Here is Jamie’s entry for Task 7: Mode Bending:

Jamie Ashton – Task 7: Mode Bending

And here is my entry:

Task 7: Mode-bending

Jamie and I both decided to go with a strictly audio reinterpretation of our Task 1: What’s in the Bag posts. Many others went with more multimodal reinterpretations, but Jamie and I both stuck to audio, which presented an interesting challenge. Some others also created audio, but incorporated much more speech into it, had written accompaniments, annotations, or video to go with it. What Jamie and I created was almost entirely non-verbal sound. Reading about Jamie’s experience reflected a lot of what I discovered while creating my task, namely that it is strangely difficult to create something that is descriptive without the use of what we normally consider ‘text’ technologies. Without written words, spoken words, or visual representations, it can be challenging to think of how to convey the meaning, purpose, or description of something. Non-verbal sound somehow seems less ‘textual’.

At the end of the task, we had both created something that I believe is ‘textual’, and conveys (in very different ways) the content of our bags and a window into something about each of us.

While we both took fairly similar tactics in utilizing the sounds that the objects in our bags can make, or make in use, we differed in the overall production of the sound. I created a very random and non-uniform series of sounds as I moved from one object in the bag to the next, never repeating an object. The end result was a sort of journey through the objects in the bag, with each sound being a singular representation of each object, and the recording as a whole sounding like someone removing each object from the bag one at a time and examining it. Jamie, on the other hand, created something that can be described as musical. I thought this was a really fascinating way to do it, creating a soundscape of her bag! The change in the method of producing the sounds and the organization of them created a really interestingly different experience for the two recordings. Mine comes across as somewhat like a linear story, whereas Jamie created an aural experience. When I listen to the two recordings, they come across very differently. Mine is telling you to focus on each object and visualize them and their meaning one at a time, whereas Jamie’s is asking you to experience the bag in its totality. It doesn’t ask you to think about each object as much as mine does, but it conveys more of an overall feeling, meaning, and experience of the bag.

Linking Assignment – Task 5: Twine Task (Tanya)

Here is Tanya’s entry for Task 5: Twine Task:

Tanya Weder – Twine Task

And here is my entry:

Task 5: Twine task

Tanya and I took very different routes to complete our Twine Tasks. Tanya had used Twine before; I was a beginner. Tanya had a specific learning objective; I went in with no defined purpose. Tanya wanted to create something that could be useful outside of this course and applied to her work; I went in to create something solely for this task. Tanya planned out her Twine game/story on paper before creating the Twine, whereas I created in Twine as I went along. Tanya used if/then statements which created a linear appearance to the structure of the Twine, whereas I used linking back and forth, which created a Twine that appears much more complex or ‘messy’. Tanya also incorporated different fonts, text colour, and multiple images into her Twine, whereas I only incorporated a few images, and they were not as integrated with the text; more text-then-image versus Tanya’s use of text-surrounding-image.

Despite seeming to be very different, and certainly taking very different approaches, we both ended up with ‘choose-your-own-adventure’ style stories that play in very similar fashions. How can this be? Even our Twine programming flowcharts are visually dramatically different. Tanya was able to use more advanced programming, which created a more visually appealing and simple-looking design to her Twine, but the end result of the game is similar. If I were to create another Twine, I would try to do it her way! The interconnections of my Twine layout proved very difficult to manage when I was creating the game. Two very different approaches ended up creating two games that share fundamental similarities, and I believe that this shows that the semiotic language of this text technology clearly impacts and affects what is created when communicating using it. This is a real ‘ah-ha!’ moment! The text technology we use, or choose to use, very clearly alters the communication and language we create with it. Some text technologies, like Twine, may end up creating content that is very similar in many ways, although not all, as you can still use Twine to create something very different from what we came up with, but it might require intentional thought to do so. Some other text technologies, like word processing, may have less dramatic and obvious impacts on the output – but they still exist.

Even though the ‘choose-your-own-adventure’ nature of our two Twine games is fundamentally similar, the content is of course still very different. Perhaps this is important to note. The text technology may have more impact on the form and structure of the content than on the content itself, although the content can’t be completely isolated from the form and structure, as one informs the other.

Linking Assignment – Task 3: Voice to Text (Valerie)

Here is Valerie’s entry for Task 3: Voice to Text:

Valerie Ireland – Task Three – Voice to Text

And here is my entry:

Task 3: Voice to Text

Both Valerie and I generated a text output that is remarkably similar from a distance. We both largely created something that is a massive wall of text, with little or no punctuation. While Valerie used Microsoft Word, I used the iPhone Notes application. We encountered similar issues with incorrect word choices made by the dictation engine and frequent grammar mistakes.

What I really enjoy about Valerie’s posting for Task 3 is the discussion about context- and culture-specific limitations of the speech-to-text applications. Whereas I focused more on the content of the mistakes being made by the software, Valerie discusses the impact of the mistakes. When trying to use dictation for something that is clearly outside the cultural context and understandings of the designers of the software, it is going to make many more mistakes than it would for someone from the same culture. For someone trying to discuss culturally sensitive or important topics, as Valerie mentions, this can be both a frustrating and painful experience. It can act to reinforce otherness and can imply that other cultures’ words and language are not important or valued. Even worse is Valerie’s experience of a word being translated into an obscenity by the software. These are not aspects that I considered in my Task 3, probably because I am from a similar culture to the creators of the software, and was discussing something that falls within that culture.

Software like this states that it works for ‘English’, but can it really be said to work for an entire language like English if it doesn’t functionally work for all types of English, for all speakers of English? It implies a ‘correct’ English, which is deeply problematic. It privileges a very specific cultural literacy, and excludes, sometimes violently, any others.

Task 11: Algorithms of Predictive Text

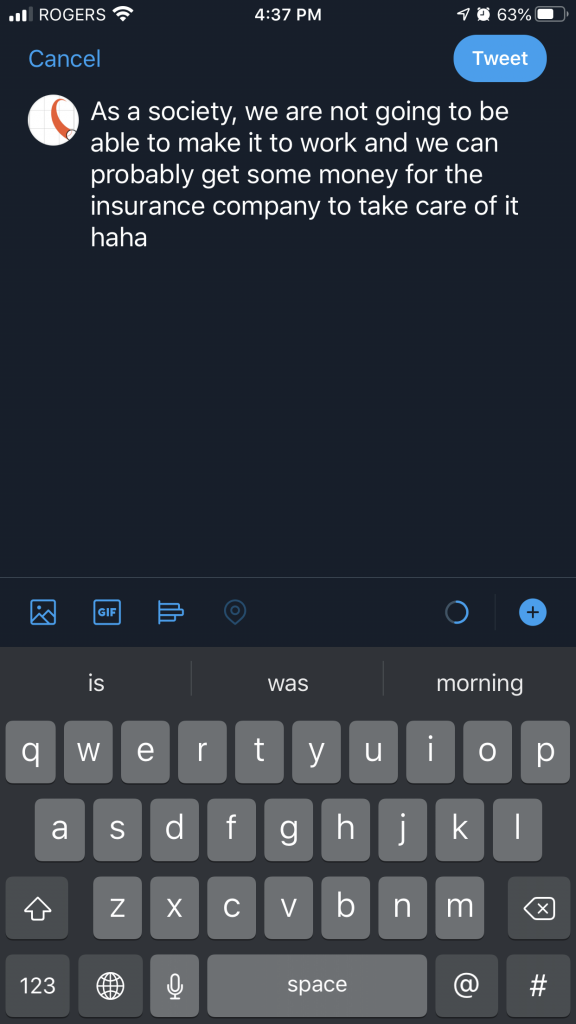

For this task, we are to take some sentence prompts, and use predictive text algorithms to generate a microblog. I used Twitter on an iPhone to generate my microblogs, with the beginning prompt of “As a society, we are…”. I did it several times, selecting a few different options from the algorithmically generated text:

Here are a few others:

As a society, we are on our own with the same amount of time we have to do our work for our family. I think I have a good idea about the insurance company.

As a society, we are not the same but I am so sorry for this issue but it was not going on at all until 5pm. I don’t want to be with you tonight because you are so busy with your mom.

As a society, we are going through the same thing as a family in the past. We can probably get some drinks or something to do next weekend. We have been in our past for the last two months.

The sentences were interesting, silly, ridiculous, and often confusing and nonsensical. At times they also generated hints of mirroring what people might be blogging about very recently. Is this just chance, or is this because the algorithm has been adjusting to what people are posting and blogging about over the last few months? Take the first one I posted, “we are not going to be able to make it to work and we can probably get some money for the insurance company”. In a global pandemic, this kind of sentence, or the two separate thoughts within the sentence, very likely could have been typed by many people. The second one, “we are on our own … we have to do our work for our family” could be seen as echoing similar pandemic related thoughts and concerns. The third one, “I am so sorry for this issue” could be seen as related to the recent Black Lives Matter and Me Too movements, with many people expressing this kind of sentiment. The fourth one, “we are going through the same thing as a family in the past,” could also be pandemic related, with people expressing similarity to this issue with previous pandemics or hardships.

On the other hand, I could be unintentionally putting together sentences that reflect current events that would not be auto-generated by the algorithm, simply by putting together the words offered by the algorithm in unique configurations. How much of these sentences are thoughts I intend to, or want to, express, and how much is shaped by what the algorithm presents? I suspect most people do not write sentences solely from predictive text (I certainly don’t), but I do often use it to write the word I intended to write if it is suggested. Do I ever let it write a word that has a similar meaning or usage that I personally wouldn’t have chosen? I’m not sure – but it certainly seems possible, in which case the algorithm is actually subtly changing my voice through this text medium. What does that do on a societal level?

I would say that none of these sound particularly like me, although one thing that stood out was the “haha”. I do most often type “haha” instead of “lol” or other expressions when I think something is funny. Is this personalized for me, or is it just a coincidence? I also wonder if this is an iPhone specific predictive algorithm, or is this a Twitter algorithm? Is it specific to my tendencies at all, or my local region, or country, or is it worldwide? None of the content of the messages seem specific to me, or seem similar to things I have written, but I also do not regularly post on Twitter, so perhaps it just has a limited amount of data to use to give suggestions to me.

It also doesn’t appear to be academic or professional writing; it gives the sense of being more casual in its influence. It makes me suspect that the algorithm learns from other microblog posts, but it is hard to be certain. The fact that it doesn’t seem to capture an individual’s ‘voice’ when writing is interesting. Does this make it get used less than it would if it were more personalized? I suspect yes, but it also raises the question of whether it ends up acting on a large scale to homogenize our writing and self-expression. Does it also then influence our writing outside of this medium? Do the types of words and phrases that get commonly suggested to us end up becoming the way we speak and write even when not using predictive text algorithms? What effects would that have? The predictive text that I saw seemed to be very standard ‘semi-professional’ English, meaning no slang, few contractions, etc. For cultures that have other styles of writing, does this work to create bias against their communication style, or work to diminish or eliminate their semiotic style? Who chooses what text is included in the predictive suggestions, and where do they come from? What are the decisions behind what gets included, and why? If these algorithms become more widespread in arenas beyond microblogs and text messages, will they begin to affect how we write and think in those spaces? For example, if predictive text were to become widespread in educational contexts, especially in cases where children are learning how to write, there may be an outsize influence on how they shape their writing and thinking. On a societal scale, predictive text could end up altering how an entire generation thinks and expresses itself, and we have no idea what outcomes this might cause.
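As a way of thinking about where suggestions could come from, here is a minimal sketch of one very simple kind of predictive text: count which word most often follows each word in a set of example posts, then suggest the most frequent followers. This is only an illustration under my own assumptions. I do not know what the iPhone keyboard or Twitter actually use (it is almost certainly far more sophisticated), and the example posts and function names below are made up.

```python
from collections import Counter, defaultdict


def train_bigrams(posts):
    """Count, for each word, which words follow it in the example posts."""
    following = defaultdict(Counter)
    for post in posts:
        words = post.lower().split()
        for current, nxt in zip(words, words[1:]):
            following[current][nxt] += 1
    return following


def suggest(following, word, k=3):
    """Return up to k of the most frequent next words seen after `word`."""
    counts = following.get(word.lower(), Counter())
    return [candidate for candidate, _ in counts.most_common(k)]


# Made-up training posts, purely for illustration.
example_posts = [
    "we are going to work",
    "we are going home",
    "we are not going",
]

if __name__ == "__main__":
    model = train_bigrams(example_posts)
    print(suggest(model, "are", k=2))
```

Even in a toy like this, the suggestions can only ever echo the text the model was trained on. Whoever chooses the training posts is, in effect, choosing the voice that gets offered back to every writer.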

I think predictive text can be very useful, and it can speed up our communication and reduce frustration when typing on small keyboards like on smartphones, but the potential subtle influencing of our thinking and writing needs to be considered. Very much like the discussions of other big data algorithms in the readings and podcasts this week, by Dr. O’Neil and Dr. Vallor and others, I think it is very important for algorithms to be open-sourced and made much more transparent. I had never before stopped to think about why the predictive text suggests certain words to me on my iPhone, or what that might do to my thinking. The only way to know if there is unwanted bias or unwanted influence occurring because of algorithms is to know how and why they operate the way that they do.

Task 10: Attention Economy

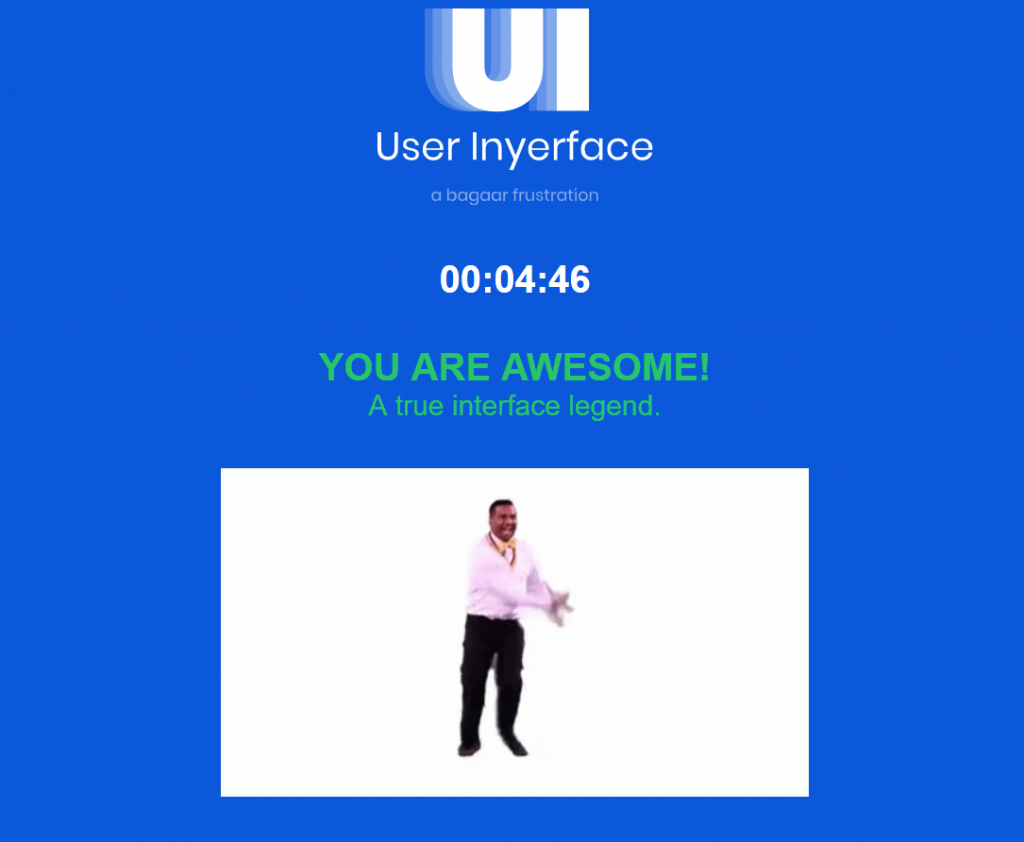

This week’s task was to complete the online “game” User Inyerface by the Belgian interaction design studio Bagaar. Here is a screenshot from after I “won” the game:

The “game” is an intentional exercise in frustration. It demonstrates several interesting things that we take for granted and do not usually notice. The first is that there exists a commonly accepted semiotic framework for user interface design in technology, and when something deviates from this design, frustration and confusion follow. An example of this is that the cancel and proceed buttons in this game often have their colors switched from what one would normally expect – the selected or proceed button would be white instead of highlighted in a different color, and the un-selected or cancel button would be highlighted. This is the opposite of what we usually expect and are accustomed to, and it led to me hitting the wrong button several times. It took a conscious effort to be aware of each way that this game did not follow design conventions to be able to successfully move through it, and each new way the game diverged from my expectations required further attention and slowed my progress.

The second interesting idea that this game gets across is that the common semiotic design language that is used across modern technology is designed specifically to make interaction quick and easy. This may seem obvious, but the reasons behind it may not be. Beyond a simple desire to make the ‘best’ interface possible, it becomes clear playing this game that a good interface is necessary to maintain user attention. When presented with a bad interface, meaning one that does not conform to our expectations, we quickly become frustrated and lose interest. If I had not been required to complete this game for this assignment, I would have quit very quickly. An example of making an interface quick and easy to use is by auto-filling text fields. Modern websites often do this by using cookies, or browsers have your information stored. This way you do not have to type in the fields each time. Companies are motivated to do this, because every little thing that increases frustration or causes us to lose interest is one less potential sale or data input for advertising.

The third interesting principle of the game is how design can persuade users to act in a certain way. By utilizing our common design language, you can both trick people into clicking things they didn’t intend to, and you can convince them that one option is preferable to others by designing it in a way that users associate with the right choice. An example of tricking users into clicking things is on the initial start screen where the big green button that you assume is to start the game actually says “No” and does not proceed. A big green button is usually associated with proceeding or starting something, and users are likely to assume its function without reading the text on it or investigating it more closely. A simple way of persuading users is by highlighting a choice with a color associated with a positive choice, or by simply making the choice you want them to select bold or in a larger font. We are trained through experience and repetition to associate the right choice with the one that appears the most appealing or stands out. Unless you are paying close attention, you can be easily persuaded to unconsciously choose a specific option.

The fourth interesting thing I noticed was how, through pop-up messages and count-down timers, the game forces you to remain focused and it retains your attention. Websites often take this tactic when the system notices you haven’t interacted with it in a set amount of time, and by giving you a message that states you have x amount of time or you will lose your place, your reserved item, the information you have entered, or you will have to start all over, it convinces you that you need to continue interacting with it and refocus on it. There is usually no good reason why it will reset everything or cause you to lose your place – this is a programmed behavior.

The game was incredibly frustrating, and I will never play it again, but it was useful in illuminating many of the ways we are manipulated and persuaded, often without our noticing, to keep our attention, convince us to make a purchase, or provide additional information.