It was Nobel laureate Herbert A. Simon who first articulated the concept of the Attention Economy when he proclaimed that “a wealth of information creates a poverty of attention”. This topic reminded me of a poem I came across in another ETEC module that so aptly illustrated how our attention is being diverted to activities that change the very behavior we use to define human existence.

Our daily lives are now so intertwined with the devices we use that we spend, on average, a third of our waking hours engaged with mobile technology alone. With millions of apps out there, it is not surprising that so many different techniques have been developed to fight for our attention. What this week’s task highlighted so well for me, though, was exactly how accustomed (or is the correct word really “trained”?) I’ve become to these embedded features and design elements. By employing an alternate design, the game we were tasked to play purposefully made me recognize what I am typically used to engaging with on these interfaces. The task also gave rise to a pretty strong emotional response. I became increasingly frustrated and even anxious at not being able to complete the tasks required of me in a timely manner. Everything took a second or even a third try before I got it right and was able to move on to the next screen.

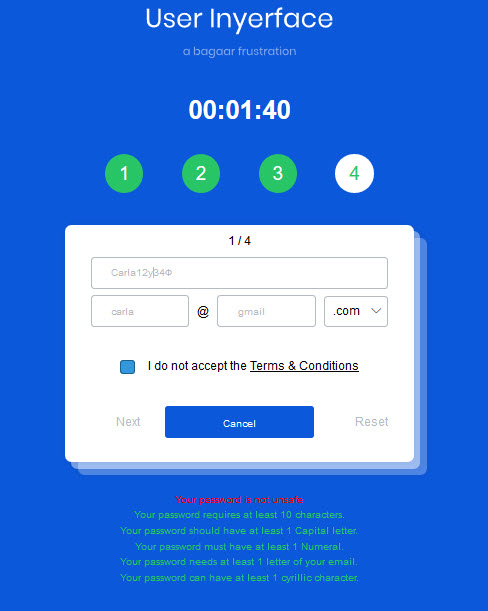

Right on the very first page, I had to remove the pre-filled text before adding in my details to “register”. I had to Google a Cyrillic letter to add to my password, match letters to my email address, use annoying drop-down lists for the domain name and go into the terms and conditions to accept them. The deathly slow scrolling rate through the terms and conditions, along with the ticking clock and a pop-up window reminding me that time is a limited resource, was the beginning of my anxiety-filled experience. On top of that, it took me some time to figure out that to progress I had to click on “next”, which was placed rather oddly on the left-hand side of the window (as opposed to a more natural central or right-aligned position, which would be in line with the convention I am used to).

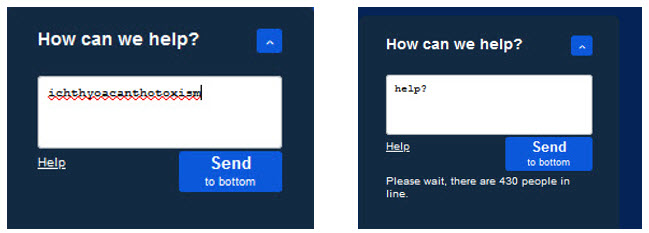

Window after window came filled with similar annoyances (why have an age scale that goes to 200? Who lives that long?). I even wanted to ask the chatbot for help: a cheat for the game… I was naive but hopeful (okay, maybe a little desperate). Whatever I typed ended up being complete gibberish. Another time, it told me to wait because there were 430 people in line. I came to accept that I was truly alone.

I made it in the end but felt a little emotionally drained afterwards. I was overstimulated and exhausted by the amount of effort I had to put in to progress between the screens.

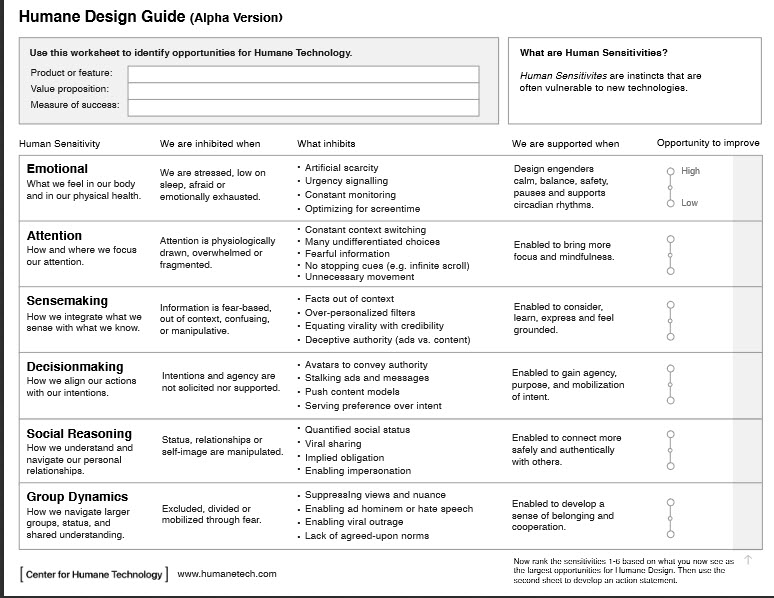

What I take from the experience of playing this game is the impact that digital interactions have on me. The very design of a website clearly has the ability to affect me emotionally, whether positively or not. I think this disabused me of the idea that these spaces are neutral ground in some way. They contain a lot of elements that have been purposefully used to elicit some kind of response in me (whether that is for my benefit or theirs). Is there an alternative though? On the site for the Center for Humane Technology (spearheaded by Tristan Harris), there is a suggested design framework that helps developers take six human sensitivities into account, to counter the strong responses elicited through interaction with technology and create more balanced, neutral spaces. Take a look at the snapshot below of this framework.

To conclude, I watched a talk this week by an expert in XR data privacy, and it seems that many of the concerns highlighted in this week’s module, regarding the data collected and the design of social media apps and websites to manipulate our behavior, are being carried over into Virtual and Augmented Reality spaces too. Just take a look at this article to gain some insight on this. I’m afraid that these are problems that simply cannot be ignored. They will require each of us to educate ourselves on the design practices being used to manipulate our attention, to demand more transparency from tech companies on how they operate, and to pressure governments to institute and uphold laws that better protect our rights and information.