Implications of AI-informed decisions:

“Software has framing power: the mere presence of a risk assessment tool can reframe a judge’s decision-making process and induce new biases, regardless of the tool’s quality.” (Porcaro, 2019).

I had no idea of the extent to which AI is already used in the justice system, in arrests, and in assessing offenders and arrested individuals. While I understand how AI can help analyze legal, safe, and unsafe situations on public streets, I feel that each arrest should be decided based on the situation at hand and live body-camera footage. As the quotation from Porcaro suggests, AI risk assessment tools also reframe the legal decision-making process itself.

I feel that I cannot reach a final position on AI-informed decisions, because I would first like to study statistics focused on Canadian criminalization cases, jailing, arrests, and violence. I am not a professional in the legal sector; therefore, I cannot simply claim that AI has not saved lives in extreme circumstances. AI can have either a negative or a positive effect on law-enforcement decisions.

Consequences that AI-informed decision-making brings to certain aspects of life:

In the podcast “Reply All” (Vogt, 2018a, 2018b), crime, crime rates, hate crime, drugs, and corruption all meld into one pot that becomes its own beast, specifically in NYC. We cannot compare these statistics to the general statistics of the United States or Canada. Because such cities face crime on a scale that national averages do not capture, cities with high levels of crime may need the support of AI technology. That being said, ongoing instructional and experiential training in critically weighing AI-informed decisions is crucial for working toward ethical, logical, and safe responses.

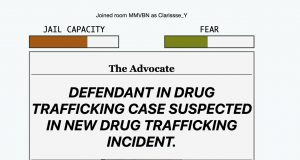

During the Detain/Release simulation (Porcaro, 2019), I constantly reminded myself of jail capacity, so I prioritized the likelihood of reoffending, violence, and danger to society. I can see how AI algorithms may serve society; at the same time, AI is not a technology we should depend on, but rather one that can generate (hopefully fair) background information.

References:

Porcaro, K. (2019, January 8). Detain/Release: Simulating algorithmic risk assessments at pretrial. Medium.

Vogt, P. (2018a, October 12). The Crime Machine, Part I (No. 127) [Audio podcast episode]. In Reply All. Gimlet Media.

Vogt, P. (2018b, October 12). The Crime Machine, Part II (No. 128) [Audio podcast episode]. In Reply All. Gimlet Media.

Your insights regarding the use of AI in the justice system are quite thought-provoking. Prior to this module, I was not aware that AI was being used in the justice system. The impact of AI on decision-making processes is nuanced, as highlighted by Porcaro. Though AI plays a complex role in arrests and criminal profiling, there is still a need for human involvement in decision-making. The lack of specific data concerning AI and Canadian criminalization cases is a valid concern. As Dr. Shannon Vallor discussed in her talk (Santa Clara University, 2018), AI is a mirror that reflects who we are: our reality, our values, and more. I completely agree with the idea of tailoring AI solutions to specific needs. Your cautious approach toward AI integration aligns with responsible implementation.

References:

Santa Clara University. (2018, November 6). Lessons from the AI Mirror Shannon Vallor [Video]. YouTube.

Hey Nisrine, thanks for your comment. I feel that engaging clearly and critically with Canadian statistics and politics is very important, especially in a time of heightened political and cultural awareness. This module connects deeply to media convergence, which I found very interesting.