Week 11 – Predictive Text

I used Twitter on my iPhone to start a thread using the prompts suggested in this task. After starting the thread with the set prompt, I used only words chosen from the auto-generated suggestions; in each case there were three choices.

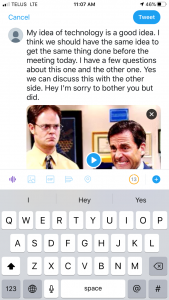

Example 1 – The prompt “My idea of technology”…

The statement is almost nonsensical, despite my best efforts to make word choices that would convey a coherent message. The statement does not represent how I would normally communicate. It was interesting that, at each fork in the road, I was attempting (subconsciously) to take the positive choice. I was able to keep doing so, but that effort did not seem to be relevant to the substantive meaning. The gif was my attempt to convey my reaction to composing those sentences.

One note: after my final sentence you can see the next set of suggestions. The choices to begin the next sentence were I, Hey or Yes. Often none of those choices would have been the word I wanted to start that next sentence with.

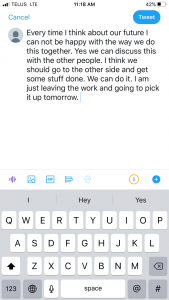

Example 2 – The prompt “Every time I think about our future”.

Again I used Twitter, and after the prompt I used the suggested text. And again, it was difficult to create a coherent conversation about the prompted issue. In this example I consciously chose to let the discussion take a negative turn, to see whether that changed the outcome of the exercise. In my opinion it did not.

Other comments:

As posited in the videos we watched this week, including Malan (2013) and O’Neil (2017), when using predictive text the user is beholden to the person(s) who programmed the algorithm that delivered the word choices. And the user is beholden to them without any information or reasoning as to why those choices were provided. Are the word choices on my iPhone the same for all iPhone users, or for all iPhone users on the same version of iOS? Are the word choices changed because Apple (or someone) knows that I am female, or knows my age? Were any of the word choices based on words I often use? (I think not.) Anecdotally, one of my sisters lives on Hillholm Road, which the iPhone rather unfortunately autocorrects to Hellhole Road. And the phone will continue to make that autocorrection. Yikes.

I have also noticed, in just the past year or so, my Gmail account providing a set of stock suggested replies to emails I receive. For the most part the choices are pretty good. They are polite, grammatically correct, blasé replies. Examples include: “I will get back to you”; “Received with thanks”; “Thank you I will respond as soon as possible.” But again, I don’t know if the suggested phrases are selected for me, or if they change based on the content of the email. Are there certain trigger words that prompt a reply such as “Received”?

Finally, predictive text has become a crutch I use with respect to typing and spelling. I am fortunate that I can type quickly (I am of the age where one learned to type with both hands, all 10 fingers). But for the past number of years, I have learned that I can continue to type quickly and auto-correct will, many times, correct my typing errors and, I say with some shame, correct my spelling errors. Sometimes I am actually not sure of the spelling of a particular word, but auto-correct swoops in and fixes the spelling for me. This is helpful to me. And I expect it would be helpful for students. Do I think this is a problem?

The last issue: is the use of predictive text in public writing spaces a problem, for example in politics, academia, business or education? On the surface, the answer is not necessarily. Everyone can choose to use or not use predictive text when composing a message. However, for example in politics, and as enunciated in particular by O’Neil (2017) in her video and in her article “How can we stop algorithms telling lies?”, there are some risks that can flow from the use of algorithms and predictive text. Can this leak into the simple act of providing predictive text in Twitter? Perhaps. As we heard this week, gender issues (nurses are “she” and doctors are “he”) are just the tip of the iceberg, but attentiveness to the issue will keep me on my toes.