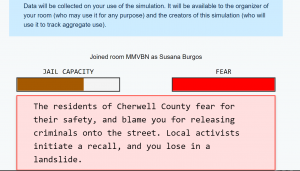

I chose option one for several reasons. First, I love playing games. Second, I have created both low- and high-fidelity simulation games for Personal Support Worker students, so I wanted to give this game a try. Let me share my experience with you. In this game, I was a county judge at bail hearings and had to decide, based on the information provided to me, whether to detain or release each individual. In my first hearing, I released the individual despite his risk assessment because I read that he could lose his job and family. I then paused and realized I had not made the right decision. I needed to look more closely at the evidence, for example, whether individuals were at high or low risk of failing to appear in court and whether they were likely to reoffend. As I reflected on the evidence for each individual, I saw that the game relies on algorithms: the risk assessment made “predictions on future events that can take place with these individuals based on historical patterns” (O’Neil, 2017). It frustrated me that even when I released individuals with a low-risk assessment, the game told me I had made a bad decision. Although you could not see the individuals’ faces clearly, they were from a minority group. The evidence used to judge whether these individuals posed a real risk is therefore a clear example of what O’Neil (2017) calls “bad algorithms that unintentionally promote negative cultural biases.”

This game reminded me of last week’s activity. I felt that week ten’s game had a hidden goal that made it tricky, and its repetitive, misleading questions are what Brignull (2011) calls dark patterns.

This simulation game was deceptive in a way that recalls Volkswagen’s malicious and illegal algorithm, which was designed to cheat emissions tests by concealing nitrogen oxide emissions that exceeded legal limits and could harm the environment. Likewise, the simulation game’s algorithms were deceptive in their biased risk assessments of individuals from a specific cultural group. In real life, such bias could unjustly harm specific individuals.

Seeing the bias in the simulation’s predictive policing algorithm made me rethink my own biases, because our brains are sensitive to the information presented in text. For example, when text repeatedly uses criminalizing language about individuals from certain cultures, it can shape our thinking and lead to unfair biases toward those individuals.

This task was a sobering reminder to get off my moral high horse, check my assumptions about people, and recognize the significant role technology plays in creating biases. Maybe I am less mindful than I initially thought.

References

Brignull, H. (2011). Dark patterns: Deception vs. honesty in UI design. A List Apart, 338.

O’Neil, C. (2017, July 16). How can we stop algorithms telling lies? The Observer. Retrieved from https://www.theguardian.com/technology/2017/jul/16/how-can-we-stop-algorithms-telling-lies