I thought this week’s topic was fantastic, and it really helped me understand how algorithms function, as well as their potential and their dangers. The articles by O’Neil (2016, 2017) and the podcast by McRaney (n.d.) were particularly enlightening to me.

Rather than writing a single microblog post with predictive text assistance, I was interested in trying out different text predictors to see what results I would find. I wrote the microblog post using two websites, Inferkit (Case 1) and Deep AI (Case 2), and my cellphone (Case 3). I did the cellphone post in Spanish, given that it is the language set on my phone.

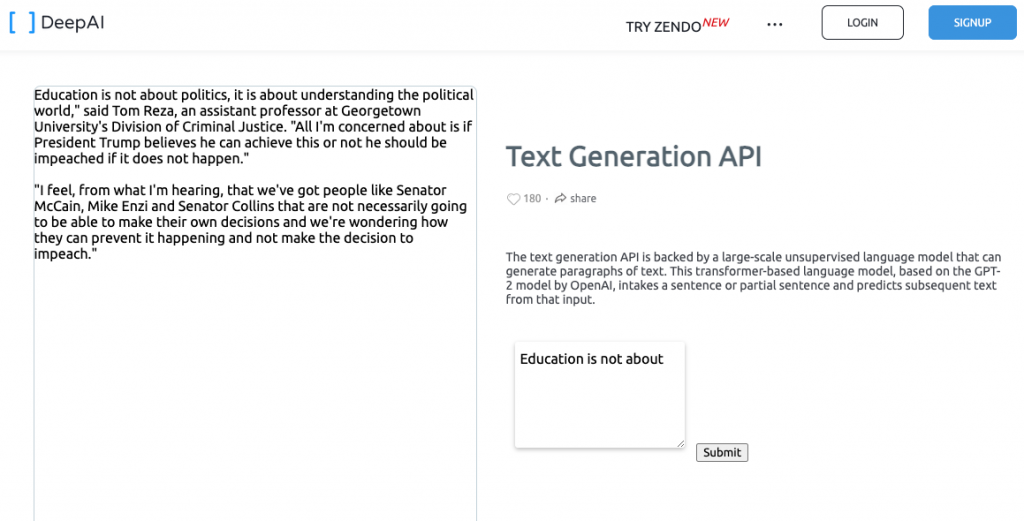

I was particularly surprised by the text created using Deep AI (Case 2), as it had a political tone that felt the most different from the way I would express myself. The post contains a quote that critically mentions Donald Trump. Anyone who knows me would know that I rarely speak about politics or politicians; it’s simply not an interest of mine. However, if I were to post this on my social media platforms, it could easily be inferred that I have an inclination for political discussions and that I hold a specific position about a politician. McRaney’s (n.d.) podcast mentions that algorithms can have unintended consequences and that we should think carefully about how they can affect people. The post is not dangerous in this explorative educational context, but I can imagine how algorithms could produce statements with political, sexist, racist, or otherwise ethically charged implications that have nothing to do with our ways of thinking, creating tension in our lives and affecting other people.

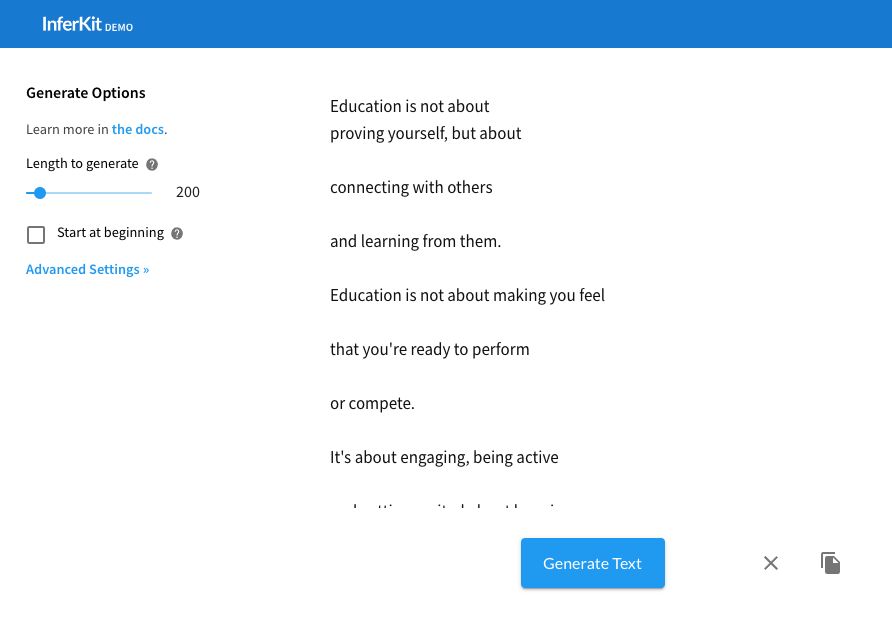

The text created using Inferkit (Case 1) was also surprising, particularly because this platform didn’t create text on a word-by-word basis, but rather in whole sentences. The result is a very well-articulated and philosophical idea about the meaning of education. This made me think about Dr. Vallor’s (Santa Clara University, 2018) definition of AI as machine-augmented cognition for humans, because the experience of creating text through Inferkit felt like a more potent way of creating ideas compared to the word-by-word approach. I understand that Dr. Vallor (Santa Clara University, 2018) refers more directly to using machines to perform extremely complex tasks in seconds, but I think the definition also applies in this case. This makes me wonder about ethical issues around honesty in educational contexts. I actually went beyond the 250-word limit to see what else came up, and the final result contained more complex, well-articulated ideas. It even included quotations. I thought about how students could use this tool to cheat in school, which is not only an ethical issue but also an educational one, as it means skipping tasks that are imperative for developing the skills (for example, critical thinking or abstraction) needed to be successful in our society.

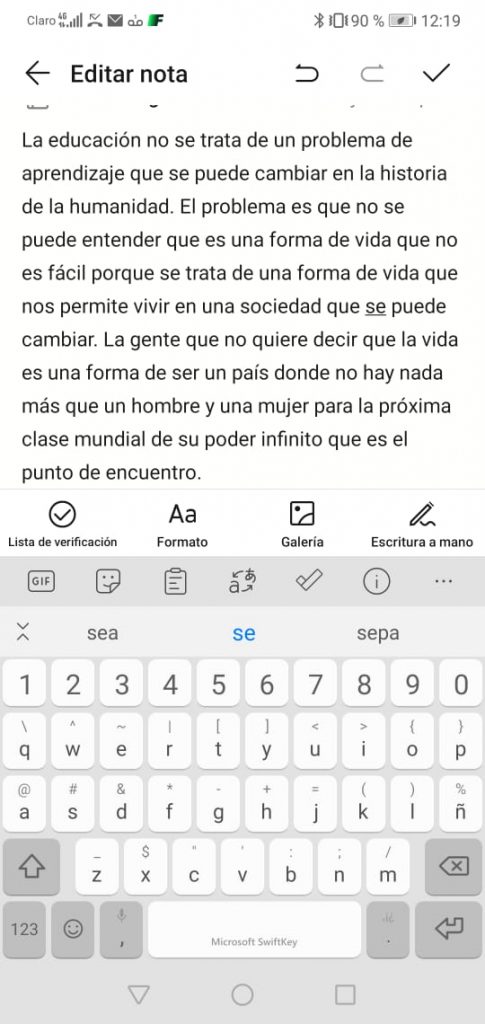

The text created using the cellphone (Case 3) provided insight into aspects not found in the other two cases. I observed that, in this case, the algorithm was influenced by texts I had previously written while using my phone on a daily basis. This gave me a clearer experience of what McRaney’s (n.d.) podcast describes: algorithms predict the future based on the past. Some words, such as “vida” (“life”), come up a lot when I communicate through instant messaging, and it was interesting to see how often it was suggested while doing this task. I appreciate this kind of prediction and have found it helpful when producing text in instant messaging. I understand now that this is one of the functions of algorithms, as they are created precisely to predict outcomes. Yet I also see how, in other contexts, wrong ideas from the past (which could be racist, unethical, or sexist) could affect decisions we make in the future and create problematic feedback loops in algorithmic structures. This is the danger for which transparency and care from designers are urgently needed.
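To make the idea of “predicting the future based on the past” concrete, here is a minimal sketch of a word-by-word predictor. It assumes a simple bigram counting model, which is almost certainly far simpler than what a real phone keyboard uses. The function names (`train_bigrams`, `suggest`) and the message history are hypothetical and invented purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(messages):
    """Count, for each word, which words followed it in past messages."""
    followers = defaultdict(Counter)
    for message in messages:
        words = message.lower().split()
        # Pair each word with the word that came right after it.
        for current_word, next_word in zip(words, words[1:]):
            followers[current_word][next_word] += 1
    return followers


def suggest(followers, word, k=3):
    """Return up to k words that most often followed `word` in the past."""
    counts = followers.get(word.lower(), Counter())
    return [candidate for candidate, _ in counts.most_common(k)]


# Hypothetical message history (an assumption, not my real messages).
history = [
    "la vida es bella",
    "la vida es corta",
    "la vida sigue",
    "la educación es importante",
]

model = train_bigrams(history)
print(suggest(model, "la", k=1))    # ['vida']
print(suggest(model, "vida", k=1))  # ['es']
```

Because “vida” followed “la” more often than any other word in this invented history, it becomes the top suggestion. The same mechanism illustrates the feedback loop described above: whatever dominated the past data, good or bad, is what the model keeps proposing.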

I was also left with the impression that the Spanish predictor was not as sophisticated, which makes me wonder about the technological hierarchies that exist between cultures, as seen in other areas such as MOOC production.

Case 1: Inferkit

Text: Education is not about proving yourself, but about connecting with others and learning from them. Education is not about making you feel that you’re ready to perform or compete. It’s about engaging, being active, and getting excited about learning.

Case 2: Deep AI

Text: Education is not about politics, it is about understanding the political world,” said Tom Reza, an assistant professor at Georgetown University’s Division of Criminal Justice. “All I’m concerned about is if President Trump believes he can achieve this or not he should be impeached if it does not happen.”

Case 3: Cellphone

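Text: La educación no se trata de un problema de aprendizaje que se puede cambiar en la historia de la humanidad. El problema es que no se puede entender que es una forma de vida que no es fácil porque se trata de una forma de vida que nos permite vivir en una sociedad que se puede cambiar.

English translation: Education is not about a learning problem that can be changed in the history of humanity. The problem is that it cannot be understood that it is a way of life that is not easy, because it is a way of life that allows us to live in a society that can be changed.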

References:

McRaney, D. (n.d.). Machine Bias (rebroadcast). In You Are Not so Smart. Retrieved from https://soundcloud.com/youarenotsosmart/140-machine-bias-rebroadcast

O’Neil, C. (2016). Weapons of math destruction: How big data increases inequality and threatens democracy (First edition). New York: Crown.

O’Neil, C. (2017, July 16). How can we stop algorithms telling lies? The Observer. Retrieved from https://www.theguardian.com/technology/2017/jul/16/how-can-we-stop-algorithms-telling-lies

Santa Clara University. (2018, November 6). Lessons from the AI mirror Shannon Vallor [Video]. YouTube. https://www.youtube.com/watch?v=40UbpSoYN4k&ab_channel=SantaClaraUniversity