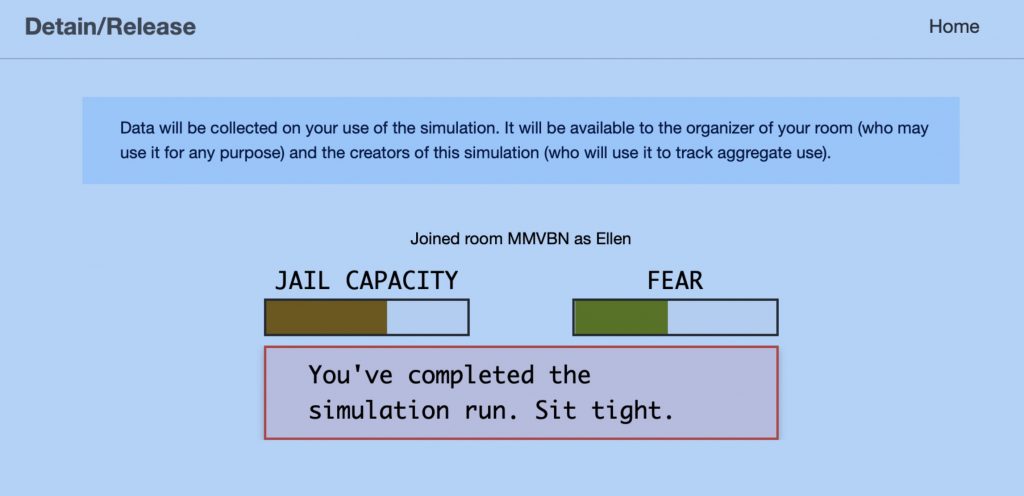

This task surprised me. Initially, I was grateful that each individual’s identity was blurred; however, I noted that one might still make decisions based on whether the individual was a person of colour. Gender was also revealed, and that too may introduce bias. I began by taking a mental inventory of my hard lines for releasing and detaining. Are they at risk of being violent? Are they likely to re-offend? However, as the jail capacity and fear indicators changed, I began to make decisions that didn’t align with my values. I released more people, knowing that this might put others at risk.

Additionally, the lack of data doesn’t allow for an in-depth representation of who the person is. The algorithm provides a one-dimensional response to a complex and layered problem. The simulation may say whether a person is supporting a family, but it doesn’t explore whether they do so sufficiently or meet the emotional and physical needs of their family. An individual may be providing food but may be abusive or neglectful in another capacity. The indicators would benefit from elaboration to help one make a more informed decision. Similarly, if a person is arrested with drugs, does that refer to personal use of marijuana or to selling crack in a local park? The information is vague and misleading.

Finally, jail capacity is a troubling value to place at the top. Several factors might contribute to a facility being at capacity. Perhaps the facility is simply too small for the population it serves. Judicial decisions made on behalf of a given population need to be made in relation to the crime and not on whether there is room in the jail.

I see benefits and enormous risks in such algorithms. In using data to inform decisions, one must ensure that the information is all-encompassing, free from bias and representative. Moreover, the way decisions are made and represented needs to be precise. For example, when the simulator indicates ‘Fear,’ what does that even mean? Fear for whom?

There are real consequences to ‘broad strokes’ when it comes to gathering and creating data for algorithms. Organizations need to ensure that data collection platforms allow for clear and bias-free engagement, separated from potentially persuasive features.