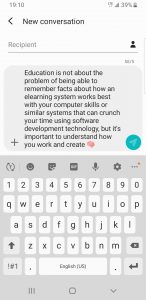

Auto-complete predicts many possible paths for sentence construction and contrived meaning. Except for the brain emoji that I deliberately selected at the end of the micro-blog, how much of the decision-making process was mine? How much of the auto-completed text was predicted from my past activity: texting, writing email, searching with Google in the Chrome mobile app, and using other mobile apps that share data? Would that make this auto-complete micro-blog more my own creation, shaped by selection over time (Darwinian?), or is it still mostly a machine product built from hints fed to me according to my LinkedIn profile (Connect with me!) and ad opt-ins? Am I an autonomous agent, or might there be agentive and biased algorithms at work here?

Education is not about the problem of being able to remember facts about how an elearning system works best with your computer skills or similar systems that can crunch your time using software development technology, but it’s important to understand how you work and create

At first glance this reads like a poor browser-based translation and seems benign. If agentive algorithms are at play, they are clearly, and appropriately, appealing to my educational, personal, and professional interests, as evidenced by thousands of searches, responses, posts, and opt-ins, plus adjacent hyperlinked explorations. It is not my voice, but it is not so far off as to be alarming. I recently posted a data set with Google Data Studio and received an AI prompt to translate my work into Danish. Perhaps translating into Danish and back again would produce this auto-complete sentence. Algorithms originally developed with human intent are now crunching big data sets and are no longer managed with human understanding. There is something not quite right. Does blindly opting in to every app service, and so allowing full surveillance, make the loss of authorship ethical? Are my texts to colleagues, friends, and family authentically mine?

My online activity may be measured and counted, but I am not a victim of algorithmic bias as described by Cathy O’Neil (2017). O’Neil describes a scenario in which a software tool intended to help solve crime, whilst performing as intended, targeted neighbourhoods with prior cases of physical crime and overlooked crimes like financial fraud in nearby privileged communities. O’Neil calls this predictive tool a “weapon of math destruction”: “math-powered applications that encode human prejudice, misunderstanding and bias into their systems.”

Just how much bias is unjust? Do you know what a CEO looks like? What is your first impression? Using Google Search in a Chrome browser, search for “CEO” and look at the image results. Internet algorithms search personal profiles, job descriptions, and images. The majority of the top 100 images for “CEO” show white men with short hair, aged roughly 45 to 55, wearing blue suits. Are search results similar in populous, technologically advanced countries like India and China? And can women become chief executive officers?

Auto-complete favours my browser search and professional networking preferences, but not my syntax, most-used vocabulary, or key terms from related email, text, and LinkedIn activity. It is certainly networked, but that network is not quite right. For example, if you paste the auto-complete statement into Google Search in Chrome, you will find a host of elearning systems, ads for Learning Management Systems (LMS), and related blogs and technology news.

Because I am enrolled at UBC, I now often find posts from Stella Lee associated with my elearning, LMS, and AI searches. I do not mind. Stella’s writing style is succinct and the information is relevant (for me). That search was also timely and opportunistic: it surfaced a relevant 2020 Inside Higher Ed article by Peter Herman about the future of online learning, or rather, “Online Learning Is Not the Future” (Herman, 2020). Other search results in Chrome look very much like LinkedIn ads for an LMS, outlining strengths and weaknesses for compliance training as lists of pros and cons. In 2021 I have also regularly ‘liked’ posts by Robert Luke of eCampusOntario, whose networked services centre on LMSs, micro-learning, and digital credentials. There is no coincidence, only design. My contributions, searches, and likes will be selected to support online learning in Ontario, and in turn they will offer me the industry-related information I seek, which I will validate with my professional opinions, rankings, and ratings, and which may lead to my next job.

Herman, P. C. (2020, June 10). Online learning is not the future. Inside Higher Ed. Retrieved from https://www.insidehighered.com/digital-learning/views/2020/06/10/online-learning-not-future-higher-education-opinion

O’Neil, C. (2017, April 6). Justice in the age of big data. Ideas.TED.com. Retrieved from https://ideas.ted.com/justice-in-the-age-of-big-data/