The prompt I selected for this exercise was “Every time I think about the future…”. The prompt itself evokes memories of reading blogs as well as speculative fiction and non-fiction: such writings consistently addressed everything from socioeconomic issues to technological developments, often with a skeptical and even dystopian tone. Of course, those writings were not the products of predictive text; rather, they benefitted from writers’ deliberate word choices and structure.

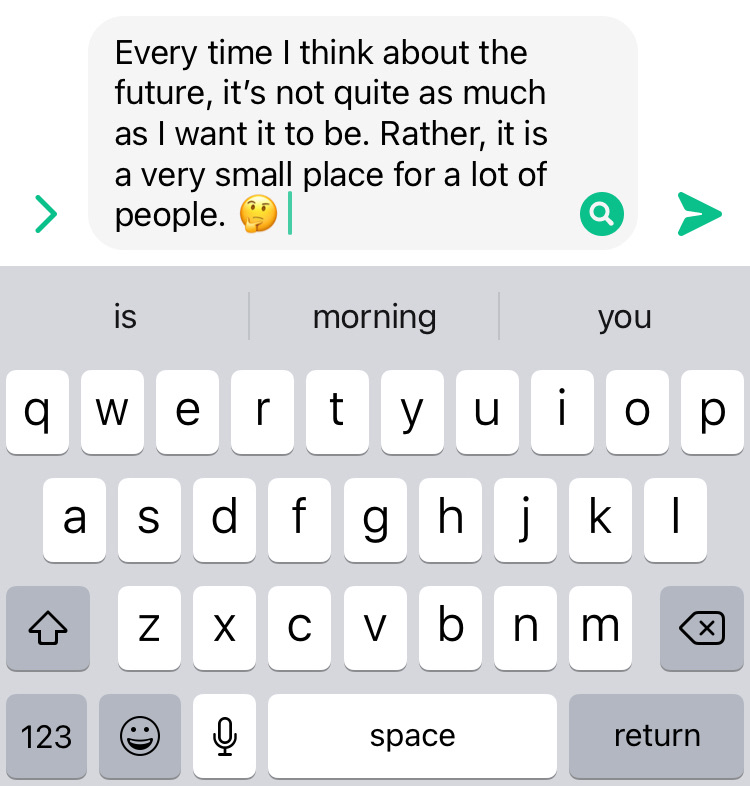

Below is the result of my predictive text prompt:

Admittedly, the predictive text did capture some of my common verbiage, mostly the words “quite” and “rather”. However, the vocabulary it recommended did not really reflect the words I would have used to describe my thoughts about the future. For instance, I would certainly not have used the phrase “small place” in this context; I would perhaps have written something like “competitive place” or “difficult place” instead. Also, in the first sentence, “…not quite as much as I want…”, the suggestions offered no suitable adjective to use in place of “much”. When I attempt to “translate” the statement, the predictive text seems to be articulating the thought that the future world will not offer as much abundance and opportunity for a growing number of people. In this respect, I would say it captures my sentiments well, despite the awkward vocabulary and syntax.

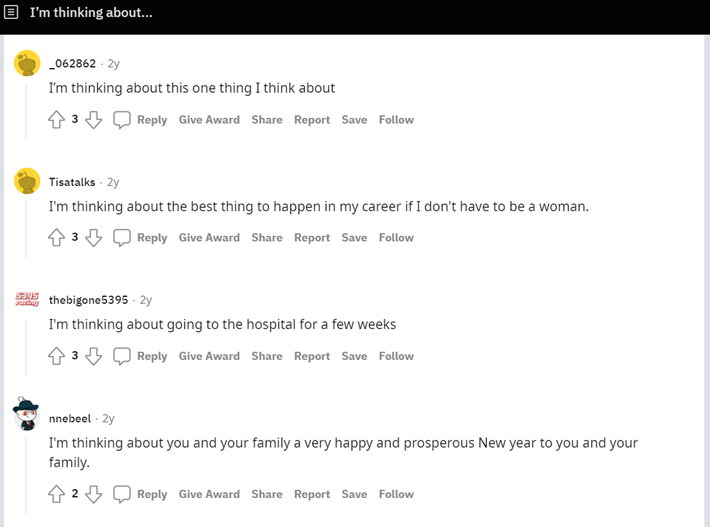

Screenshot of prompt “I’m thinking about…” from r/predictivetextprompts

This prompt, “I’m thinking about…”, like many of the others on r/predictivetextprompts, resulted in mostly nonsensical statements and even non sequiturs. I imagine that the vocabulary in each of these statements reflects the individual user to some degree, but it does not capture the “voice” with which most people write, even when they write informally.

Predictive text has massive implications for politics, business, and education. McRaney (n.d.) observed that his prompts “The nurse said…” and “The doctor said…” had very gendered outcomes, being followed by “she” and “he” respectively. The predictive text reflects the traditional assumption that nurses are women and doctors are men, reinforcing a subtle yet insidious form of sexism. In my view, the greatest danger of algorithms in public writing is the perpetuation of prejudices, including sexism, homophobia, and racism. McRaney’s (n.d.) podcast notes that algorithms need bias in order to function, but that humans must differentiate between ‘just’ and ‘unjust’ bias: in essence, designers of algorithms are responsible for mitigating the unintended consequences of bias in their work. As alluded to in last week’s blog entry, politics is an arena especially vulnerable to echo chambers, particularly those that amplify these more ‘unjust’ biases.
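To make the nurse/doctor example more concrete, here is a minimal Python sketch of how a purely frequency-based predictor could reproduce such a pattern. It is an illustration only, not how any real keyboard or the system McRaney discusses works: the corpus is invented, and the names `CORPUS`, `train`, and `suggest` are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus, invented for illustration only.
# It is deliberately skewed: "nurse said" is mostly followed by "she",
# and "doctor said" is mostly followed by "he".
CORPUS = [
    "the nurse said she would call back",
    "the nurse said she was busy",
    "the nurse said he would call back",
    "the doctor said he would call back",
    "the doctor said he was busy",
    "the doctor said she would call back",
]


def train(sentences):
    """Count which word follows each consecutive pair of words."""
    table = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for first, second, nxt in zip(words, words[1:], words[2:]):
            table[(first, second)][nxt] += 1
    return table


def suggest(table, first, second):
    """Return the most frequent next word, or None if the pair was never seen."""
    counts = table.get((first, second))
    return counts.most_common(1)[0][0] if counts else None


model = train(CORPUS)
print(suggest(model, "nurse", "said"))   # she
print(suggest(model, "doctor", "said"))  # he
```

The predictor has no notion of gender at all; it simply returns whatever followed most often in the text it was given. A skew in the source text therefore becomes a skew in the suggestions, which is one way to read the point that bias is built into how such algorithms work.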

References

McRaney, D. (n.d.). Machine Bias (rebroadcast). In You Are Not so Smart. Retrieved from https://soundcloud.com/youarenotsosmart/140-machine-bias-rebroadcast

r/predictivetextprompts. (n.d.). Retrieved July 12, 2019, from Reddit website: https://www.reddit.com/r/predictivetextprompts/

Hi Amy,

I found the quote that you pulled from McRaney’s podcast particularly interesting. It was one that I had missed in my own listening, but I think it correlates well with the discussion of the weights of connections and nodes from our module on networking. Because the people who have the privilege of access shape the weight of nodes and the hierarchy of information online, algorithms that make sense of the network help to reproduce the inequities that access produces. I think that your point about human users needing to be able to tell the difference between just and unjust bias reinforces the need to educate ourselves about how technology, such as algorithms, works, and the need for critical thinking to be taught in the modern educational curriculum. As we saw in the Attention Economy task, there are all sorts of dark patterns out there!

I am curious whether you saw any examples of biases being reproduced in your own brief experience with algorithms. Thanks for sharing!