Resisting the Attention Economy. (2020)

The “Attention Economy” has become a major issue worldwide, affecting billions of people. It shapes how people use the internet, how they communicate, how they shop, what news they receive, how they vote, and how they think. In his 2017 TED Talk, Tristan Harris says, “What we don’t talk about is a handful of people working at a handful of technology companies through their choices will steer what a billion people are thinking today” (Harris, 2017, 00:41). This is a serious problem, and many people in the industry are raising red flags. In most cases, it is not the result of bad intentions but of business models and algorithms built with the single goal of making as much profit as possible. Most web and UX designers build websites with good intentions, but not all of them do. Some individuals and groups have used these algorithms in bad faith to manipulate and take advantage of people for money or power, and users may not even know they are being manipulated until the damage is done. Even good intentions can sometimes produce harmful results. There are many ways to design websites that grab our attention, alter our behavior, and nudge us into clicking links or visiting other sites. Users sometimes want these effects and sometimes do not, but most often they are unaware of what is happening behind the scenes. That is why it is important to spread the word, so that users can recognize these techniques and limit unwanted outcomes.

“User Inyerface” is a web-based game designed to show how manipulative and tricky graphical user interfaces (GUIs) can be. It contains web design elements meant to draw a user’s attention and/or steer them in a certain direction. Our task this week was to play the game and reflect on the experience. When I first went to the website, I thought it just had a bunch of “bugs” that kept it from working correctly, but I then realized it was designed to fool the user. For example, the first page has a big green circular button with the word “No” inside. Normally, a green button like this is the one you click to start a game, because most people associate green with “Go.” However, when I clicked it, nothing happened. I was drawn to the button because it stood out so much, yet it had no function. Also on the first page was the text “Please click HERE to GO to the next page.” Users normally click on underlined or highlighted words, but in this case I had to click on the word “HERE” to reach the next page. Even at the very beginning of the game I was confused and frustrated. What if clicking on these buttons or words had taken me to an unwanted site or exposed some of my personal data? I would have had no way of knowing.

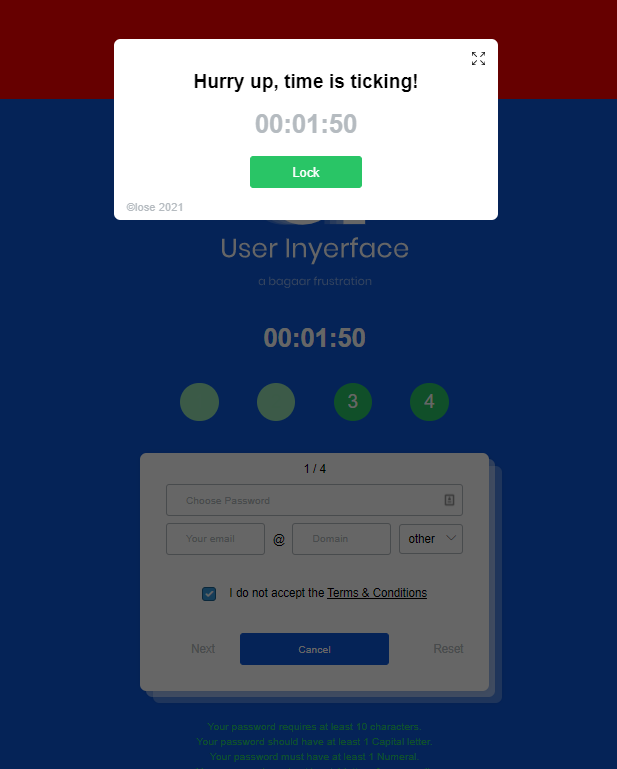

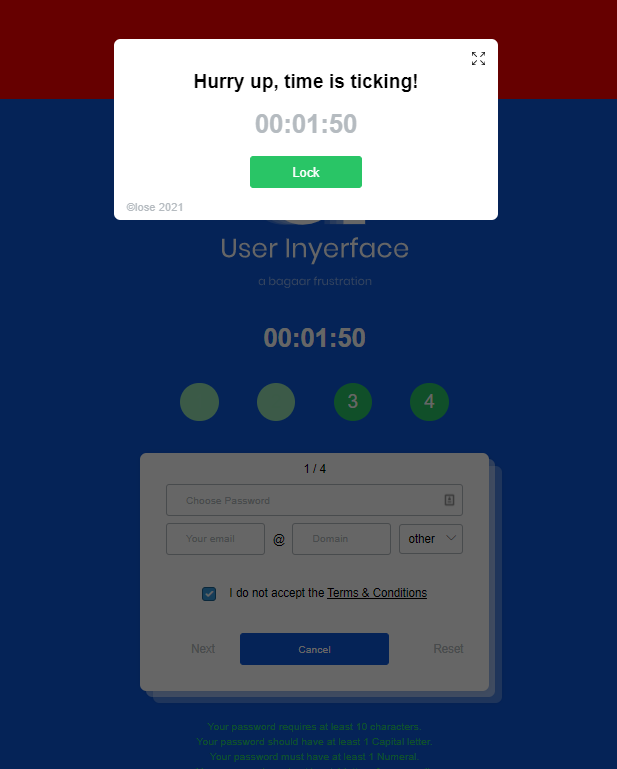

Once I got to the next page, I had to enter a password and an email address and accept the terms and conditions to continue. This raised a few issues for me. First, I was wary of giving my email address to an unknown website, so instead of using my own, I made one up. Second, a timer counted how long I was taking to fill out the form, and below it there were flashing numbers. This immediately changed how I played the game: I typed faster and became a bit nervous. Unfortunately, I was not quick enough, and a warning window popped up (see below).

This warning pop-up was also confusing because I couldn’t get back to the main page. I assumed that clicking the green button labeled “Back” would return me to the main page, but it did nothing. Instead, I had to click the word “close,” written in small text in the bottom corner of the window. Had I not tried clicking “close,” the game would have been over for me. To make it even more frustrating and nerve-racking, the pop-up displayed the message “Hurry up, time is ticking!”

As I continued through the game, I encountered more and more confusing forms and prompts. The toggles, checkboxes, and buttons did not work the way they normally do on websites. The game’s design clearly affected my behavior through persuasion and manipulation, which shows how easily people can be persuaded or manipulated into doing certain things on the web. It is the architecture and algorithms behind the scenes that allow a website to do this. See a video of my gameplay below.

This week’s module was very eye-opening for me, even though I have watched “The Social Dilemma” and already knew about the attention economy, online privacy, and persuasion algorithms. In her 2017 TED Talk, Zeynep Tufekci explains that the same algorithmic architecture used in advertising to persuade us to consume is also being used to manipulate our thinking and our politics (Tufekci, 2017). She says, “As a public and as citizens, we no longer know if we are seeing the same information or what anybody else is seeing. And without a common basis of information, little by little public debate is becoming impossible” (Tufekci, 2017, 15:28). She also says, “The algorithms do not know the difference. The same algorithms that are put upon us to make us more pliable for ads are also organizing our political, personal, and social information flows” (Tufekci, 2017, 18:35). Every time I hear about this, it makes me frustrated and a bit angry, yet I still continue to use the web and some of these controlling and manipulative social media platforms. It shouldn’t have to be this way. The web shouldn’t be a place of deception and manipulation just so organizations and companies can gain profit, power, and control. I am hopeful that the world is waking up to these issues and that citizens will continue to push back. Individuals should have the right to their privacy, to their own data, and to the same factual information.

References

Harris, T. (2017). How a handful of tech companies control billions of minds every day. Retrieved from https://www.ted.com/talks/tristan_harris_the_manipulative_tricks_tech_companies_use_to_capture_your_attention?language=en

Tufekci, Z. (2017). We’re building a dystopia just to make people click on ads. Retrieved from https://www.ted.com/talks/zeynep_tufekci_we_re_building_a_dystopia_just_to_make_people_click_on_ads?language=en

User Inyerface. (2019). User Inyerface – A worst-practice UI experiment [Image]. Retrieved from https://userinyerface.com/

Zigmond, D. (2020). Resisting the Attention Economy [Image]. Tricycle.org. Retrieved from https://tricycle.org/magazine/jenny-odell-attention-economy/