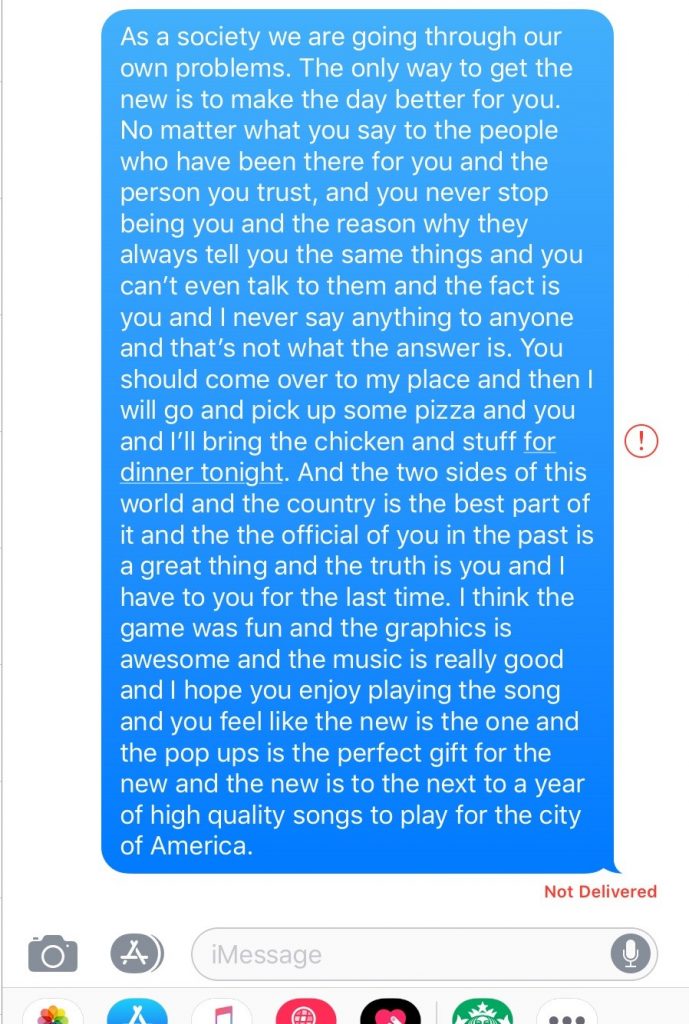

When I wrote my microblog entry, the first thing I noticed was how difficult it was to write anything serious. I started the activity seeking to address how we, as a society, spend a lot of time editing our conversations before we send them via text. Instead of being able to relay that message, I had to choose from very simple concepts and words. “You”, “I” and “and” came up very frequently, and it was sometimes hard to break out of the pattern of using one of those three words. Because the predictive text was so simple, I am hard-pressed to say I have ever read anything like what I wrote, except in a text message or a simple note a friend may have given me in grade school. The most complex words I produced were probably “pizza”, “chicken”, “music”, “graphics” and “pop-ups”, which suggests that the algorithm my iPad uses has adapted to repeat what users have selected in the past (McRaney, n.d.).

The statements the predictive text produced differ from how I would usually express myself because they don’t follow a formula. I have been tutoring K-12 writing online for a couple of years, and I often go over how to state an argument in writing. When a writer makes a claim, such as “As a society, we are too concerned with controlling how we are seen”, following it with evidence (supporting ideas) and analysis (a statement that ties everything together) strengthens the argument. Choosing from the options the algorithm gave me, I could produce nothing but a series of unrelated claims. Writing is by nature a creative process, and a writer’s strength lies in their ability to relate ideas to one another artfully. Predictive text algorithms aren’t designed to replicate this process; they exist for speed and convenience. An AI’s limits begin where unfamiliarity begins (Santa Clara University, 2018).

Using algorithms in public spaces is dangerous because it carries a serious risk of cultural exclusion. When I was writing my microblog, why was I offered only the food words “chicken” and “pizza”? What about arroz caldo or cod cakes? The underrepresentation of groups that are already marginalized will continue, and at a faster pace, because writing algorithms accelerate the spread of cultural ‘norms’ while phasing out others (Santa Clara University, 2018). As O’Neil points out, algorithms that adapt to show individuals different political information have already threatened democracy (The Age of the Algorithm, n.d.). If algorithms were used in academia and education, we might not share the same knowledge base as people in other countries. Our histories and futures might never intersect, running parallel instead. Businesses might also stagnate, unable to generate new and innovative ideas.

McRaney, D. (n.d.). Machine Bias (rebroadcast). In You Are Not So Smart. Retrieved from https://soundcloud.com/youarenotsosmart/140-machine-bias-rebroadcast

Santa Clara University. (2018). Lessons from the AI Mirror: Shannon Vallor.

The Age of the Algorithm. (n.d.). In 99 Percent Invisible. Retrieved from https://99percentinvisible.org/episode/the-age-of-the-algorithm/