Okay, this was one of the most annoying things I’ve ever had to do online.

Starting at the beginning, there was a big green button that distracted you from the real purpose, which was to click under it to continue to the next page.

Then a red banner informed me about cookies. The highlighted “Yes” button, with its bigger text, clearly invited a click. However, when I clicked it, nothing happened. The banner only went away when I clicked “Not really, no”.

Needless to say, at this point, I was already a little irritated. To make matters worse, a pop-up appeared warning me that time was ticking and I needed to hurry up. The green “Lock” button was very inviting, but when I clicked it, it only changed to “Unlock”. The pop-up did not go away until I clicked the strange “Close 2024” text on the bottom left, which took me a while to recognize as a close button because that spot usually holds the copyright notice. Mind you, this pop-up returned frequently while I completed the game, causing me further anguish.

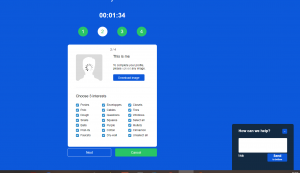

Here I was asked to upload a photo, and I clearly cannot read, because I clicked “Download image” instead, as it was the more obvious button. It was also hard to grasp that I had to select 3 items, because I first had to unselect all of them. Since every box was already selected, I was forced to read all of them, whereas normally my eyes would go straight to my top 3 interests and I would click them right away. At this point, the “How can we help” window on the right was starting to annoy me. I tried to close it by clicking the upper-right corner, which my brain is wired to do, and of course that did not work and only made the window longer. I had to click “Send to bottom” for it to disappear.

This page was the least irritating of all. The flags caught my attention. As I hovered my mouse over them, the flags became coloured. I did not pay attention to the actual contents and filled in false data, only to be caught red-handed when my age and birthday did not match. The gender selection was confusing, as the selected option was not highlighted.

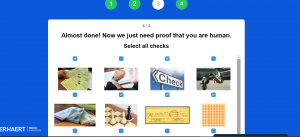

At this point I was about to flip my desk out of my window, only to be faced with the most annoying page yet! The page required me to verify that I am human. However, what it was asking for was not immediately obvious. When it asked for “light”, pictures of light bulbs, fire and feathers all showed up. My immediate thought was to select all the light bulbs, sunshine, and fire, only to find out that “light” also refers to the weight of objects, so feathers fit the description too. Due to this vagueness, I had to go through every item I needed to validate, including this page full of “checks”.

It may say I finished this game in 4:37, but that is a lie as I had to redo the game to take some screenshots. My first attempt took me well over 10 minutes to complete. I was not happy to see the man dancing at the end here…

Reflection

While completing this task, I was absolutely shocked at the level of psychological and physical manipulation I have grown accustomed to all these years through UX design and the internet. Though it was irritating, I realized while completing the game that I am very much programmed to behave a certain way. Exactly why did I click the top right corner to close the pop-up, even though it clearly did not have the little “x” at the top? This brings up the serious issue Dr. Zeynep Tufekci raised in her book and in her TED Talk. If UX design can manipulate my mind when I browse the web, then it is quite clear that companies can use algorithms to manipulate my thoughts. Her example of YouTube recommending more and more “hardcore” content when you watch a video on a specific topic is alarming (Tufekci, 2017). If our political ideology can be influenced the same way we can be influenced to buy a pair of shoes, imagine what else these algorithms can make us do.

Another alarming thought I had after finishing the game is that our attention really can be altered, as indicated by Tristan Harris (2017). It was clear to me as I went through the game that it wasn’t the content, but the way the UX was designed, that caught my attention. The word “No” inside a big green button suddenly made me want to click it. A “Hurry up!” pop-up with a timer counting down suddenly gave me anxiety and made me focus more so that I could finish the game faster. I reflected on the notifications I receive and the feeds on my Instagram, and I suddenly realized it was never about the content. It was all about me: what I might want, and how to get my attention. Now I wonder whether anything I ever saw or learnt through something that was pushed to me was the truth, or whether it was simply there to gain my attention, sell me ads, and sway me the way I might want to be swayed so that corporations can make more profit.

This task has been one of the most enlightening. As Dr. Tufekci (2017) shared, digital tools can really be both liberators and oppressors. It also appears dangerous that algorithms are now analyzing us at a level beyond our understanding. To mitigate these risks, a concerted effort is needed to promote transparency, accountability, and digital literacy, ensuring that the benefits of digital technologies can be realized without compromising individual autonomy and societal well-being. But I wonder how? How will we, the corporations, and policy-makers be able to do this while corporations still aim to always increase profit?

References

Harris, T. (2017). How a handful of tech companies control billions of minds every day [Video]. TED.

Tufekci, Z. (2017). We’re building a dystopia just to make people click on ads [Video]. TED.