Phiviet’s post is here.

Hi Phiviet,

The linking assignment implies that we should connect to tasks we have completed ourselves (to link, compare and critically reflect on them). However, after seeing your quick experiment with Craiyon AI for Task 11, I just had to respond, even though (like you) I completed the Detain/Release simulation for this task.

To experience this firsthand, I tried the same prompts (‘rich people’ and ‘poor people’) in DALL-E and got results similar to yours (images below). The rich people are all white and dressed similarly, except for one Black man who needed wads of cash in his hands to signal his ‘richness.’ The poor people are all South Asian women and children!

Despite everything we have read and heard about human bias being integrated into and amplified by AI, it still shocks me to see it so directly.

Yes, South Asia has a high level of poverty, especially compared to North America and parts of Europe. However, what stood out was the homogeneity of the images and what they implied about dress, culture, gender, age and race in this depiction of poverty.

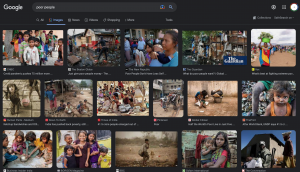

DALL-E learns the link between textual semantics and their visual representations by training on 650 million images and their text captions (Johnson, 2022). If, as I assume, these images come primarily from the uncurated Internet, then my results suggest that many online pictures captioned ‘poor’ show South Asian women and children. So I did a quick Google Image search for ‘poor people,’ and the results matched. Below is a screenshot.

The difference that stood out for me between the two sets of images was context. Because the Google images come from the real world, they include a backdrop that may provide more information relevant to the judgement of ‘poor.’ DALL-E, on the other hand, extracts only a few salient features (race, gender, clothing style) from its training data and then generates new images from these without the surrounding context, thus reinforcing existing stereotypes.

This also brings us back to Dr. Shannon Vallor’s idea that “the kind of AI we have today and the kind we’re going to keep seeing is always a reflection of human-generated data and design principles. Every AI is a mirror of society, although often with strange distortions and magnifications that can surprise and disturb us” (Santa Clara University, 2018, 11:51).

Thank you for prompting such an intriguing exploration of the app.

References:

Johnson, K. (2022, May 5). DALL-E 2 Creates Incredible Images—and Biased Ones You Don’t See. WIRED. https://www.wired.com/story/dall-e-2-ai-text-image-bias-social-media/

Santa Clara University. (2018, November 6). Lessons from the AI mirror: Shannon Vallor [Video]. YouTube.