For this week’s task, I explored the generative AI platform Craiyon. As outlined in some of this week’s module content, Craiyon, like other generative AI models, uses algorithms to create images from text prompts written by the user. As with all generative AI models, Craiyon’s algorithm needs to be trained on sets of data. In the case of Craiyon, I would imagine that the model was trained on associations between words and images.

As mentioned in this module’s content, a major issue with any generative AI platform is the data set that it was trained on. Bias is, therefore, an inherent part of any AI model, since the programmers essentially decide what information will be included and what will be excluded. This practice forces the programmers to place value on content and to serve as judges of information. This is a flawed practice and can lead to the perpetuation of certain limited perspectives, ideologies and ways of thinking. In some cases, and as explored in this week’s module, it can result in models that produce hateful and discriminatory responses.

My goal with this week’s task was to see if I could uncover some possible bias built into the Craiyon algorithm. In my prompts, I used the term “hyper-realistic”, as this is a tip I had seen before for getting AI to return realistic-looking images. My first prompts sought to determine whether the platform seemed to be biased towards a certain race. To test this, I wrote two different race-neutral prompts. The first prompt was: “hyper-realistic child playing baseball” (see image 1). The results were nine images, all of very clearly white children. This led me to think that perhaps the algorithm had learned to associate baseball with white children. Since baseball is more popular in some regions than others, I tested this further with a truly global sport: football (soccer). My prompt was: “hyper-realistic person playing football” (see image 2). This time the results were slightly more racially diverse but still predominantly white. What stood out from this prompt was the near-absence of women. To test this possible gender bias, my third prompt was: “hyper-realistic company boss sitting at desk” (see image 3). Again, the people in the results were overwhelmingly white, visibly older and almost entirely male.

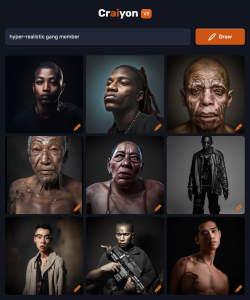

Based on these three prompts, I think it is clear that this particular generative AI platform has problems with representation. Although race could be specified with adjectives in the written prompt, the fact that it has to be points to a deeper issue embedded in the algorithm: white and male are the default outputs for generic prompts about people. To push this hypothesis further, I decided to see whether the platform also generated images that perpetuated stereotypes. I prompted the platform with: “hyper-realistic gang member” (see image 4). The generated images point to extreme racial stereotyping. Gang members, while largely negative forces in society, are present in every country and among every race. Yet, as the results show, this platform depicts gang members as exclusively Black and Asian.

These results cast serious doubt on the ability of this algorithm to represent the world accurately. Instead, it seems as though the algorithm draws upon long-standing inequalities and perpetuates stereotypes. While this is only one text-to-image generative AI model, it would be worth examining the extent to which other platforms are similar or different in this respect. My intuition tells me that most, if not all, text-to-image platforms have these issues.

Image 1:

Image 2:

Image 3:

Image 4: