OVERTURE

Being an English teacher, I jumped at the opportunity to write a narrative. Typically, I am the one teaching the narrative elements to my students, but I never truly have the opportunity to write creatively myself. I’ve also been an avid follower of Yuval Noah Harari’s writing throughout the years, and was thrilled to use his article Reboot for the AI Revolution as the basis of inspiration for my narrative.

Set in the distant future, my narrative is a speculative, dystopian warning about the authoritarian experiences AI could produce. It takes Harari’s idea of the “useless class” and magnifies what that might truly look like in a neo-Marxist type of future. In this speculative future, the rise of AI algorithms and automation has essentially eliminated the middle class, leaving only the ‘haves’ and the ‘have-nots’. While one group struggles to accumulate the basic necessities for survival, the other grapples with determining what living truly means: a new-age proletariat vs. bourgeoisie story, so to speak. You may notice the influence of a number of course elements thoughtfully integrated in the story.

There are also thematic reflections on the role of text, technology, and education throughout the piece. I was intentional in highlighting the shift in the fundamentals of education of the time, ironically contrasting the return to, and importance of, naturalistic and ‘primitive’ types of knowledge (i.e., foraging, hunting, farming) with the hyper-technologized world in which the story is set. I also attempted to make clear that the use of ‘high technology’ was dominated by the ‘high class’, and that the divide between the two was immense and immeasurable. At the center of the narrative is the idea that the human capacity to create algorithms in AI technology needs to continue to develop on an ethical level, but more importantly, to be utilized for the right reasons and the right people. I also went to some lengths to direct attention to the damage done to the environment due to the lack of action on climate change, an area where I truly think we need to turn our attention, especially when it comes to enabling technology to solve problems. Enjoy…

_________________________________________________________________________

PART 1

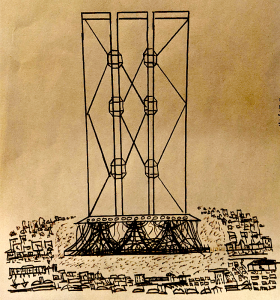

Centered within a ramshackle skyline, three tall towers rose above all else.

Their peaks brushed the ceiling of the sky while the base of the buildings dispersed into multiple purple and silver tubers solidly planted deep within the cold, hard ground. Most of The People referred to them as Roots. The Roots ascended to great heights, at least ten stories, Ava had heard people say. They reminded her of the large mangrove trees she’d learned about while watching a video using her friend’s stolen internet connection; trees that were long extinct now. “Imagine a coastline,” the video began. It was difficult for her to conjure up accurate images. Ava had never seen a coastline. “The mangroves straddled two worlds… not only do they adapt, they create a sanctuary for an extraordinary range of creatures.” Ava couldn’t help feeling that the haven these Roots purported to protect felt more like a prison, an immensely twisted metallic jail cell.

At a certain point, the Roots culminated in a gnarled wire tangle where a thick plateau of steel rested and served as the base for the countless stories above. Each tower seemed to disappear into the clouds and each was made of the highest quality SmartSpecs, TechnoSteel, and IntelliFibres. All three towers were fenced off with cable fencing, every corded wire measuring at least three feet in diameter and supercharged with 7000 volts of electricity. The sound of buzzing electricity was constant. A series of enormous metal spheres sat at regular intervals as Ava’s eyes climbed the towers, serving as the only common structure that kept them all connected.

Nobody had ever seen the Paragons come in or out of any of the towers, but everyone knew they were in there.

Ava Carlton had been walking home from Dr. Howard’s schoolhouse, close to the towers, when she had been jolted by the sight of a small pack of coyotes digging through heaps of garbage. With the surrounding region becoming so environmentally bare, the animals that once lived wild and free were forced into the city’s encampments to find food, and in some cases, people. Avian species were among the organisms left completely extinct, and, sardonically, small drones roved through the skies in their place, watching. The forests that once stood at the edge of the city had been cut down long ago and large metallic cylinders emerged from the ground, branching off high into the sky. Some said they were the same tubules that made up the Roots, connected in an underground maze meant to harvest various energy sources for the Paragons’ use. Some conspired to dig, but nobody had ever found anything.

The ocean ice had long melted and sea levels had risen year after year. Summers were extraordinarily hot, and those who didn’t die of hunger, thirst, disease, or rabid animals, or succumb to the hands of nomadic bandit tribes, died of heat exhaustion or dehydration. Salination levels fell to an all-time low, and if the chemical breakdown of plastic within ocean water didn’t kill the aquatic life, it was the fact that sea creatures simply couldn’t survive in water that diluted. There was talk every year that the ‘big storm’ was coming; a rain so intense and so long that it would flood the earth like in Biblical times.

The pandemics had wiped out roughly 60% of The People, and those who did survive found that the food supply would quickly run out. The Paragons, on the other hand, were largely unaffected, having been injected far in advance with innovative biotechnology they nicknamed BloodBots; nano-robots designed to fight off disease, discomfort, and all sorts of pain. How can you be human if you can’t feel any pain? Ava thought to herself.

The People did not have the means to afford this technology shortly after The Divide. They were relegated to “the old ways”, using the land in some capacity to survive. Planting crops in arid land, hunting and foraging in barren forests in hopes of some semblance of a decent harvest. An ironic full-circle approach to a world once filled with promise of technological opportunity for the underprivileged. Those who chose not to adhere to the old ways resorted to thievery, destructive violence, and generally reckless nihilism. Danger lurked in every corner. Ava picked up her pace and weaved her way through a series of narrow alleys until she bolted safely through the front door of a dilapidated apartment building, clicking closed the four padlocks her father had installed.

Devin sat nervously at the tiny wooden table in the kitchen. He had been reading the Daily Bulletin on his tablet, trying to make out the words through the fractured and splintered screen. Most books had been used in the early days to fuel fires. The textbooks went first. Devin put down his tablet and walked painfully over to embrace Ava, limping heavily with every step. He hadn’t eaten properly for months and had been nursing leg wounds sustained from hunting. He insisted that most of the food he was able to scavenge went to Ava, and there were no conversations to have about it. His hunting and foraging skills were a far cry from his civil engineering job years ago. He had been pushed out of the industry by the rise of AI-powered algorithms that could produce higher quality projects at a faster rate. This was a common occurrence for many when The Divide happened.

Their neighbour, Don, was a military man but was discharged after the ComBats took over as the main vehicle for wartime combat. Marianne lived across the hall within the commune. Once a doctor, she lost her job after CyberMed AI Systems replaced many of the medical professionals at hospitals, walk-ins, and private clinics. It was common to find ruined apartment buildings housing groups of useless people working together just to survive. Once Ava arrived home, both Don and Marianne made their way over to Devin’s unit, where Ava taught them what she had learned throughout the day. They’d been working on this for a year now, and there wasn’t much time left to complete it.

She knew that doing so put her in grave danger.

___________________________________________________________________________

PART 2

Dr. Howard sighed heavily as his legs walked him into his domicile. He stepped out of his exo-skeleton frame and dropped heavily into his anti-gravitational chair. All Paragons wore their OssoXO suits throughout the day as a way of fully embodying their fundamental belief that ‘technology was made to serve us’, a belief that Dr. Howard despised. I’m certainly capable of using my legs myself, he thought.

Because basic human movement had been handed over to their technological counterparts, the muscles of all Paragons had atrophied over the years. Despite the loss of muscular vigor, Paragons were in peak physical and intellectual health, primarily due to the infusion of nanotechnology within their bodies. BloodBots collaborated with blood cells, helping to fight off novel diseases and block pain receptors from reacting in the brain. CereSynap chips were implanted in their brains, allowing new information to be uploaded to their memory as if one were uploading a photo to a device. Some Paragons injected DNChain technology into their bodies, allowing them to modify their DNA in various ways, while others replaced limbs with robotic prosthetics. All Paragons believed that this was the next step in the evolutionary ladder: human and technological integration.

A faint buzzing sound had begun emanating nearby. A drone-like object, no bigger than a human hand, had appeared quickly and flooded Dr. Howard’s face, torso, and legs with yellow beams of light. EARL, an acronym for Electronic Algorithm and Response Lexicon, was a standard issue companion drone meant to monitor and serve each Paragon user.

“Good evening, Doctor.” The drone spoke as if it were human. AI voice had come a long way since its inception. “Our research algorithms suggest there are multiple disease variants on the horizon. It is advised that you upgrade your bio-protection system with the following nano-bots.”

The drone produced a tiny vial filled with a clear liquid and a small syringe. What fun it is to be human when you don’t feel any pain, thought Dr. Howard sarcastically as he jabbed himself with a needle and pressed hard into his tough skin.

The drone spoke again – “Secondarily, there are indications that the global deluge is on pace to arrive at our current location no later than Friday next. All systems in the Towers are operational and our data suggests we should have no problem withstanding the projected damage.” There was a slight pause. “My algorithms are indicating a higher than normal sense of stress, Doctor. Your blood pressure is high, and your brain waves indicate that you are on high alert. Is there something the matter?”

“Funny, EARL. I didn’t notice,” Dr. Howard said dismissively. But he did notice. Over the past year, Dr. Howard had set up a small inconspicuous schoolhouse in what most Paragons called the Filth, the area surrounding the three spires. It was causing him deep anxiety. None of the other Paragons knew of Dr. Howard’s endeavours, for it went against their beliefs, and any Paragon who violated their hallowed customs paid a lethal price.

“You cannot lie to me, Doctor. My biotech algorithms are flawless,” chided the machine.

“EARL, do you know why they called it The Divide?” Dr. Howard turned to face the floating drone.

“My global database suggests that in the year…” EARL began sputtering out information.

“I figured. You cannot know. You can only regurgitate the data that’s been provided for you. You claim that your algorithms are flawless. How do you then account for the population of people living down there!” Dr. Howard pointed out the SmartSpec glass window, which automatically untinted itself to provide a clearer picture for the viewer.

“No algorithm is flawless,” muttered Dr. Howard under his breath.

Most people weren’t aware why everyone referred to that time in history as The Divide. It was assumed that it simply stipulated an alarming divide between two major sects of society: the Paragons and the People. Although true, this was not what The Divide was meant to relay. In the late second millennium, Dr. Howard had developed a new algorithmic technology designed to assess the future potential of any individual on earth. It factored in a multitude of characteristics such as DNA genetics, Intelligence Quotient, previous and potential life experience, and geographic location among hundreds of other facets. The technology showed promise in identifying individuals who could be the next Einstein, Mozart, or Shakespeare. It could be used to ‘harvest’ these individuals and allow them to make meaningful and lasting change for the entirety of planet earth; to put the right people in the right positions. This is not how the story went.

Greed took over. The technology fell into the wrong governmental hands, and rather than use the algorithm to determine the people who could meaningfully impact the world, the Paragons were formed: an identified sect of society inherently more valuable than the other half, according to the AI. The Paragons formed their own society, with their own beliefs, customs and rules, harnessing the AI technology to perennially solidify their seat in the social hierarchy. Resources, food, energy, protection from the elements, animals, and disaster all went to the Paragons. The Divide did not only create two societal factions, it quite literally algorithmically divided the worthy and the unworthy, the living and the living dead.

In a personal act of penance for his grave misdealings, Dr. Howard had taken it upon himself to secretly rework the algorithm and use it to identify those in the lesser population who had the potential to comprehend Paragon knowledge and the skills necessary to construct and distribute technology to The People. He had been privately tutoring a small group of children and young adults, providing them with the knowledge they would need to introduce various life-saving technologies to the people below. Ava was one of his brightest. He hoped that after a year’s work, she would be able to produce something relevant before next Friday. Before the deluge destroyed every last one of The People.

A knock came at the door of Dr. Howard’s unit. A tall, black-haired woman slowly paced into his room.

“Dr. Yael, to what do I owe this pleasure!” Dr. Howard said with delight.

Yael was the chief medical engineer within the Towers. She had the important job of programming, engineering, and managing the manufacturing of all medically related AI technology within the Towers. With AI taking over the medical industry, the only vocations left belonged to those who created the machines. There was a grim expression on her face. She held a flat metallic remote in her hand. Dr. Howard knew what was about to happen.

“Doctor, I’m sorry. There has been speculation about your whereabouts recently. It’s given rise to an internal investigation. We know you’ve been associating with… them.” Dr. Yael moved closer to Dr. Howard, as if to ensure he couldn’t flee.

“Is that so,” murmured Dr. Howard.

“I’m sorry Doctor, but I know you are aware of the protocols. We must ensure what we’ve established here lives on here forever. We can’t afford to change our…”

“Algorithms,” Dr. Howard finished her sentence.

Dr. Yael bowed her head and took on a somber tone. “We owe a lot to you, Doctor. I’m sorry.”

With that, she thrust the remote into Dr. Howard’s exposed neck. A quick flash. An electric buzz. A jolt of a body. Dr. Howard remained conscious, but wore a puzzled face. His eyes went from their vivid emerald green to a silken black. His facial muscles relaxed. You could watch his memories being drained from his brain, as if a computer hard drive had been wiped of its storage files.

—————–

Anytime new members were brought into the Paragons, typically through procreation, a new EARL drone was manufactured for them. On rare occasions, Dr. Howard’s old algorithm was used on The People to determine if there were any worthy of Paragon life. In these cases, previously used EARL drones were given new assignments.

“EARL Series 3 Model xx91C – You’ve been assigned to a new Paragon,” a Paragon engineer directed the drone. “Please report to domicile 932. You’ve been allocated to Dr. Carlton.”

Dunne, A., & Raby, F. (2013). A methodological playground: Fictional worlds and thought experiments. In Speculative Everything: Design, Fiction, and Social Dreaming. Cambridge: The MIT Press. Retrieved March 18th, 2021, from Project MUSE database.

Harari, Y. N. (2017). Reboot for the AI revolution. Nature International Weekly Journal of Science, 550(7676), 324-327. Retrieved from https://www.nature.com/news/polopoly_fs/1.22826!/menu/main/topColumns/topLeftColumn/pdf/550324a.pdf

Price, L. (2019). Books won’t die. The Paris Review. Retrieved from https://www.theparisreview.org/blog/2019/09/17/books-wont-die/