Abstract

Massive open online courses (MOOCs) can enroll very large numbers of students. They are open-access, so learners are free to participate or not, and they have no physical classroom. As a result, they offer a distinctive learning experience that is independent of time and space (İnan & Ebner, 2020). MOOC learners are heterogeneous and have varying learning motivations, and this affects whether or not they complete a course. Identifying the characteristics that matter to learners makes it possible to prioritize and improve those aspects of the MOOC learning experience (Nanda et al., 2021).

Two open-ended questions from the Exit Survey of the edX-based UBC MOOC on Climate Change were analyzed. A frequency analysis was conducted with the text-analysis tool AntConc and visualized as word clouds. It showed that learners found the video content most helpful. In contrast, the assignments, inflexible timing and deadlines, and unguided peer evaluation negatively affected their learning experience.

Problem Statement and Literature Review

Nanda et al. (2021) studied open-ended feedback from MOOC learners. They argue that because the learners who enroll in MOOCs are so heterogeneous, it is important to use qualitative post-course surveys to identify the characteristics that matter most to different learners and to prioritize them accordingly. In their study, they applied latent Dirichlet allocation (LDA) topic modeling to the responses of 150,000 MOOC learners from 810 MOOCs across different subject areas to the following three open-ended questions:

Q1) What was your most favorite part of the course and why?

Q2) What was your least favorite part of the course and why?

Q3) How could the course be improved?

From their qualitative analysis, the researchers identified the characteristics that affected learners’ experience and their completion of MOOCs:

- quality of course content
- accurate description of prerequisites and required time commitment in the course syllabus
- quality of assessment and feedback
- meaningful interaction with peers and instructors
- engaging instructors and videos
- accessibility of learning materials
- usability of the platforms

Building on these studies, I aim to conduct a similar review of the edX-based UBC MOOC on climate change. I also aim to provide some data visualization and to generate discussion on two points: how data collection could be developed further, and how the course could be improved for future iterations.

Methods and Tools

Within the scope of this project, I focus on an exploratory qualitative analysis of the Exit Survey. This serves as a starting point for further investigation of learner data on student demographics, engagement with course content, and engagement with peers within the course. The goal is to extract useful information and gain some insight into which characteristics and aspects of the course contribute to learners’ motivation to complete or drop out of the course.

First, I familiarized myself with the quantitative and qualitative data from the MOOC. I then operationally defined the themes of student behavior and data that I wanted to investigate, namely student motivation, student familiarity (with the subject matter), student identity/demographics, student engagement (broken down into four categories) and qualitative feedback.

Table of Themes

| Theme | Data source | Survey questions / variables |
| --- | --- | --- |
| Motivation | Climate Entry Survey | Q2.1 What are the main reasons for taking the course?<br>Q3.1 How many weeks do you plan to engage in the course?<br>Q3.2 How many hours per week on average do you plan to spend on this course?<br>Q3.3 How frequently do you plan to use course components?<br>Q4.2 How many MOOCs have you participated at least partially?<br>Q4.3 Think about the MOOC you were most engaged in, what best describes the level of engagement? |
| | Climate Exit Survey | Q2.1 Were your goals for taking the course met?<br>Q2.2 Are you likely to…<br>Q3.1 How frequently did you use each of the course components?<br>Q3.3 How many hours per week did you spend in this course?<br>Q3.4 What interactions did you have with course peers?<br>Q4.1 Which of the following components of the course were you very satisfied with?<br>Q4.2 How did you find the following modules? |
| Familiarity (with subject matter) | Climate Entry Survey | Q1.1–Q1.3 Climate Knowledge<br>Q4.1 Prior to this course, how familiar were you with the subject matter? |
| | Climate Exit Survey | Q1.1–Q1.3 Climate Knowledge |
| Student Identity/Demographics | Climate Entry Survey | Q5.1 Which country were you born in?<br>Q5.2 Which country do you currently reside in?<br>Q5.3 Main languages you speak<br>Q5.4 English proficiency |
| Student Engagement | Person_course_day_cleaned.tsv | General (time spent): Avg_dt, Sdv_dt, Sum_dt<br>Video engagement: Nevents, Nplayvideo, Ntranscript, Nvideos_viewed, Nvideos_watched_sec<br>Forum engagement: Nforum_reads, Nforum_posts, Nforum_threads, Nforum_endorsed, Nforum_comments<br>Problems: Nproblems_attempted, Nproblems_answered |
| Qualitative Feedback | Climate Exit Survey | Q2.3 What did you like most about the course?<br>Q2.4 What did you like least about the course? |

Qualitative Feedback Analysis

I used the text-analysis software AntConc for frequency analysis and used its word cloud function for visualization. A word cloud shows the 50 most frequent words in the text at a glance.
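AntConc produces its word list and word cloud through a graphical interface. The Python sketch below is a rough illustration of the underlying frequency count, not AntConc’s own implementation. It tallies word tokens across all responses and returns the N most frequent, with N = 50 matching this project. The function name `top_words`, the tokenizing regular expression and the sample responses are hypothetical.

```python
import re
from collections import Counter


def top_words(responses, n=50):
    """Return the n most frequent word tokens across all responses."""
    counts = Counter()
    for text in responses:
        # Lowercase and keep runs of letters/apostrophes as words
        # (a simplifying assumption about what counts as a word).
        tokens = re.findall(r"[a-z']+", text.lower())
        counts.update(tokens)
    # most_common breaks ties by first appearance
    return counts.most_common(n)


# Hypothetical sample responses, not real survey data.
sample = [
    "The videos were clear and well made.",
    "Good videos, easy to understand.",
    "I liked the videos.",
]
print(top_words(sample, n=3))
```

This sketch does not remove stop words, so a function word such as “the” ranks near the top. That is why the comments need to be cleaned before counting.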

There were 200 responses to the “What did you like most about the course?” question and 216 responses to the “What did you like least about the course?” question. Because the scope and sample size were small, I prepared the text for frequency analysis manually. I converted all comments to lowercase, removed special Unicode characters and punctuation, and removed common stop words. I then applied stemming and lemmatization.
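The comments here were cleaned by hand, but the same steps could be scripted if the dataset grew. The following is a minimal, dependency-free Python sketch under stated assumptions. The short stop-word list and the suffix-stripping `crude_stem` function are hypothetical stand-ins. A real pipeline would normally use a complete stop-word list and an established stemmer or lemmatizer, such as those in NLTK or spaCy.

```python
import re
import unicodedata

# Hypothetical, deliberately tiny stop-word list for illustration only.
STOP_WORDS = {"the", "a", "an", "and", "or", "to", "of", "i", "it",
              "was", "were", "is", "in", "for", "on", "with"}

# Crude suffix rules standing in for a real stemmer/lemmatizer (assumption).
SUFFIXES = ("ing", "ed", "s")


def normalize(text):
    """Lowercase, strip non-ASCII characters, replace punctuation/digits with spaces."""
    # NFKD splits accented letters into base letter + accent, so "é" becomes "e".
    text = unicodedata.normalize("NFKD", text.lower())
    text = text.encode("ascii", "ignore").decode("ascii")
    return re.sub(r"[^a-z\s]", " ", text)


def crude_stem(word):
    """Strip the first matching suffix if a stem of at least 3 letters remains."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text):
    """Normalize, drop stop words, then stem each remaining token."""
    tokens = normalize(text).split()
    return [crude_stem(t) for t in tokens if t not in STOP_WORDS]


print(preprocess("The Assignments were DIFFICULT – and the deadlines… too short!"))
# ['assignment', 'difficult', 'deadline', 'too', 'short']
```

Unlike true lemmatization, suffix stripping can produce non-words (for example, “class” becomes “clas”). Its output should therefore be spot-checked.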

Findings

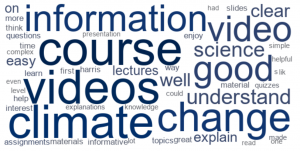

For the question “What did you like most about the course?”, the following words stood out.

Sixty-one students noted that the “videos” helped them understand the course content. Other high-frequency words in the word cloud carry positive sentiment, such as “good”, “clear”, “understand” and “well”.
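A figure such as the 61 students above should state what it counts. It can count the responses that mention a word, or it can count total occurrences of the word. These differ because a single response can use “video” several times, and a word-frequency list typically reports occurrences. The hypothetical Python helper below assumes responses have already been preprocessed into token lists and returns both numbers.

```python
def term_counts(tokenized_responses, term):
    """Return (total occurrences, number of responses mentioning term)."""
    # Every occurrence counts toward the token total.
    token_count = sum(tokens.count(term) for tokens in tokenized_responses)
    # Each response counts at most once toward the response total.
    response_count = sum(1 for tokens in tokenized_responses if term in tokens)
    return token_count, response_count


# Hypothetical preprocessed responses, not real survey data.
responses = [
    ["video", "clear", "video", "short"],
    ["video", "help", "understand"],
    ["assignment", "interest"],
]
print(term_counts(responses, "video"))  # (3, 2)
```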

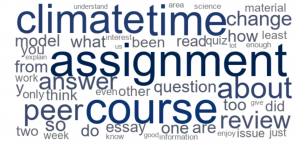

In contrast, for the question “What did you like least about the course?”, the following words stood out in the word cloud.

Of these, the words “assignment”, “time” and “peer review” are worth highlighting. When I reread the comments in detail, I found that these terms were related. Many students reported struggling with the assignments because of deadlines and personal time constraints. The peer review component required to complete each assignment added to this difficulty.
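The link between “assignment”, “time” and “peer review” was found by rereading the comments. One simple way to quantify such links is to count how many responses mention each pair of terms together. The Python sketch below does this. It assumes preprocessed token lists in which the phrase “peer review” has been merged into a single hypothetical token, `peer_review`. The sample responses are invented for illustration.

```python
from itertools import combinations


def cooccurrence(tokenized_responses, terms):
    """Count the responses in which each pair of the given terms appears together."""
    pair_counts = {pair: 0 for pair in combinations(sorted(set(terms)), 2)}
    for tokens in tokenized_responses:
        # Terms of interest present in this response (each counted once).
        present = set(tokens) & set(terms)
        for pair in combinations(sorted(present), 2):
            pair_counts[pair] += 1
    return pair_counts


# Hypothetical preprocessed responses, not real survey data.
responses = [
    ["assignment", "time", "deadline"],
    ["peer_review", "rubric", "assignment"],
    ["assignment", "time", "peer_review"],
    ["video", "clear"],
]
print(cooccurrence(responses, ["assignment", "time", "peer_review"]))
```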

Among the comments on assignments, four students said they came into the course with little background knowledge of climate change and felt overwhelmed by the assignments. One found that “having to write essays on climate science before establishing a knowledge base was more than a little daunting.” Others found the assignments demanding given the deadlines, and said they would prefer “shorter or optional assignments” to the current structure.

Lastly, 18 students disliked the assignments’ peer review component, for various reasons. Some stated that “the rubrics for peer reviews were limiting and not quite matching the assignment” and that there was no guidance on how to give constructive peer feedback. Because the effort put into peer evaluation varied widely, many agreed that perhaps “it is necessary for staff review”.

Discussion and Future Development

One main limitation of this exploratory qualitative analysis is timing. It could only be conducted after the course had ended and the surveys had been completed, not in real time while the course was in progress.

These findings align with the three main factors influencing MOOC dropout identified by Eriksson et al. (2017):

- a mismatch between learners’ perceptions and the actual course content and design
- learners’ ability to manage their time
- the social aspect of feeling part of a learner community

Taking the findings of the frequency analysis as a starting point, I discuss below further investigations of the data that could help improve and develop the MOOC for future iterations. The discussion is organized by themes from the survey comments.

Course Component, Learning Materials and Resources

Beyond the positive sentiment toward videos, it would be worth looking further into how course components, learning materials and resources were used in general. The self-reported use of course components in the Entry and Exit Surveys could be compared with the logged data on actual use. This comparison would show the gap between the resources students expect to use and those they actually use. It would also show whether certain materials need further development for future iterations of the MOOC.
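This comparison requires linking each learner’s survey answers to their logged activity through a shared learner identifier. The sketch assumes such an identifier is available, although survey exports may be anonymized. The Python sketch below first sums `Nvideos_viewed` over the learner-day rows of a table such as Person_course_day_cleaned.tsv. It then averages these totals within each self-reported usage category, for example from Exit Survey Q3.1. The category labels, the row layout and the function name are hypothetical.

```python
from collections import defaultdict


def summarize_by_self_report(self_reports, daily_rows):
    """Mean total logged video views for each self-reported usage category.

    self_reports: {user_id: category}, e.g. coded from Exit Survey Q3.1
                  (hypothetical coding).
    daily_rows:   iterable of (user_id, nvideos_viewed) pairs, one per
                  learner-day (assumed row layout).
    """
    totals = defaultdict(int)
    for user_id, n_viewed in daily_rows:
        totals[user_id] += n_viewed  # sum daily counts per learner

    by_category = defaultdict(list)
    for user_id, category in self_reports.items():
        # Survey respondents with no logged rows count as zero views.
        by_category[category].append(totals.get(user_id, 0))

    return {cat: sum(vals) / len(vals) for cat, vals in by_category.items()}


# Hypothetical data, not real course records.
reports = {"u1": "often", "u2": "often", "u3": "rarely"}
rows = [("u1", 4), ("u1", 6), ("u2", 2), ("u3", 0), ("u4", 5)]
print(summarize_by_self_report(reports, rows))  # {'often': 6.0, 'rarely': 0.0}
```

Clearly separated averages would suggest that self-reports track actual use. Similar averages across categories might point to a gap between perceived and actual use.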

Peer Interactions and Peer Evaluations

Following up on the negative aspects of the course, it would also be useful to compare self-reported interactions with peers (Exit Survey Q3.4) against the logged data on peer interactions. Applying Social Network Analysis to peer interactions, for example with NodeXL, could provide more insight into the socio-constructivist potential of MOOCs. Building rapport with peers might also address some of the negative comments on peer review. Clearer rubrics and guidelines for giving peer feedback would help as well, as “peer assessment techniques and exploiting peer support can revolutionize emergence of new pedagogical models in the MOOC approaches” (Yuan, Powell & Cetis, 2013).
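NodeXL is operated through an Excel interface. Purely to illustrate one basic Social Network Analysis measure, the Python sketch below computes normalized degree centrality: a learner’s number of distinct interaction partners divided by n - 1, where n is the number of learners in the graph. It treats each interaction, such as a forum reply from one learner to another’s post, as an undirected tie. The input format and names are hypothetical, and the sketch is not NodeXL’s implementation.

```python
from collections import defaultdict


def degree_centrality(interactions):
    """Normalized degree centrality for an undirected peer-interaction graph.

    interactions: iterable of (learner_a, learner_b) pairs, e.g. a reply
    from a to a post by b (hypothetical input). Self-replies are ignored.
    """
    neighbours = defaultdict(set)
    for a, b in interactions:
        if a == b:
            continue  # a learner replying to themselves is not a peer tie
        neighbours[a].add(b)
        neighbours[b].add(a)

    n = len(neighbours)
    if n < 2:
        return {node: 0.0 for node in neighbours}
    # Distinct partners divided by the maximum possible number (n - 1).
    return {node: len(adj) / (n - 1) for node, adj in neighbours.items()}


# Hypothetical reply pairs, not real forum data.
pairs = [("ana", "ben"), ("carl", "ben"), ("dee", "ben"), ("ana", "carl")]
print(degree_centrality(pairs))
```

Learners with centrality near zero interacted with few peers. This could be compared against their self-reported interactions in Exit Survey Q3.4.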

Prior Experience with MOOCs and Time Management

Students reported a lack of flexibility around deadlines, along with their own time-management challenges and constraints. For this reason, I believe Time Management should be added as a theme to the Table of Themes. Some questions that would help operationally define this theme already appear among the Entry Survey’s Motivation questions, such as Q3.1 and Q3.2 on planned weeks and hours per week. Additional questions, such as whether students work full-time or part-time, could serve as a proxy for how much time they realistically have.
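One possible time-management indicator is the gap between the hours per week a learner planned to spend (Entry Survey Q3.2) and the time actually logged. The Python sketch below assumes that `Sum_dt` in Person_course_day_cleaned.tsv is each learner’s total active time for a day, in seconds; this should be checked against the dataset’s documentation. It also assumes the course length in weeks is known. The function names and inputs are hypothetical.

```python
from collections import defaultdict


def observed_hours_per_week(daily_rows, n_weeks):
    """Average logged hours per week for each learner.

    daily_rows: iterable of (user_id, sum_dt) pairs, one per learner-day;
                sum_dt is assumed to be in seconds.
    n_weeks:    course length in weeks (hypothetical parameter).
    """
    seconds = defaultdict(float)
    for user_id, sum_dt in daily_rows:
        seconds[user_id] += sum_dt
    return {uid: total / 3600 / n_weeks for uid, total in seconds.items()}


def planning_gap(planned_hours, daily_rows, n_weeks):
    """Planned minus observed weekly hours; positive means less time than planned."""
    observed = observed_hours_per_week(daily_rows, n_weeks)
    return {uid: planned - observed.get(uid, 0.0)
            for uid, planned in planned_hours.items()}


# Hypothetical data, not real course records.
planned = {"u1": 3, "u2": 2}
rows = [("u1", 3600), ("u1", 7200), ("u2", 14400)]
print(planning_gap(planned, rows, n_weeks=2))  # {'u1': 1.5, 'u2': 0.0}
```

Averaging over the full course length counts inactive weeks as zero. This suits a question about planned versus realized commitment. Averaging only over active weeks would instead describe intensity of use.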

However, some of these questions also overlap with another potential theme, Familiarity (with MOOCs). This theme concerns whether students have previous experience learning in MOOCs, as captured by Entry Survey Q4.2 and Q4.3. The assumption is that learners with previous experience have a better sense of how to allocate their time and use other metacognitive and executive-functioning skills. Adding survey questions that target these themes would provide more data points and a more well-rounded picture of students’ motivations and capabilities.

Personal Reflection

One big challenge I faced was familiarizing myself with the massive quantity of data generated by the MOOC. I also had to figure out how to operationalize that data to target what I wanted to investigate.

Another major challenge was the learning curve of the different learning analytics tools available. For Tableau, I had to work through its tutorial videos to understand how to combine the data sources and create the visualizations I wanted. AntConc itself was more straightforward because it has fewer functions and settings. However, formatting and preparing the comments for analysis was new to me. The dataset was small enough to prepare manually, but a Python script would be better suited to the task if the scope increased. In retrospect, NodeXL might have been a more robust tool for both text analysis and social network analysis in this project. Ideally, I would have liked more time to understand the data and the functions of each application. With that time, I could have created more substantial visualizations that give more insight into students’ social interactions in the course, especially the socio-constructivist potential of crowdsourcing peer review and evaluation in MOOCs.

References and Literature

Eriksson, T., Adawi, T., & Stöhr, C. (2017). “Time is the bottleneck”: A qualitative study exploring why learners drop out of MOOCs. Journal of Computing in Higher Education, 29(1), 133–146. https://doi.org/10.1007/s12528-016-9127-8

İnan, E., & Ebner, M. (2020). Learning analytics and MOOCs. In P. Zaphiris & A. Ioannou (Eds.), Learning and collaboration technologies. Designing, developing and deploying learning experiences (HCII 2020, Lecture Notes in Computer Science, Vol. 12205). Springer. https://doi.org/10.1007/978-3-030-50513-4_18

Khalil, M., & Ebner, M. (2017). Driving student motivation in MOOCs through a conceptual activity-motivation framework. Zeitschrift für Hochschulentwicklung, 12(1), 101–122. https://doi.org/10.3217/zfhe-12-01/06

Nanda, G., Douglas, K. A., Waller, D. R., Merzdorf, H. E., & Goldwasser, D. (2021). Analyzing large collections of open-ended feedback from MOOC learners using LDA topic modeling and qualitative analysis. IEEE Transactions on Learning Technologies, 14(2), 146–160. https://doi.org/10.1109/TLT.2021.3064798

Nawrot, I., & Doucet, A. (2014). Building engagement for MOOC students: Introducing support for time management on online learning platforms. In Proceedings of the 23rd International Conference on World Wide Web (pp. 1077–1082). ACM. https://doi.org/10.1145/2567948.2580054

Zhu, M., Sari, A. R., & Lee, M. M. (2022). Trends and issues in MOOC learning analytics empirical research: A systematic literature review (2011–2021). Education and Information Technologies, 27, 10135–10160. https://doi.org/10.1007/s10639-022-11031-6