Creation of Open Education Resource Textbook with Interactive H5P elements for FREN1205 – French Conversation course in the Modern Languages Department at Langara College

Introduction

For the case of technological displacement, we were curious to explore the tendency and shift from physical textbooks to digital Open Education Resources (OERs) in higher education institutions. We were specifically interested in the tensions and opportunities that arose from the transition to online teaching and learning after the pandemic, especially with the normalization of online and hybrid e-learning.

We ground this inquiry into technological displacement in a case study of the creation of an OER textbook with interactive H5P elements for a French conversation course at Langara College. In this assignment, we analyze the usability of OERs from the instructor and student perspectives, and explore concerns about artificial intelligence and issues surrounding digital labor in the process of creating OERs in higher education institutions.

Motivation and Background

The FREN1205 – French Conversation course at Langara College is offered in-person with the utilization of a digital OER textbook Le Français Interactif created by the instructor Mirabelle Tinio. To support our work, we had the opportunity to speak with the instructor to learn more about the case study. All case study context provided in this assignment came from this conversation. Below are some of the motivators for the creation of the OER textbook from both the students’ and instructor’s perspectives.

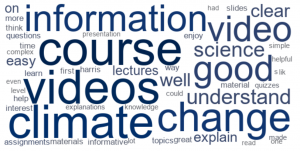

From the student perspective, the education landscape was drastically transformed by the emergency transition to online teaching and learning at the beginning of the pandemic in 2020. The effects were still apparent as in-person teaching and learning gradually resumed in 2021: student surveys reflected that having additional supportive resources available online helped with their learning process and overall experience of taking online courses. In addition, students reported that physical textbooks were expensive and inaccessible, especially those that were ‘single-use’ for an individual course, and that they were less inclined to make such purchases.

From the instructor’s perspective, many factors contributed to the transition from physical textbooks to a digital OER. The instructor we interviewed had been teaching the French conversation course for at least the past 12 years. Though the original textbook she was using provided activities and exercises for everyday conversation scenarios, she found that the content was not up to date or culturally relevant enough for the students in the classroom. She therefore found herself turning to other available language learning resources to patch together a curriculum plan that included vocabulary, grammar structure, and socio-cultural activities. The process was rather time-consuming, and she was never really satisfied with the existing resources.

With both the students and the instructor identifying that the existing resources were not meeting their needs, it became clear that a new resource should be introduced for this course. Here, we can use the concept of technological utility to demonstrate, in part, why a tipping point occurred. Utility asks whether the technology fulfills the users’ needs, that is, whether it does what the users need it to do (Issa & Isaias, 2015, p. 4). Physical textbooks were not meeting the learners’ and instructor’s utility needs; therefore, a new technology needed to be introduced.

At the same time, while working partly in the Educational Technology Department, the instructor observed that many other instructors were utilizing Pressbooks and other OER platforms to bring resources into Brightspace, a learning management system. The existing integration with the learning management system and the potential for further adaptation were additional motivators for developing her own textbook as an OER for the class.

The Tipping Point

The opportunity and tipping point presented itself in 2021, when the BCcampus Open Education Foundation Grant for Institutions opened applications for project proposals specifically utilizing H5P for Pressbooks. The grant was intended for British Columbia post-secondary institutions wishing to explore, initiate, or relaunch open educational practices, resources, support, and training on their campuses. Through this grant, the instructor was able to secure additional funding and support for creating the French Conversation OER textbook.

Benefits

Multi-modality, Interactivity and Flexibility

Learning languages is an inherently multimodal activity that incorporates a combination of multi-sensory and communicative modes (Dressman, 2019). The utilization of online OERs makes it possible to include multimedia and interactive H5P elements so that students can actively engage with the learning content. It also allows for more diversity in learning methods and increases the accessibility of course content.

Though the OER textbook included many different chapters and topics, each unit contained a similar format: the learning objectives, pre-test questionnaire, vocabulary, practice exercises, oral comprehension exercises, a post-test evaluation questionnaire, and self-reflection. This repeated format increases the OER’s usability because it is quickly learnable and memorable (Issa & Isaias, 2015, p. 33). The OER therefore creates a smoother user experience with less friction or frustration to navigate to the content than the physical textbooks, demonstrating again why this tipping point occurred (Issa & Isaias, 2015, p. 30).

The goal was to make the learning content accessible to both students and instructors with maximum flexibility and adaptability. Students can preview the units and prepare ahead of class, or review the units and practice areas needing further improvement, all at their own pace and with self-assessments available. Instructors can supplement the course delivery with additional resources, in-class activities, or outing experiences, and can utilize the textbook in a non-linear manner tailored to the needs and pace of the students in the classroom.

Living Texts

The content in the OER included resources that the instructor created and also showcased content that previous students created. It can therefore be seen as a co-created ‘living text’ (Phillips & Willis, 2014) that serves both as a pedagogical tool and as a co-creation of knowledge within the classroom.

For example, in the activity “Interview a Francophone”, the instructor uploaded recorded interview videos of previous students’ work, both as an exemplar of what the assignment would look like when current students approached the activity themselves, and as an exercise for current students to practice their listening comprehension and understanding of French conversation in context. The instructor noted that this was also intended to make students feel appreciated for their active contribution to the course, and to recognize them as part of the co-construction of literacy knowledge through this kind of interaction (Phillips & Willis, 2014).

Creating an OER that operates as a living text supports increased usability because it allows for feedback to be implemented when offered by the learners (the users). A living text can push back against the challenge of “configuring the user”, where the designers imagine the “right way” for a user to engage with their technology instead of being open to how the users actually will engage with the technology (Woolgar, 1990). This OER as a living text can be adapted to user feedback and therefore there is not only one “right way” to use the resource. Instead, the OER can increase usability for a wider variety of users as instructors adapt it based on learner feedback. The instructor noted that keeping an OER like this up-to-date is very important. This is especially true if the OER is described by an instructor to learners as a living text that is responsive to their needs.

Equity, Diversity and Inclusion

As mentioned above, the multi-modality, interactivity, and flexibility of the living text contribute to a classroom climate that reflects equity, diversity, and inclusion of the students currently taking the course. This approach takes into consideration the positionality, lived experiences, interests, and abilities of students within the classroom, as well as their agency as active participants in their own learning.

For example, in the aforementioned “Interview a Francophone” activity, the crowd-sourced, collaborative collection of different interviewees allows students to see different kinds of ‘francophone-ness’ beyond the mainstream Eurocentric depiction of French-speaking people, particularly in light of the deep-rooted history of the French language as a tool of colonization.

By embracing inclusive pedagogical approaches and recognizing students’ diverse contributions, this approach to creating OER textbooks creates a supportive and accessible learning environment, fosters a sense of belonging, and affirms the value of students’ unique contributions to the learning process.

Challenges

Current Concerns: Teamwork Makes the Dream Work

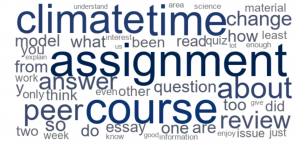

One major challenge that the instructor encountered during the creation of this OER textbook was the lack of support at the institutional level, especially since new technological adaptations require more incentives and supporting resources to encourage their incorporation and utilization within the college and, furthermore, across institutions. Though the instructor did collaborate with other language instructors from the Modern Languages Department and advisors from the Educational Technology Department, it is strongly suggested that a community of practice be created across institutions to support this work’s sustainability. The production of a brand new OER like this (as well as its ongoing maintenance) involves significantly more time and energy than maintaining the status quo of using physical textbooks. There is a risk that the instructor’s digital labor in producing this kind of resource might go unrecognized by the institution if it remains unseen.

From a practical and logistical standpoint, a cross-institutional community of practice would help ensure that the articulation of courses is leveled and aligned across institutions, especially where the transferability of courses and credits is concerned for institutions with pathway programs, such as Langara College. From a more aspirational standpoint, it would promote collaboration and a commitment to sharing knowledge and resources, encourage accountability, peer review, and the continuous development of teaching and learning practices, and enable the community to build on each other’s work, fostering a culture of openness and collaboration in education.

Future Concerns: The Rise of Artificial Intelligence and Impact of Digital Labor

Though the BCcampus grant did provide funding for the instructor to develop the OER textbook, more support is needed to compensate the unseen work that is added to the existing duties of a teaching faculty member. With the increased digitization of instruction within higher education comes an expectation of an accelerated pace of work (Woodcock, 2018, p. 135). There can be an expectation, even if implicit, within institutions that work becomes “easier” as a result of digital resources like this OER textbook. This can result in expanding work and time pressures for instructors who have digitized aspects of their work.

Another risk for instructors is the value that is placed on published work to push an academic career forward (Woodcock, 2018, p. 136). The motivation to pursue the creation of open access work can be reduced if the institution the academic works within rewards published work. While an OER like the one described in this case is a different kind of open access work than a journal piece, its creation and upkeep draw on the same labour hours for an instructor. The instructor must be significantly committed to the creation of the OER if there is limited institutional support, as described in this case, and also if there is institutional pressure to spend time doing other, more valued work, such as publishing in a more prestigious journal.

Finally, there is a tension inherent in the use of artificial intelligence in relation to OERs. As this case study shows, producing and maintaining OERs can be time-, labor-, and resource-intensive. With the rise of large language models like ChatGPT in the past year, there is potential to employ such AI tools to support the creation of OERs. This might seem to reduce the human labour needed to create an OER like Le Français Interactif. However, we also know that AI tools like ChatGPT do not appropriately cite sources and can even ‘make up’ information. Uncited sources are problematic because they effectively steal intellectual property from other academics, and false information is problematic because it diminishes the reliability and utility of the OER.

Even more concerning is that AI language models are trained on data that can be biased, and they can produce content in which this bias is embedded (Buolamwini, 2019). With an OER project like the one outlined in our case study, producing resources in “partnership” with an AI tool could run counter to the desire to create more culturally relevant and inclusive resources. More relevant to this case study, regarding language translation, AI tools like DeepL can be helpful but are not yet at the point where they can translate as effectively as a human who speaks multiple languages. For this reason, instructors might be wary of using AI tools as “co-authors” for OERs, in order to ensure that the quality of the instructional or learning resource remains high.

Conclusion

This case study demonstrates how the creation of an OER textbook for the FREN1205 – French Conversation course at Langara College exemplifies a pivotal shift in educational resources toward digital platforms. This tipping point is a response to the evolving needs of both students and instructors in the post-pandemic era of education. Ideally, an OER textbook offers learners enhanced accessibility, flexibility, and more inclusivity within their educational experience. However, challenges such as institutional support for digital labour and concerns surrounding the rise of artificial intelligence underscore the importance of institutional buy-in and ethical considerations as we integrate OER textbooks into the student experience.

References

Buolamwini, J. (2019, February 7). Artificial Intelligence has a problem with gender and racial bias. Time. https://time.com/5520558/artificial-intelligence-racial-gender-bias/

Dressman, M. (2019). Multimodality and language learning. In M. Dressman, & R. W. Sadler (Eds.), The handbook of informal language learning (pp. 39-55). John Wiley & Sons, Ltd. https://doi.org/10.1002/9781119472384.ch3

Issa, T., & Isaias, P. (2015). Usability and human computer interaction (HCI). In Sustainable Design (pp. 19-35). Springer.

Phillips, L. G., & Willis, L. (2014). Walking and talking with living texts: Breathing life against static standardisation. English Teaching: Practice and Critique, 13(1), 76.

Woodcock, J. (2018). Digital Labour in the University: Understanding the Transformations of Academic Work in the UK. tripleC: Communication, Capitalism & Critique. Open Access Journal for a Global Sustainable Information Society, 16(1), 129-142.

Woolgar, S. (1990). Configuring the user: The case of usability trials. The Sociological Review, 38(1, Suppl.), S58-S99.