I’ve participated in online activities that use predictive text for entertainment a few times in the last few years. It always seemed as though the predictions were based on recent conversations I’d had with friends over text or Facebook Messenger. They seemed to be curated for me specifically, trying to mimic how I speak and what “they” think I am going to say. The issue is that they are always very nearsighted. The algorithm chooses the next word based only on the previous word or two and not on the idea being communicated. This is why the results end up being so comical and entertaining (and nonsensical). They never accurately read into the sentence or the idea behind it. The output is really just a combination of words that I may have used before.
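To make that nearsightedness concrete, here is a minimal sketch of a predictor that only ever looks at the previous word. It is an assumption about the general idea, not how my phone's keyboard actually works, and the function names (`build_bigram_model`, `suggest`, `generate`) and the sample message history are made up for illustration.

```python
import random
from collections import Counter, defaultdict


def build_bigram_model(text):
    """Count, for every word, which words have followed it in the text."""
    followers = defaultdict(Counter)
    words = text.lower().split()
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def suggest(model, word, k=3):
    """Return up to k of the most frequent followers of a word."""
    counts = model.get(word.lower(), Counter())
    return [follower for follower, _ in counts.most_common(k)]


def generate(model, start, length=10, seed=None):
    """Keep picking one of the suggestions, the way tapping a keyboard's
    suggestion bar does. Only the last word is ever consulted."""
    rng = random.Random(seed)
    words = [start.lower()]
    while len(words) < length:
        options = suggest(model, words[-1])
        if not options:
            break  # this word was never followed by anything
        words.append(rng.choice(options))
    return " ".join(words)


# Hypothetical message history, invented for this example.
history = (
    "i do not know what to do "
    "i do not want to go "
    "i want to be a part of a team"
)
model = build_bigram_model(history)
print(generate(model, "i", length=12))
```

Because each choice depends only on the word before it, the output can loop back on itself or contradict itself, which is the going-in-circles effect that makes these sentences so funny.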

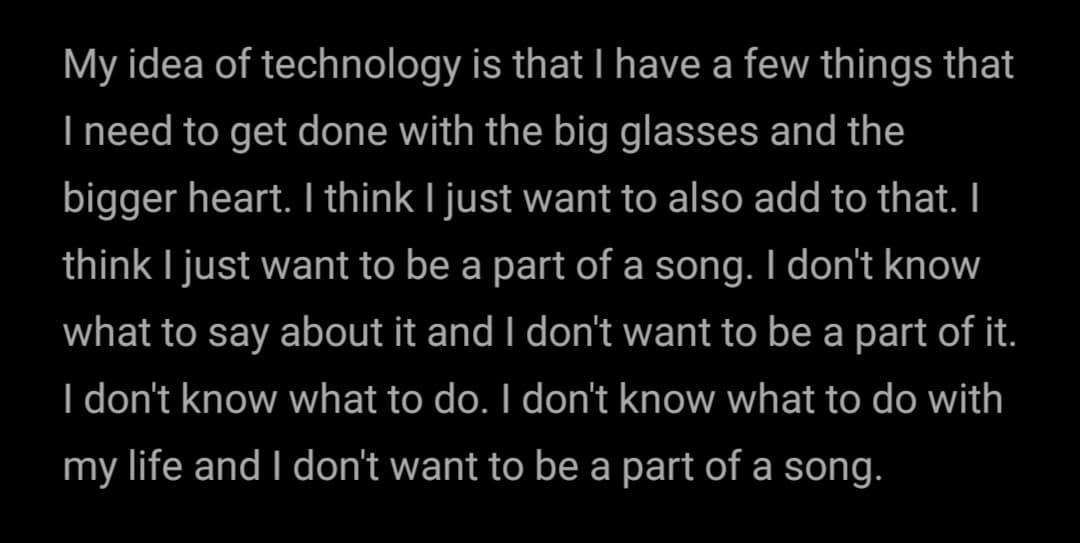

With that said, the following is the predictive text my phone came up with when using the “My idea of technology is…” prompt:

My reaction:

The first thing I noticed was “with the big glasses and the bigger heart”, which was something I wrote in an Instagram post wishing my best friend a happy birthday in November! Secondly, the contradiction and indecisiveness made me laugh. I am actually often quite indecisive, and I am sure I have said “I don’t know what to do” many times in conversations on my phone. I think the predictive text tried to sound like me, which makes me reflect on how indecisive I actually sound in real life. However, I think it didn’t do a very good job and seemed to almost go in circles. The deeper question: Isn’t that what indecisiveness and uncertainty are? Going in circles in your mind? LOL

In addition, I don’t think that I have ever mentioned that I did or did not want to be a part of a song… I assume that it took the words “of a” and predicted “song”… which is bizarre, since I feel as though I would have followed that particular combination with so many other words. I do love music and I do share songs with my friends, but it seems to be a bit of an existential leap to suggest that I’ve ever admitted that I wanted to be a part of a song.

The podcasts and video talks we watched this week were particularly eye-opening for me. I knew, vaguely, what algorithms were and how they affected social media channels, but I did not know the extent to which they affect so many aspects of our lives, including our justice systems, customer spaces and work environments! Some of the most commonly discussed algorithms today are those found on social media, along with their impact on political polarization. I knew that if you actively engage with posts that represent certain views, you are continuously fed more posts expressing the same views, effectively creating dangerous echo chambers where you are constantly validated for one point of view. But the reality is that there are many points of view, and we should be exposed to them so that we practice thinking critically and building empathy towards people who might think differently. Unfortunately, this point seems to have been lost when it comes to engaging on many online platforms.

Algorithms, in general, are meant to observe trends that can thereafter (try to) predict behaviour. Reading Cathy O’Neil’s article in the Guardian, “How can we stop algorithms telling lies?”, made me think about Task 9’s Networking Assignment and how it led us to reflect on how the connections and associations we observe may not always accurately reflect reality. They create assumptions. Algorithms are really the same. Connections are made based on interactions and clicks, and these form the trends from which predictions are calculated. It seems like they try to reduce human behaviour to assumptions made from data. I appreciate how O’Neil breaks down the issues with algorithms “telling lies” into layers that fall on a spectrum from unintentional to intentional, all of which create very harmful outcomes. It seems to me that the use of algorithms has become deeply systemic, or perhaps represents “the system” in many ways. The harmful outcomes of algorithms reflect some of the biggest issues within our systems, systemic racism for example. In reality, the system, like many algorithms, seems to benefit only a certain percentage of our population. As with most systemic issues, I agree with O’Neil that addressing these issues needs to start in political agendas before we look for a technological solution. However, and not to be too pessimistic, it’s going to be incredibly challenging to change these issues when the biggest companies on the planet, like Google, Facebook and Amazon, run the “algorithm” show.

References:

O’Neil, C. (2017, July 16). How can we stop algorithms telling lies? The Observer. Retrieved from https://www.theguardian.com/technology/2017/jul/16/how-can-we-stop-algorithms-telling-lies