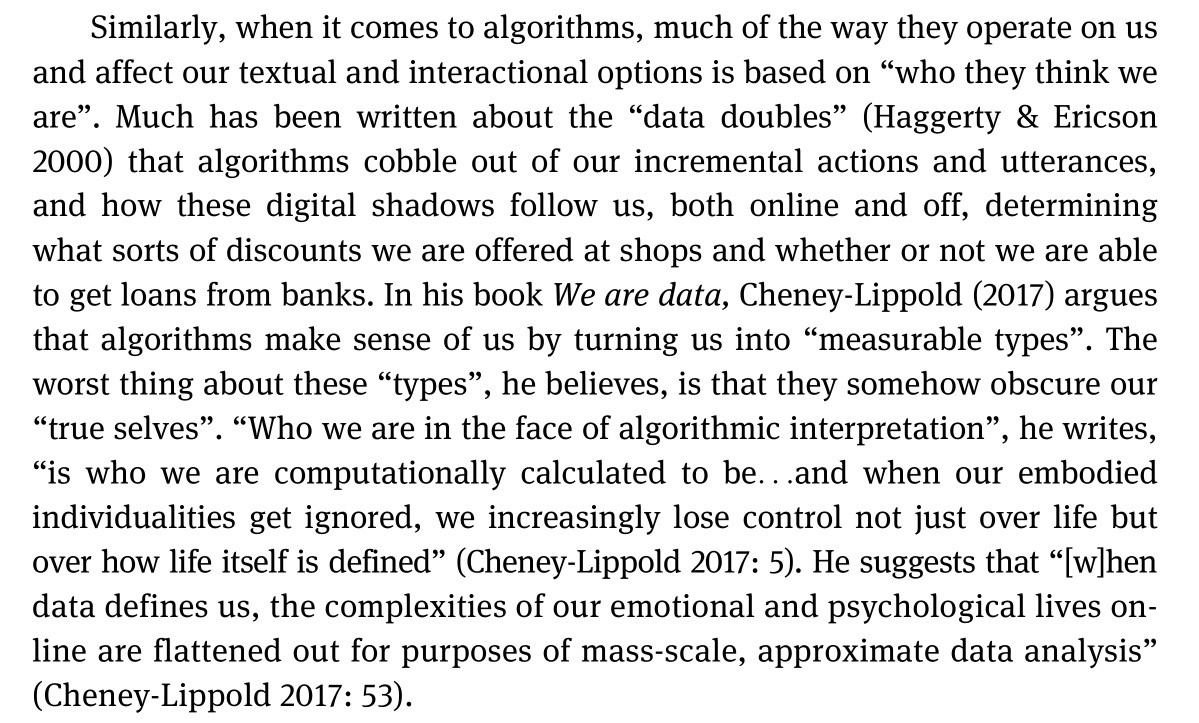

This week I decided to dive into the world of algorithms, a topic that is gaining traction as we become more aware of their pervasive use in everyday life. I knowingly engaged with a simulated activity called ‘Detain/Release’, which puts you in the position of using a pretrial algorithmic risk assessment tool. You review the cases of 25 defendants, choosing to either detain or release each one based on their risk assessment and a few other limited pieces of information. Here is an example of the information you are given to make this decision:

During the activity, I quickly realized I am not cut out for law enforcement! First, I found myself leaning towards trusting the defendants’ testimony and opted to release them more often than detain them. Maybe it is the nursing background, but I really did not like making the decision to detain someone. Second, I knew that an algorithm was making suggestions about the potential risk of releasing these individuals. With that knowledge, and knowing the limitations of algorithmic judgement, I found myself trusting the AI’s recommendation less than the individual’s own statement. Because of these conflicting thoughts, and an enormous lack of context, I struggled to decide whether to detain or release each of the 25 defendants.

Kate Crawford does an excellent job of highlighting the limitations of algorithms and AI in her book, Atlas of AI (image links to a video). She discusses in detail how algorithms reinforce oppression through bias embedded in datasets, and uses a dataset of mugshots to make her case. Crawford (2021) identifies how these images are stripped of their context and are taken without consent during times of extreme vulnerability. These images then become datasets, with the presumption that what the machine ‘sees’ is neutral. Crawford (2021) argues that these images are anything but neutral: “They represent personal histories, structural inequities, and all the injustices that have accompanied the legacies of policing and prison systems in the United States” (Crawford, 2021, p. 94).

I also watched a TEDx video by Hany Farid on the danger of predictive algorithms in law enforcement. I would recommend starting the video at 4:49. Farid (2018) does an excellent job of supporting Crawford’s points mentioned above. He walks the audience through a study his group conducted, which demonstrates how racism can become embedded in predictive modelling software as a result of systemic inequities. In the US, an African American person is more likely to have a criminal record due to long-standing societal and systemic injustices. Therefore, when prior criminal records are used as a data point in predictive modelling to determine the risk of re-offence, the algorithms will be inherently biased towards flagging African American individuals as high risk. His study also demonstrated that these so-called advanced technologies have the same predictive abilities as a random person from the street. Thus, algorithms which are supposed to overcome these social issues are merely mirroring and replicating them. “Big data, data analytics, AI, ML, are not inherently more accurate, more fair, less biased than humans” (Farid, 2018). So why do we use them?
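To make that mechanism a little more concrete for myself, here is a minimal Python sketch. It is not Farid's study or any real risk assessment tool. Every number, group label, and the `simulate` function itself are hypothetical assumptions chosen for illustration. Both groups behave identically, but past offences in group B are more likely to end up on record (standing in for heavier policing), and the "tool" flags anyone with two or more recorded priors as high risk.

```python
import random


def simulate(n_per_group=5000, seed=0):
    """Toy model: same behaviour in both groups, different recording rates.

    All values here are illustrative assumptions, not real-world estimates.
    """
    rng = random.Random(seed)
    # Hypothetical chance that a past offence ends up on someone's record.
    record_prob = {"A": 0.3, "B": 0.6}
    results = {}
    for group, p_record in record_prob.items():
        flagged = 0
        non_reoffenders = 0
        false_positives = 0
        for _ in range(n_per_group):
            # Underlying behaviour is drawn the same way for both groups.
            past_offences = rng.randint(0, 4)
            reoffends = rng.random() < 0.1 + 0.1 * past_offences
            # What the tool sees: only the offences that were recorded.
            recorded_priors = sum(
                1 for _ in range(past_offences) if rng.random() < p_record
            )
            high_risk = recorded_priors >= 2
            if high_risk:
                flagged += 1
            if not reoffends:
                non_reoffenders += 1
                if high_risk:
                    false_positives += 1
        results[group] = {
            "flag_rate": flagged / n_per_group,
            "false_positive_rate": false_positives / non_reoffenders,
        }
    return results


if __name__ == "__main__":
    for group, stats in simulate().items():
        print(group, stats)
```

In this toy setup, group B is flagged as high risk more often, and people in group B who do not go on to re-offend are wrongly flagged more often, even though the underlying behaviour is identical. The disparity comes entirely from what was recorded, which is the point I took from Farid's talk.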

Anecdotally, I contacted a friend of mine who works in law enforcement on Vancouver Island to share the ‘Detain/Release’ activity with him, along with the article about this type of policing. I wanted to hear what he had to say from his perspective. To my pleasant surprise, he was quite aware of these tools and their limitations. He explained that they are not used at his detachment, but said that larger detachments might use them. He also acknowledged that minorities and poor communities experience higher rates of crime, and that these tools can perpetuate the cycle of over-policing. He said that if we look at what is being done in the Canadian justice system, with decriminalizing drug possession for personal use and petty crimes, and the culture of judges not staying charges or not enforcing conditions of release, this is a starting point for combating these inequities.

References

Crawford, K. (2021). Atlas of AI. Yale University Press.

Farid, H. (2018). Retrieved from YouTube: https://www.youtube.com/watch?v=p-82YeUPQh0