I had never heard of speculative fiction or speculative futures before, so this was an interesting module! It was fascinating to learn how speculating and thinking creatively can open us up to new opportunities for invention and problem solving. One topic that came up frequently in this week's readings and podcasts was medicine: its speculative future and the role AI will play in it. It comes as no surprise that AI is being tested in all kinds of industries, from education to law, so why not medicine as well? Some studies have even shown that AI can diagnose certain diseases faster, and in some cases more accurately, than physicians. So I thought I would take a stab at speculating about the role AI could play in the future of medicine.

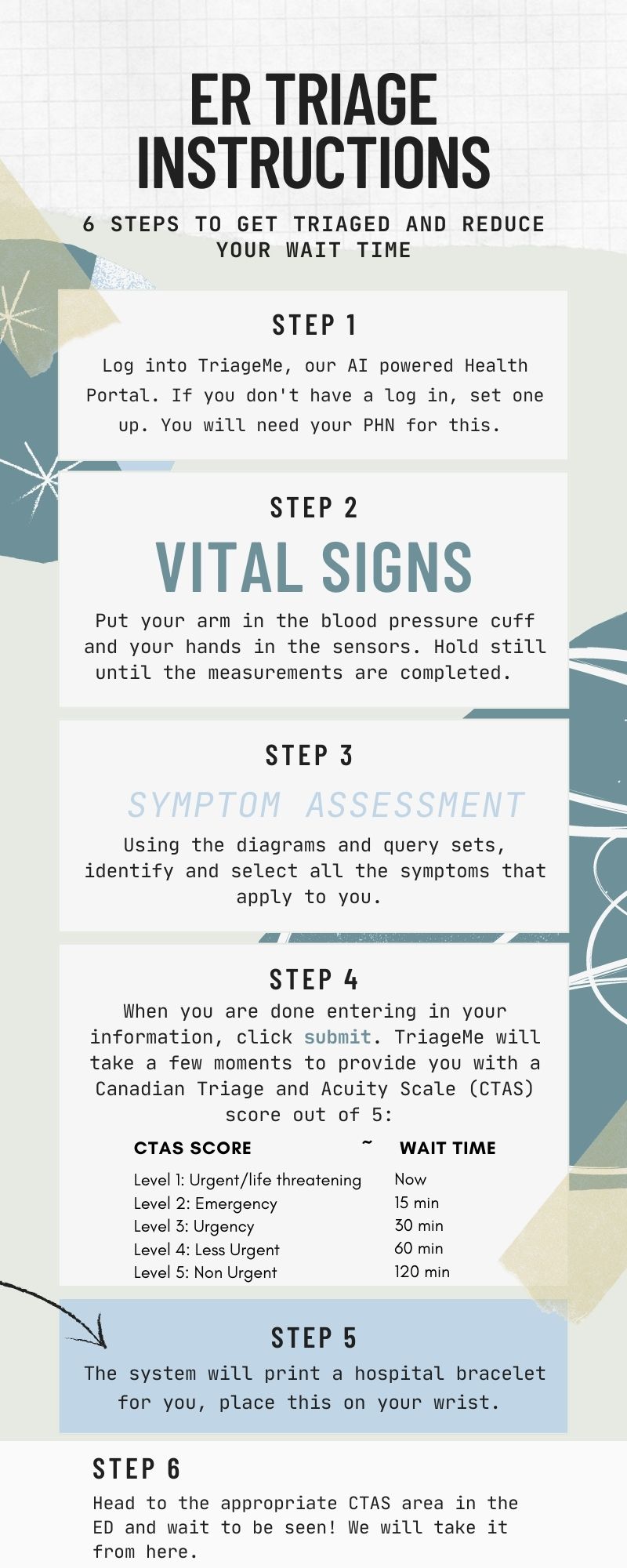

Welcome to your new and improved hospital, equipped with the latest and greatest in technology! With advancements in our health informatics and the latest in machine learning software, we are happy to introduce the first of its kind: TriageMe! Think of TriageMe as your virtual nurse. They will welcome you in the ER waiting room and guide you through the triage process. To get started, read this flyer and follow the directions, and someone will be with you shortly!

This was an interesting activity, and it makes me wonder what is currently being imagined and designed for the future of healthcare. A few of my thoughts about AI in medicine relate to the moral crumple zone, accessibility, and bias.

In the Bellwether podcast, hosted by Sam Greenspan, a valuable point was made about the moral crumple zone. He described it as the space between an AI error and a failed human intervention that results in harm, where humans may end up taking the fall and even being held criminally liable for errors in the AI's judgement. I could not help but think of the many scenarios in health care where a moral crumple zone like this could occur, such as a doctor or nurse failing to catch and intervene when AI makes an error in judgement. AI would never be able to function without humans observing it and ensuring its accuracy, but as Sam points out, no human can maintain hypervigilance 100% of the time. To engage with AI safely in medicine, we would need to use it as a tool, an adjunct, rather than a replacement for doctors and nurses.

My other thought was whether this technology would make healthcare more or less accessible. Could AI help combat the staffing issues we are seeing across the nation by streamlining certain tasks? Or would it worsen them by taking healthcare providers' time away from direct patient care to manage software and technology?

Lastly, we are acutely aware that AI can be racist and sexist. AI essentially mirrors the current state of society, since that is where its training data is drawn from. Therefore, there is a considerable risk that AI-powered healthcare software will replicate the very social injustices we are trying to move away from. How could AI in healthcare further disadvantage those who are marginalized or oppressed? Phillips-Beck et al. (2020) summarize some of the key issues related to racism within Canada's healthcare system. While their list is not exhaustive, they highlight one prevailing issue: the outright exclusion of First Nations, because healthcare is not made accessible to them. How could a dataset that excludes a key population train AI without implicit bias? It would be very difficult. AI cannot be introduced into any industry without extreme caution and intention, because it can have catastrophic effects.

References

Phillips-Beck, W., Eni, R., Lavoie, J. G., Avery Kinew, K., Kyoon Achan, G., & Katz, A. (2020). Confronting racism within the Canadian healthcare system: Systemic exclusion of First Nations from quality and consistent care. *International Journal of Environmental Research and Public Health, 17*(22), 8343. https://doi.org/10.3390/ijerph17228343 (PMID: 33187304)