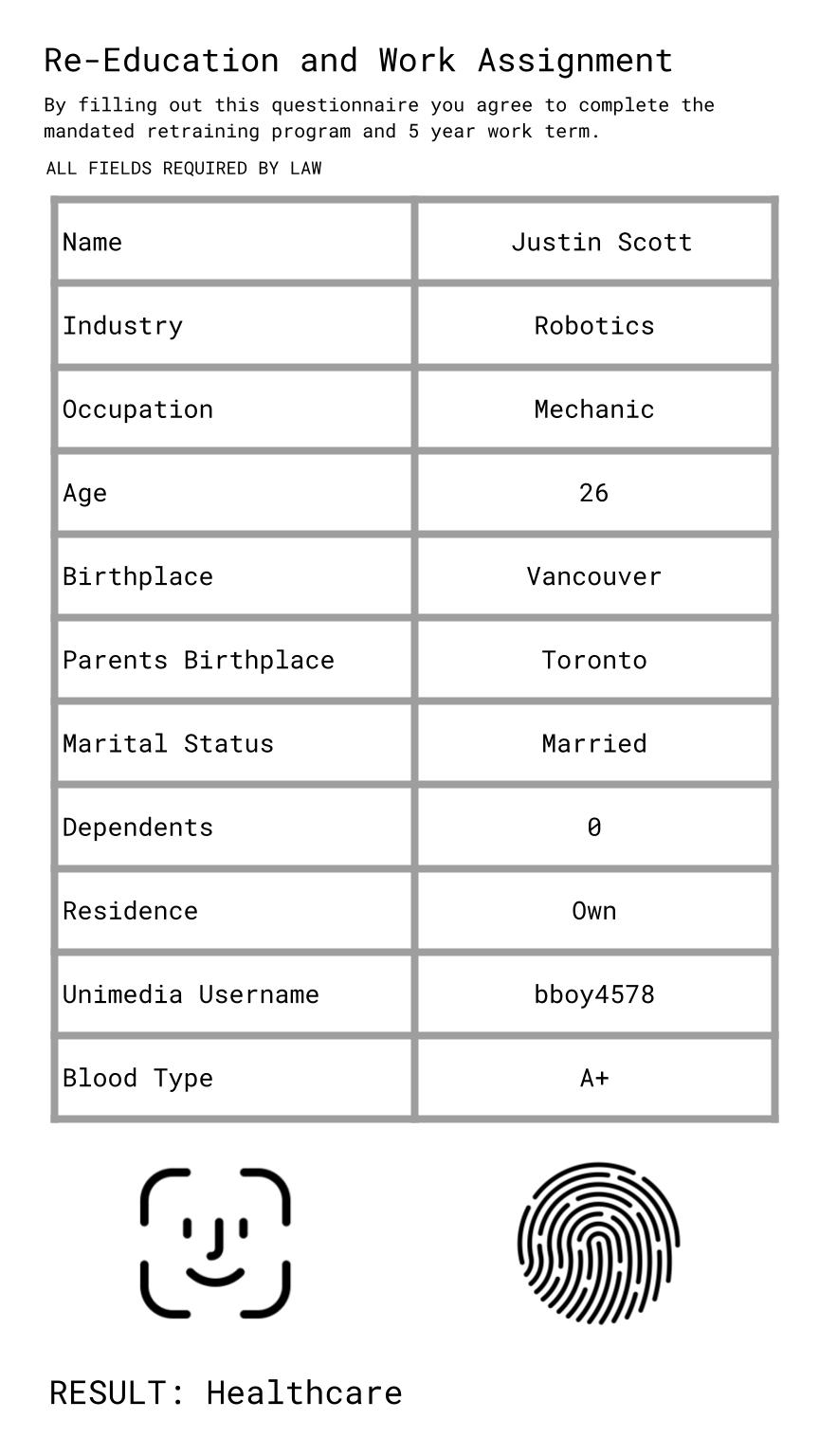

Worker one reads as a white man who has been funneled into a professional healthcare setting.

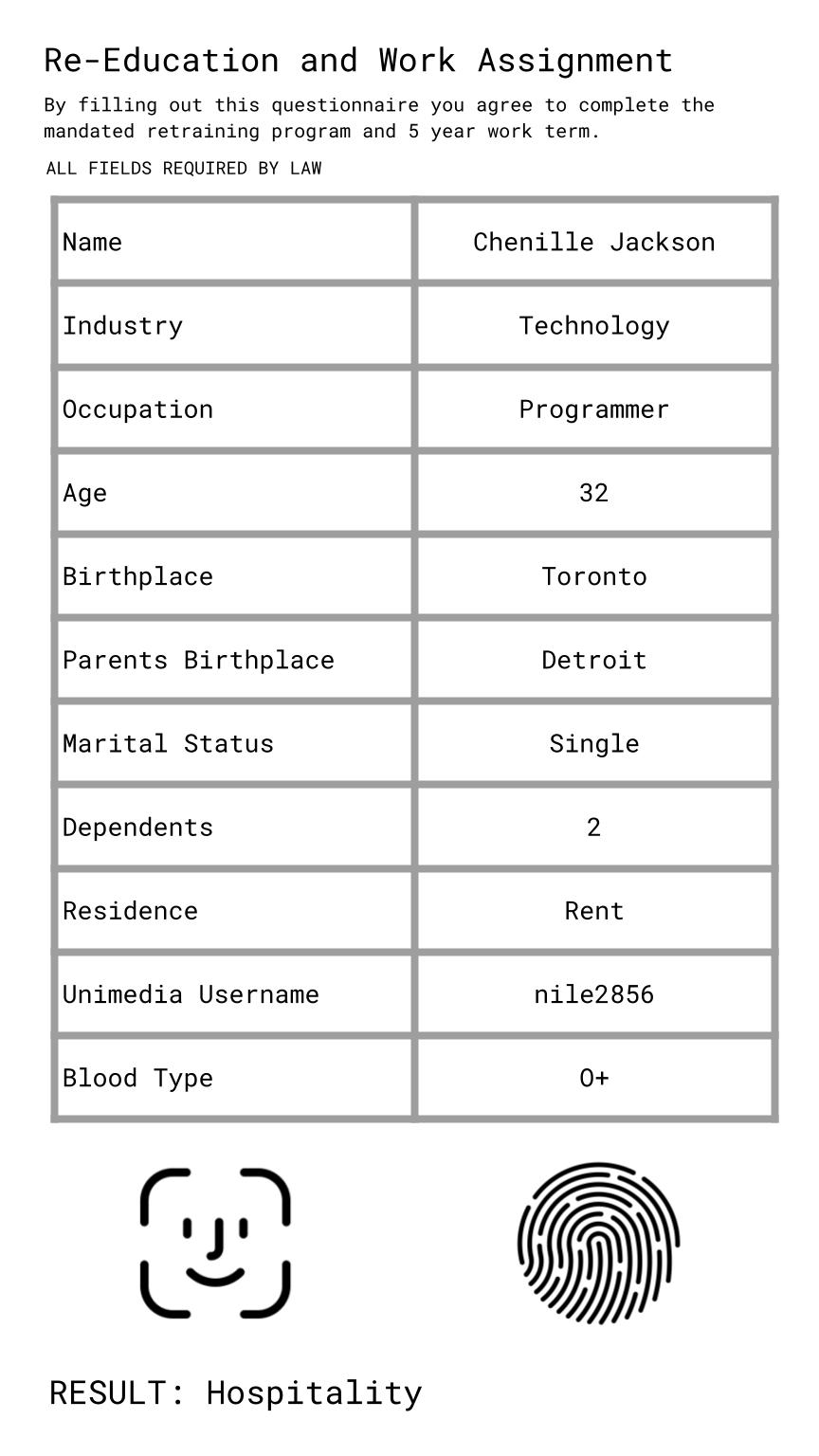

Worker two reads as a woman of colour who has been funneled into hospitality (service) work despite being a computer programmer.

This speculative future is an app-based survey completed by workers whose jobs have been lost to automation. The program is run by AI, and the interface has lost all aesthetic qualities. Workers apply to receive educational training so they can work in a new industry; worker retraining is currently a popular political solution to job loss caused by industrial and economic change. The answers are analyzed by an algorithm that predicts each applicant's likelihood of success based on the profiles of current workers in its database. Given the bias already present in today's algorithms, I speculate that such a system would perpetuate racist and sexist discrimination when sorting candidates into service roles versus professional roles.

My speculation about automation-proof jobs draws on a podcast I recently listened to in which author Kevin Roose discusses his book, Futureproof: 9 Rules for Humans in the Age of Automation. Roose theorizes that "surprising" jobs, which involve making decisions based on changing and unpredictable variables, and "social" jobs, which involve service or care, are unlikely to be automated.

My speculative example shows the results for two individuals from different industries who complete the questionnaire and are routed into mandatory education and work. The questionnaire is short but quickly becomes highly personal and seemingly arbitrary. It also requires workers to disclose their social media usernames, face ID, and fingerprints, implying that the algorithm will track and collect their social media data in addition to demographic, criminal record, and health data. The results imply that individuals who fought hard against systemic barriers to work in the technology industry are set back once again by automation.

Davis, D. (2021, March 16). The Age Of Automation Is Now: Here’s How To ‘Futureproof’ Yourself. NPR. https://www.npr.org/2021/03/16/977769873/the-age-of-automation-is-now-heres-how-to-futureproof-yourself

Megan – Thanks for this creative future speculation! The concept of humanity putting so much faith and confidence in AI that it will assign us to our most suitable professional roles is an interesting one. I explored a similar concept, but in a very different way. Truthfully, in the not-so-distant future, I can foresee these AI technologies relying less on the survey data a user inputs and more on relevant genetic biomarkers to make a more informed and coherent decision. This is far more wide-reaching than filling out a survey. For example, if a person has a genetic predisposition to diabetes, they probably shouldn't be a candy-maker. If a person's parents were both Olympic athletes, chances are that person has inherited athletic genetics as well. Both of these situations evoke 'necessary discrimination'. Moreover, you've indicated that the first user is married with no dependents, while the second is single with two dependents; these are not facts generated by the AI but information entered by the user. There is no mention of race in the illustrations either, which leads me to question why you feel AI will perpetuate "racist or sexist" modes of discrimination.

Thanks for the feedback, Carlo.

Well done, Megan! Your artifacts are very close to my first narrative in many ways. Both demonstrate the consequences of building systems on flawed assumptions that we cannot even explain, let alone scrutinize.