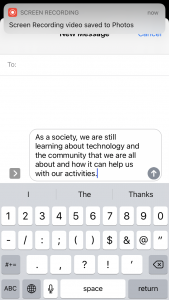

Predictive Text Screenshot

Writing a micro-blog using a prompt and predictive text reminded me of what it often looks like when young children are learning to write. They may have lots they want to say, but when they go to write, they often limit their stories by using only simple words that are easy to write. They often change their story as they go, substituting words that are simpler to write than the words they would choose when storytelling. In addition, they will often cut their story short when they get frustrated, abruptly ending it with a period. I felt similarly constrained when writing based on my prompt “As a society, we are…”, as I was limited to the simple words that the messaging app suggested, and I wanted to end my message early when I became frustrated and it became clear that what I wanted to say was not going to be articulated in this way. The final product was the following:

As a society, we are still learning about technology and the community that we are all about and how it can help us with our activities.

I do feel this way (I think!), but that is definitely not how I would have articulated this sentiment outside of the confines of predictive text.

Thus, it was very interesting to learn a bit of the science behind predictive text algorithms and how they are built upon the past 50 years of print journalism (McRaney, 2017). It makes sense, then, that the majority of the words suggested by predictive text are simple, as simple words largely make up the most commonly used words in the English language. It does bring to light the philosophical question of whether the prevalent use of algorithms in our society is helping us or hurting us.

Prior to this week, I was oblivious to the proprietary nature of algorithms and the countless ways that algorithms have been used and abused. The Reply All podcast episodes “The Crime Machine, Part I and II” (Vogt, 2018; Vogt, 2018) should be required listening for everyone who wants to understand how algorithms help to perpetuate systemic racism, especially since, according to Dr. Shannon Vallor, algorithms act as both an “accelerant” and a “mirror” for existing problems within society (Santa Clara University, 2018). Add to this the “nefarious” uses for algorithms that Dr. Cathy O’Neil (2017; Talks at Google, 2016) describes, in which algorithms shield and hide crimes, deceptions, and injustices because often even the people using the algorithms have little understanding of how they operate, and the picture is incredibly disturbing. It would be nice to see more government oversight, but politicians are slow to pass new policies and susceptible to the influence of rich lobby groups, particularly in the United States, where much of this type of regulatory framework would still need to be developed before it could be implemented. It is hard to believe or trust that this would be a solution. Earlier today, I happened to see this TikTok video by @washingtonpost, which I think comically captures the sad reality of waiting for governments to step in and regulate the tech industry and its use of algorithms when they really do not have the technical knowledge to fully understand what is happening.

@washingtonpost: Lawmakers are interrogating the CEOs of Google, Facebook and Twitter today on the role the companies have played in spreading misinformation.

In the end, I think that transparency is going to have to be demanded by consumers, perhaps through a movement similar to the environmental movement and facilitated by an NGO, but ultimately its success will depend on education. The public needs to become aware of the power of algorithms, gain a deeper understanding of how they work, and demand better to prevent algorithms from hurting people and society.

References

McRaney, D. (Host). (2017, November 20). Machine bias (No. 115) [Audio podcast episode]. In You are not so smart. https://youarenotsosmart.com/2017/11/20/yanss-115-how-we-transferred-our-biases-into-our-machines-and-what-we-can-do-about-it/

O’Neil, C. (2017, July 16). How can we stop algorithms telling lies? The Guardian. https://www.theguardian.com/technology/2017/jul/16/how-can-we-stop-algorithms-telling-lies

Santa Clara University. (2018, November 6). Lessons from the AI mirror Shannon Vallor [Video]. YouTube. https://www.youtube.com/watch?v=40UbpSoYN4k

Talks at Google. (2016, November 2). Weapons of math destruction | Cathy O’Neil | Talks at Google [Video]. YouTube. https://www.youtube.com/watch?v=TQHs8SA1qpk

Vogt, P.J. (Host). (2018, October 12). The crime machine, part I (No. 127) [Audio podcast episode]. In Reply all. Gimlet Media. https://gimletmedia.com/shows/reply-all/76h967/127-the-crime-machine-part-i

Vogt, P.J. (Host). (2018, October 12). The crime machine, part II (No. 128) [Audio podcast episode]. In Reply all. Gimlet Media. https://gimletmedia.com/shows/reply-all/n8hwl7/128-the-crime-machine-part-ii#episode-player

Washington Post [@washingtonpost]. (2021, March 25). Lawmakers are interrogating the CEOs of Google, Facebook and Twitter today on the role the companies have played in spreading misinformation [Video]. TikTok. https://www.tiktok.com/@washingtonpost/video/6943684534089092358?lang=en&is_copy_url=0&is_from_webapp=v3&sender_device=pc&sender_web_id=6903017051725383173