It’s ironic that as I sit here typing, I have started season 2 of Stranger Things (it came highly recommended by Ernesto), and I just watched the one where Dustin asks, “presumption is a good thing right?” (Stranger Things, Ep2).

From the start of this task I did not want to presume anything. Most of the resources we read or listened to this week discussed how algorithms can be skewed by biases around race, gender, and wealth, among other characteristics. So, when making the choice to detain or release, I wanted to avoid looking at the picture in case it indicated colour or gender. Now, I feel strongly that I would not have made choices based on the colour or gender of the detainee, but I can imagine that many people have felt the same way and, with good intentions, created algorithms that did unintentionally focus on those characteristics. Dr O’Neil (2017) describes algorithms as formal rules, usually written in computer code, that make predictions about future events based on historical patterns.

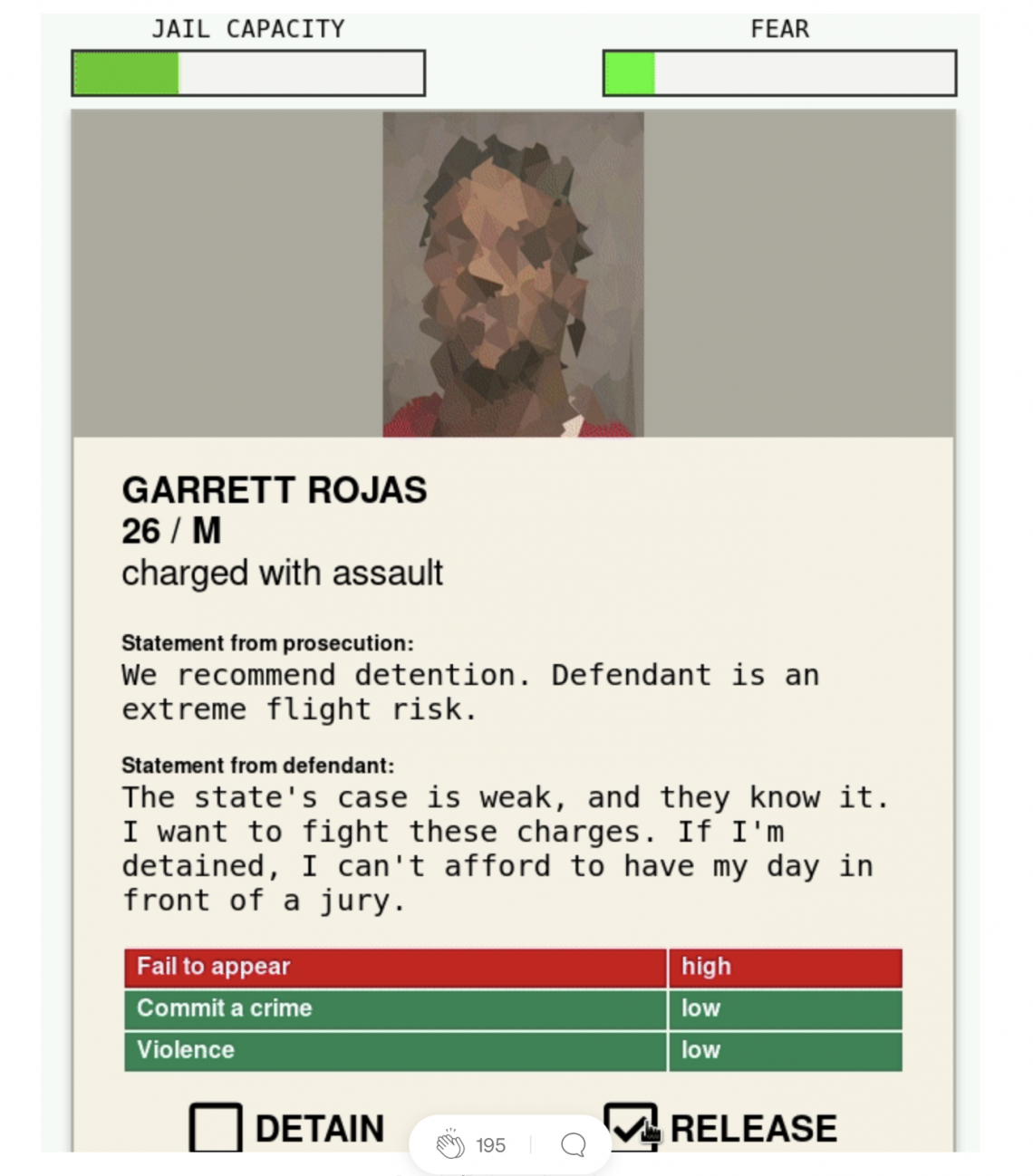

The first parameter I looked at was the level of violence; if it said high, then they were detained. If the violence level was medium, then I looked at the rate under ‘Fail to appear’ or ‘Commit a crime.’ For anything under high, I would read the defendant’s statement. If their job or children would be affected by detention, then I usually released them. Level of violence was a limit that I felt was measurable without being affected by characteristics like gender or race. The ‘Fail to appear’ and ‘Commit a crime’ parameters are unknowns. How do they measure them? How do they rate them? That is why I didn’t feel they were of enough value to influence my choices. Still, Cathy O’Neil (2016) warns that just because you don’t look doesn’t mean it’s fair.
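As a rough illustration, the rules described above can be written out as a small Python function. This is only a sketch: the function name, the labels, and the "review" fallback are placeholders invented for this example, not anything taken from the simulation, and "usually released" is simplified here to "always released."

```python
def decide(violence: str, job_or_children_affected: bool) -> str:
    """Hypothetical sketch of the detain/release rules described in the post.

    violence: "low", "medium", or "high" (labels assumed for illustration).
    job_or_children_affected: True if the defendant's statement says that
    detention would affect their job or their children.
    """
    # Rule 1: a high violence level always led to detention.
    if violence == "high":
        return "detain"

    # Rule 2: below high, the defendant's statement was read; an impact on
    # job or children usually led to release (simplified to "always" here).
    if job_or_children_affected:
        return "release"

    # The post does not spell out what happened in the remaining cases,
    # so this fallback is an assumption rather than a described rule.
    return "review"


print(decide("high", True))     # detain
print(decide("medium", True))   # release
print(decide("low", False))     # review
```

Note that the picture, ‘Fail to appear,’ and ‘Commit a crime’ are not inputs at all, which mirrors the choice not to let them drive the decision. Even so, written this way the rules are clearly an algorithm of their own, with assumptions built in.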

I did look at the levels of capacity as well as fear, but I decided that I would not let them affect my decisions unless one of them got high. As you can see in the above image, by the end of the simulation I had filled both measurement bars past 75%.

When you see real people affected by the choices made, it is very tempting to look to something like an algorithm to take the emotion out of those choices. Numbers are straightforward, and they can make the decisions for you. The problem is in the formula used to make those calculations. Such formulas should be continually assessed, just as a teacher must reassess their practices as they go, ensuring the pedagogy is doing the job it should and is not negatively affecting the students in their class.

Resources

O’Neil, C. (2017, July 16). How can we stop algorithms telling lies? The Observer. Retrieved from https://www.theguardian.com/technology/2017/jul/16/how-can-we-stop-algorithms-telling-lies

O’Neil, C. [Google Talks]. (2016, November 2). Weapons of Math Destruction [Video]. YouTube. https://www.youtube.com/watch?v=TQHs8SA1qpk