Link 1 – Sebastoam’s Task 1 – What’s in Your Bag?

Comment made on May 21st but did not go through moderation:

Hi Sebastian,

Looking forward to learning alongside you. Wow, 4 MET courses in one semester. Best of luck! And congrats on the upcoming retirement as well!

25 years ago my bag would definitely have had a Sony Discman too (I think they were rebranded as Walkman later, when the original cassette Walkman was discontinued), and I also carried around a 25-disc CD wallet so that I could switch to whatever CD I was in the mood for. Did you do something similar, or did you just have the one CD at a time?

If memory serves, 25 years ago CD burners weren’t popular yet, so I just listened to the CD tracks in the order that the artist designed. I think this is something that was gradually lost over time: as MP3 players and online music streaming services became more popular, I found it rarer to listen to a CD in its entirety, and instead jumped around to only the tracks I enjoyed. If we go with the “weaving” and “creating” definition of text, then we went from large “texts” created by the artist (the CD with its specific track order) to small chunks by different authors, or a “text” created by an algorithm such as Spotify’s recommended playlists. What are your thoughts on that transition?

Cheers,

Matt

This link demonstrates that as early as week 1, my preexisting notion of “text” had changed from something with written words to something that is woven and created. Seeing Sebastoam mention that they would have had a Sony Walkman in their bag 25 years ago reminded me of my own experiences with Discmans and Walkmans, of the type of text that is created when one makes a mixtape or a mix CD, and of the thought that artists and support staff put into deciding the track order of an album. Sebastoam’s post, in conjunction with the module one readings, allowed me to incorporate more items into my mental schema of what text is.

Link 2 – Kris’s Task 2 – Does Language Shape the Way We Think?

Thank you for sharing your perspectives. Your point about Borditsky’s [sic] points at 24:00 reminds me of this TED Talk (https://youtu.be/PWCtoVt1CJM?t=234) by Gebru. Artificial intelligence perpetuates certain gender stereotypes and associations, following the trends of the data that trains it.

Kris’s point about gendered nouns and the prevalence of gender stereotypes in society reminded me of Gebru’s TED Talk, which I had encountered in previous MET courses. Little did I know at the time that this would be a major topic several months later in module 11, when we talked about algorithms perpetuating preexisting bias. This linkage shows how the various aspects of this course are interconnected, much like how hypertext has shifted our thinking from linear to networked. Boroditsky’s TED Talk links to algorithms, and to a far weaker extent, my thoughts on how the arrangement of tracks on an album could be text link to the Voyager Golden Record task.

Link 3 – Sebastoam’s Task 2: Does Language Shape the Way We Think

Comment made on May 26th but did not go through moderation:

I just wanted to chime in on your 1:46 point about the abundance of languages. In a TED Talk (https://youtu.be/RKK7wGAYP6k?t=762) that’s a condensed version of this video, Boroditsky talks about how we’re losing one language a week, and we could potentially lose half of our languages in the next century. Things such as colonization, globalization, and social values that view certain languages as superior to others all lead to the continued loss of languages around the world.

That said, there certainly are efforts to combat this. As part of Truth and Reconciliation efforts, the BC Ministry of Education has begun to offer Indigenous language classes in secondary school. One of the videos in 2.2 came from Wikitongues, an attempt to archive languages and dialects near extinction around the world. Hopefully more places around the world will also begin to implement policies to help preserve dying languages.

Sebastoam’s point about languages being at risk of extinction is one that I emphasized in my Task 2 as well by talking about my mother tongue, Taiwanese. Even outside of this course, whether in teaching an introductory epistemology course or in my own musings on various subreddits, I get quite passionate about dying languages, so I jumped at the chance to reply to Sebastoam, who made that point. Sebastoam’s post also allowed me to think and elaborate on ongoing efforts to preserve dying languages, something I didn’t have a chance to discuss in my own task.

Link 4 – Joti’s Task 3 – Voice to Speech

I really enjoyed reading your Digging Deeper section. From reading Gnanadesikan I had a few thoughts about Taiwanese, Chinese, and literacy rates, and reading your Aha Moment section led to further reflections.

My initial response to the readings this module was skepticism. Many of the readings seem to suggest a “writing-envy” in speakers of languages without writing, and that without writing a language is fated to die out (Gnanadesikan 2011) or not be able to perform complex mental processes (Ong 2002, Schmandt-Besserat & Erard 2007). Schmandt-Besserat & Erard (2007) also argue that the English/Roman alphabet will eventually find its way to various languages. My initial reading response focused on Indigenous languages, and on how transcribing them using the English/Roman alphabet may lead to further westernization of Indigenous peoples and the loss of what is unique about an oral-only Indigenous language. That said, as the later readings of the module demonstrate, Romanizing Indigenous languages is a practice that Indigenous communities are actively engaging in, so perhaps my fears are unfounded (Hadley 2019, Anishinaabemodaa n.d.).

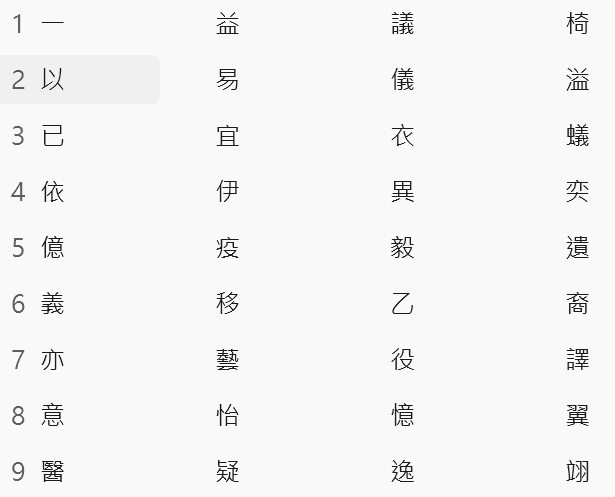

Your reflections led to some further thoughts about how Taiwanese applies to the arguments in this module. On the one hand, Taiwanese did have a Romanization system called Peh Oe Ji (POJ) that Western missionaries introduced to transcribe Taiwanese sounds into written text using the Roman alphabet, but this system has been mostly unused since the Japanese colonial era (Kloter 2017). The fact that Taiwanese doesn’t have a writing system (Mair 2003) and has fewer speakers every year supports Gnanadesikan’s (2011) arguments, but I also wish to point out that it’s because of writing that regional languages/dialects are eliminated in favour of a main national language. For example, in China there is a negative correlation between literacy rates and the usage of regional languages/dialects such as Shanghainese (Wellman 2013), and module 4 readings such as Innis (2007) highlight how the printing press allows for far faster dissemination of knowledge through writing than through oral communication. Writing may help preserve languages, but it’s also a driving force for languages becoming endangered in the first place.

Joti’s task 3 led to a lot of opportunities for reflection and elaboration. While going through the readings, especially Schmandt-Besserat & Erard, one claim I was quite uncomfortable with was that Romanization is inevitable in other languages, and I thought of how the local Indigenous languages are Romanized in various ways. On the one hand, it helps with the preservation of the language, but on the other hand, it’s further westernization/colonization of Indigenous culture. This was a topic on which I wish I could have consulted various local Indigenous people and heard their thoughts, though further course materials such as Hadley 2019 and Anishinaabemodaa n.d. did alleviate my concerns somewhat by emphasizing language preservation.

I was able to further elaborate on Taiwanese, and now at the end of the course I’m reminded of how Joti’s Task 3 allowed me to make the link between the printing press discussed in module 4 and how writing both preserves and endangers languages.

Link 5 – Brie’s Task 5 – Twine

Hi Brie,

Thanks for the fun Twine. My first time through I managed to make most of the “moving forward” decisions rather than the decisions that needed to loop back, but my second time around I decided to check out the other options. I think my experiences with your Twine show a potential limitation of hypertext (and Choose Your Own Adventure stories): with so many potential paths, not everyone will explore every path.

This reflects a current conflict in pedagogy. On the one hand, as a teacher I want to provide opportunities for students to explore whatever paths they are interested in to further develop their skills and knowledge, and I think mediums that use hypertext are perfect for this. On the other hand, I’m limited by time and curricular constraints, so if I want my students to learn how to balance chemical reactions, rather than a non-linear medium such as hypertext where students can “wander off the path,” I prefer paper/linear texts that provide scaffolding for students to gradually develop skills and competencies.

Would you also say that there are some things that non-linear mediums are better for, but for other things linear text is the way to go? Or is that an anachronistic way of thinking, and should we just fully embrace non-linear text?

Brie’s wonderfully made Twine allowed me to think about a few aspects of hypertext that I didn’t have a chance to discuss in my own task. The first is that with many possible branches and endings, not everyone will explore every path. While one may argue that this is not a huge issue for entertainment purposes such as choose your own adventure stories, I believe that by not exploring all the paths presented we’re doing a disservice to the creator and the work they put into every path. Visual novels, a genre of video games that’s akin to choose your own adventure stories, sometimes get around this problem by unlocking the “true story” once all the major paths of a story are explored.

Brie’s task also allowed me to reflect on hypertext and education, and how under the current BC Ministry of Education policies there’s a conflict between wanting students to choose and explore various topics to develop skills and knowledge, and yet being constrained by a curriculum to focus on teaching certain topics, not to mention that various pedagogical concepts such as scaffolding may be far more difficult through the hypertext medium.

Link 6 – Steph’s Task 9 – Network Assignment

Hi Steph,

Thank you for your analysis of the data. Like you, I quickly noticed that we all selected El Cascabel and most of us selected Wedding Song, but that only led to more questions: what about the other seven people who selected El Cascabel but didn’t end up in our group, or the other five people who selected Wedding Song? I started looking at our non-selections and noticed that all five of us did not select Brandenburg Concerto 2, Sacrificial Dance, or Flowing Streams. While this could be a potential reason, as you’ve mentioned it’s impossible to infer accurately.

Another grouping method I thought about was seeing how many tracks I shared with each participant. I shared five tracks with you and Jonathan, while I only shared three tracks with Carlo and four tracks with Carol. There were three others with whom I shared five tracks who didn’t end up in my group. If I had the motivation and time, this could potentially be done for all participants, and that could perhaps give more data on how the algorithm grouped us.

Your point that the one track you truly liked wasn’t well connected in our group also highlights the deficiencies in our data. If we had to rank our selections and produce a weighted graph (which would have made both this task and task 7 far more difficult), we may have been able to obtain a visualization and grouping that better reflects our personal connections to these tracks.

Finally, like you, I had the thought that I didn’t belong to this group. While most of the group had diversity as their top selection criterion, I went with having vocals for mine. This further supports your point about the arbitrary nature of algorithmic groups; my main takeaway is that we should be vigilant about trying to understand the processes behind these algorithms rather than allowing them to remain a “black box.”

Both Steph and I had the same reservations about whether or not we truly belonged in the group we were placed in. Even though we used different methods for our analysis, we looked at similar things, such as how all of us selected El Cascabel and most of us selected Wedding Song. I feel that with the adjacency matrix that I constructed, it was easier to organize the data to see who else selected El Cascabel and Wedding Song, as well as to see our non-selections. Moving slightly away from the course (although still talking pedagogy), this is a prime example of constructivism: different people seeing the same thing (our Golden Record data) will look at it from different angles, highlight and analyze different things, and reach different conclusions. At the same time, this also gives more weight to the consensus truth test: if we approach the data from different perspectives and still reach the same conclusion (such as that algorithmic groupings feel arbitrary because they do not consider the reasons why we selected these tracks), then there is more validity to the conclusion we reached through different paths.
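
For readers curious about what the adjacency-matrix approach mentioned above might look like in practice, here is a minimal Python sketch. It is only an illustration under stated assumptions: the participant labels and most track names are made-up placeholders rather than the actual class data, and the function names are hypothetical. It builds a participant-by-track matrix, counts the tracks each pair of participants shares, and lists the tracks that nobody in a group selected.

```python
from itertools import combinations

# Placeholder data (an assumption, not the real class selections).
selections = {
    "A": {"El Cascabel", "Wedding Song", "Track 3"},
    "B": {"El Cascabel", "Wedding Song", "Track 4"},
    "C": {"El Cascabel", "Track 3", "Track 5"},
}
# "Track 6" is included so that at least one track is selected by nobody.
all_tracks = sorted(set().union(*selections.values()) | {"Track 6"})


def membership_matrix(selections, tracks):
    """Return one 0/1 row per participant, with columns in `tracks` order."""
    return {
        person: [1 if track in picks else 0 for track in tracks]
        for person, picks in selections.items()
    }


def shared_counts(selections):
    """Count how many tracks each pair of participants has in common."""
    return {
        (a, b): len(selections[a] & selections[b])
        for a, b in combinations(sorted(selections), 2)
    }


def common_non_selections(selections, tracks, group):
    """List the tracks that no member of `group` selected."""
    chosen = set().union(*(selections[person] for person in group))
    return [track for track in tracks if track not in chosen]


if __name__ == "__main__":
    for person, row in membership_matrix(selections, all_tracks).items():
        print(person, row)
    print(shared_counts(selections))
    print(common_non_selections(selections, all_tracks, ["A", "B", "C"]))
```

The pairwise counts give one possible similarity measure between participants. Whether the grouping algorithm used for the task relied on anything like it is not something this data can confirm.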