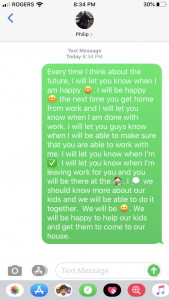

After going through this exercise, I found that the predictive text did not represent my own words. During the activity, I was not able to really think about what I was trying to convey in response to the prompt. My focus throughout the entire activity was primarily on creating sentences that would make sense. Relying solely on the predictive text, I found that it stifled my own creativity and individual thought. The resulting text does not represent my own thoughts and feelings on this subject.

For me personally, I love words, and the predictive text was an over-simplification of language. I am also an optimistic individual, and when I talk about my future, I naturally talk about my ambitions for my family or my job. Having two young kids, I would most likely talk about what I hope to see for them in the future. These are the things I reference when I talk about the future because, at this point in my life, they are my three biggest influences. Some of the words that the predictive text used were words that I often use when texting my co-workers or friends. Words like “work” and “kids” are not only associated with my future; they are also words that I use consistently when I text. That is what I found during this exercise: the predictive text kept offering words that I use frequently, and there were no insightful words that reflected my own hopes and dreams. The predictive text merely scratches the surface and never delves into the details or offers words that are actually associated with the concept of the future. Frequency was prioritized over word association when it came to which words would follow the word “future.”

While it is extremely convenient for the algorithm to pick up on one’s tendencies and commonly used phrases or words, it fails because it lacks the ability to “think” in more abstract ways. This is the scary part about our reliance on algorithms. In one of the podcasts I listened to, it was clear that New York police commanding officers were altering their arrest numbers to skew their performance in the CompStat program and make their precincts look good. The biases in the program were not examined, and the commanding officers were afraid to speak out about the fact that the data was skewing policing tactics.

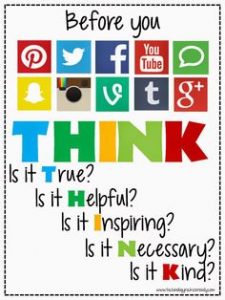

In public spaces, we are currently watching as individuals fail to question or think about what they see or read. People are using algorithms to manipulate what we see in public spaces such as Instagram, Facebook, or Twitter. We need to teach everyone to question what they read and to truly reflect on what they see. Algorithms need to be monitored closely and governed accordingly to ensure that they are not manipulating individuals’ thoughts in public spaces. Alongside that governance, people need education in digital literacy so that they can “THINK.” We need individuals to do the following:

When I teach digital literacy to the students at my school, they find this concept easy to understand and apply it throughout their time with us. We need the same governing principles for the algorithms that we use daily. Further education about these algorithms will better inform the public, providing a basic literacy that helps individuals understand how these algorithms are used and avoid over-reliance on predictive text.