The prompt I selected for this task was “My idea of technology is…”

Out of curiosity, I entered the prompt into Google, and the available autocomplete options did not pique my interest.

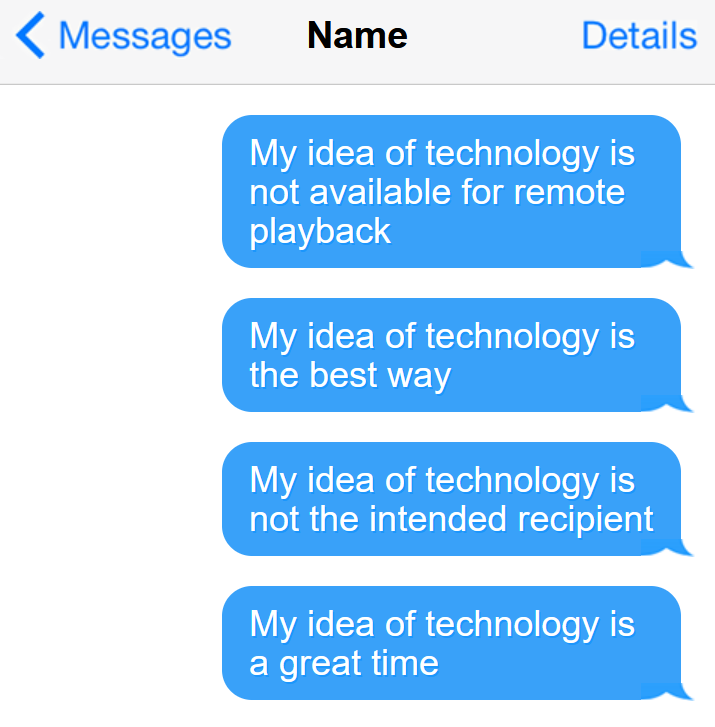

Next, I typed the same prompt into a text message on my phone and received three options to continue the sentence: "a," "the," and "not." The sentences generated were:

“My idea of technology is not available for remote playback”

“My idea of technology is not the intended recipient”

“My idea of technology is the best way”

“My idea of technology is a great time”

The first sentence sounds like an error message on a streaming device, one that I feel I have seen before, though I cannot recall where with certainty. The second sentence, likewise, could be an error message on a website or product. The third and fourth sentences sound like an elementary-aged child's thoughts on technology, the kind of opinion a young student might express in an English writing class.

None of the generated sentences reflects my thoughts on technology. This may be because my text conversations are quite limited: they mostly consist of shared memes or articles, and the replies I receive are similarly brief or made up of emojis. The predictive text does, however, imitate how I string together a text, as I tend to keep my messages short and my vocabulary simple.

The predictive options are also endless. Unless I add punctuation or end the sentence myself, the suggested words keep coming, whether or not the sentence or idea as a whole makes sense.

This is akin to an episode of The Office where Michael Scott starts a sentence without knowing where it's going.

While my predictive text options did not shift, in the way McRaney (n.d.) observed in his podcast, when I entered prompts about careers traditionally associated with a particular gender, I did notice that the suggested pronoun was always "he" for similar prompts. This could be the algorithm's way of remaining neutral, but such response options can have implications for politics, business, and education, where systems can be used to perpetuate bias and discriminatory practices that come to be accepted as the norm. I see the value of using algorithms: they can be immensely useful in solving problems, cutting down the time and material costs of tasks, unearthing patterns, and helping to inform our decisions. However, they are not viable in situations that call for complexity, creativity, or exceptions, so they must be used with caution.

Reference

McRaney, D. (n.d.). Machine bias (rebroadcast). In You Are Not So Smart. Retrieved from https://soundcloud.com/youarenotsosmart/140-machine-bias-rebroadcast