Though chatbots are by no means a new concept, their utility and popularity have exploded in recent years. This opens exciting new prospects for investment and rapidly emerging markets throughout education.

But this rapid development and proliferation also brings new risks and uncertainties. The use of chatbots in general carries a variety of ethical, environmental, and privacy risks related to their development, training, biases, and corporate control. For our purposes, though, we will highlight several key risks directly relevant to investing in this market.

Scaling Ceiling

“People call them scaling laws. That’s a misnomer. Like Moore’s law is a misnomer. Moore’s laws, scaling laws, they’re not laws of the universe. They’re empirical regularities. I am going to bet in favor of them continuing, but I’m not certain of that.”

Anthropic CEO Dario Amodei

As we discussed in the previous section, the blistering pace of advancement in the models that power contemporary chatbots isn’t guaranteed to continue in perpetuity. Whether, and when, this trend will change should be a key consideration for any investor entering the educational chatbot market. Will the knowledge of a More Knowledgeable Other (MKO) keep increasing while becoming cheaper and more accessible? Or has the technology reached a plateau that will require ventures to introduce new innovations in order to produce value?

This uncertainty makes companies that offer more than window dressing on top of an existing LLM API a less risky investment, because their value proposition does not depend on chatbot capabilities continuing to scale indefinitely.

Academic Integrity

As we have discussed, the typical contemporary chatbot is a generator, one primarily focused on generating answers to user queries. Its training allows these answers to be quite sophisticated, adaptive, and accurate on nearly any topic, and its output is often indistinguishable from human writing (I promise this page was written by a human!). It is therefore unsurprising that the temptation to misuse chatbots as a shortcut through learning exercises is high.

A recent MIT study found that using a chatbot for essay writing resulted in lower brain engagement and underperformance compared with writing without AI. In China, tech firms recently disabled access to their chatbots during the country’s university entrance exams. Regulatory pressure from educational institutions and governments over academic integrity will further elevate the risk of the chatbot market as a whole.

Educational chatbots that aren’t focused on delivering rote answers therefore carry inherently lower risk. An MKO chatbot that continuously prompts the learner and guides them carefully through the learning process is intended to elevate the learning experience, not degrade it.

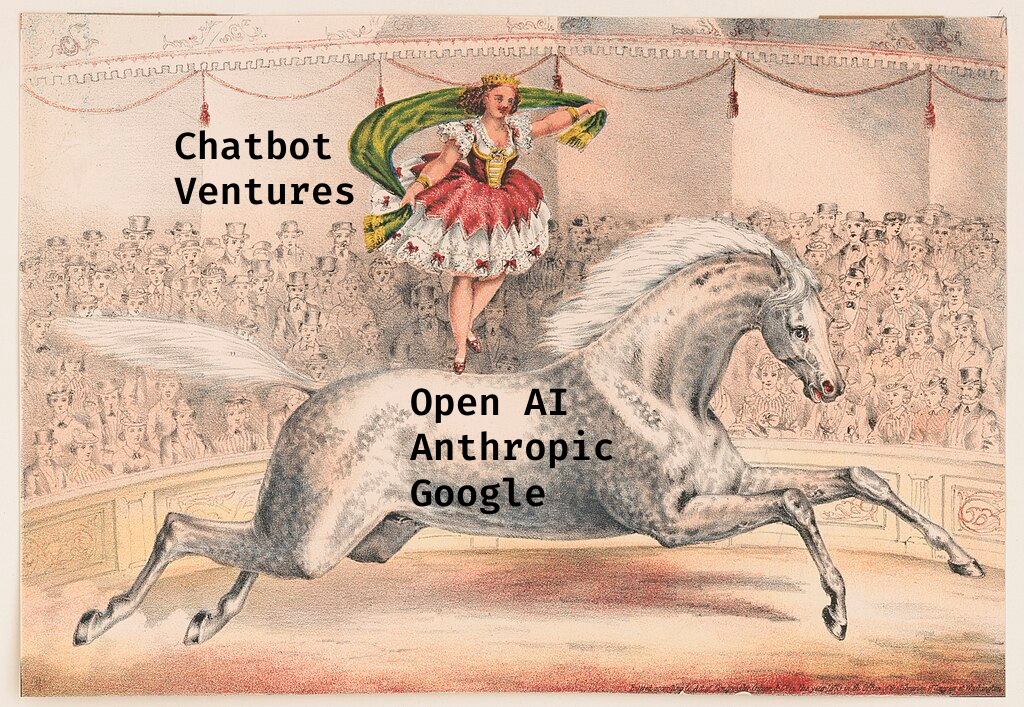

Reliance on Core Models

A venture in the educational chatbot market is typically either one of the major model developers themselves (OpenAI, Google, Anthropic, Meta, DeepSeek, etc.) or built directly atop one of their APIs. This is logical, as the investment and resources required to develop and run an LLM are astronomical. But like a circus performer tiptoeing on a horse, ventures relying on this technology are in a precarious position. Every pricing change, feature pivot, or regulatory adjustment is felt downstream by these chatbot ventures.

In some cases, the LLM companies themselves release products that compete directly with ventures built atop their ecosystems, often eclipsing them thanks to their existing market share. Educational chatbot ventures that model their products on educational theory should be nervously monitoring Google’s explorations with LearnLM.

After reading the risks above, which concern you most in your own work context? Why?
