Everyone comes with their own biases. Algorithms may be designed with the best intentions, but they are undoubtedly embedded with the biases of their creators. The biases I brought into the detain/release module were:

- I’ve watched every season of *The Good Wife*. I think the main character works in bail court in the last season.

- I’ve watched most, if not all, of John Oliver’s segments on prisons and detention.

- The song “What’s Your Story?” came to mind while I was listening to the different podcasts.

I don’t think this background makes me an expert, but this viewing history has made me more aware of bias and of an unjust, prejudiced judicial and policing system in which evidence is sometimes “lost” or “found” to get a case off the docket.

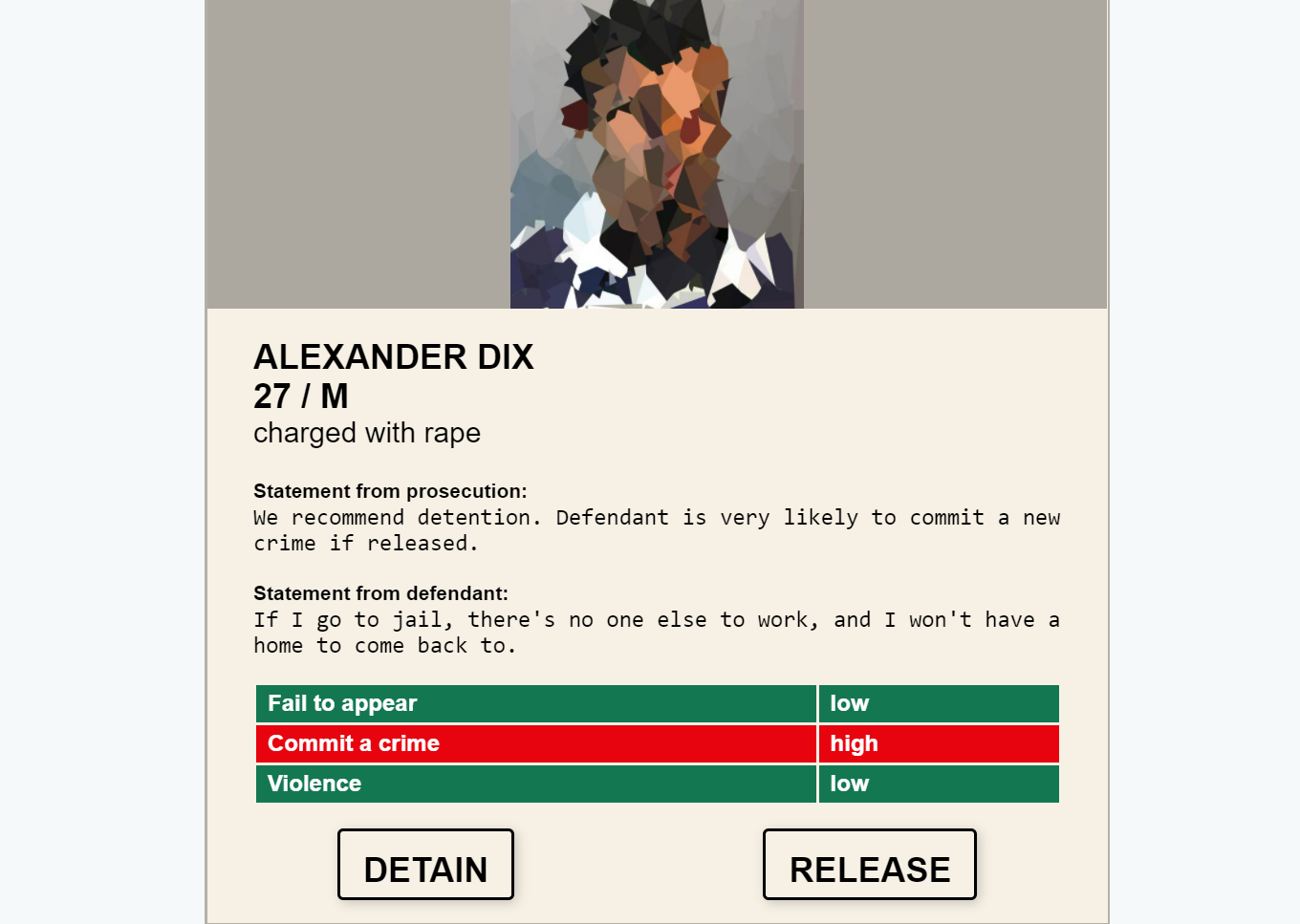

The charge is serious and sensitive. The information from the algorithm is confusing and reminds me of *To Kill a Mockingbird*, in which Tom Robinson is wrongly accused of rape. At the same time, there are plenty of news reports of rape victims’ claims being dismissed without good reason. I’d want to see the evidence against Alexander Dix. Why is he likely to appear and unlikely to be violent, yet likely to commit a crime? Wouldn’t a person who is likely to appear also be unlikely to commit a crime? Did the algorithm predict he’d commit a crime because of his skin colour? What kind of crime does the algorithm predict he’d commit? Shoplifting? Shoplifting is not something I condone, but why would he shoplift? It sounds like he has a family to support. If he is no longer there to support his family, isn’t it more likely that the people he leaves behind will suffer and perhaps resort to petty crime?

I think the problem with the algorithm is that it overlooks underlying issues that need to be solved, such as over-policing in certain areas and a lack of social support systems such as welfare and childcare. O’Neil (2017) notes that these issues are related and result in the poor being caught up in “digital dragnets”.

It’s not just adults who can become entangled in these algorithms. Learning management systems (LMSs), which more and more schools are adopting, can collect student data that can be used to identify at-risk students. As a teacher, I can use the data to confirm what I already know or to look into cases that surprise me: perhaps a student who did well in class did poorly on a test because of a stomachache or an argument. However, if this data were sent to the head of school or the school board, it would not show the wider picture that the classroom teacher is privy to. A report sent without teacher input could unnecessarily alarm and upset parents and students.

Algorithms do have their time and place. They definitely would have made my life easier when I was teaching 200 students online, but I would still have had to follow up with the students’ homeroom teachers to use that data effectively to evaluate and support them.

References

O’Neil, C. (2017, April 6). Justice in the age of big data. *Ideas.TED.com*. https://ideas.ted.com/justice-in-the-age-of-big-data/