“Jay, why did you scream?”

“I don’t know.”

“Think about it.”

“I thought that was your job.”

“What was happening before you screamed?”

“Well, B screamed first so I decided to too.”

“If B jumped off a bridge, would you do that too?”

“You sound like my teacher. This is really annoying; why do you have to go over everything I do?”

“Because you don’t.”

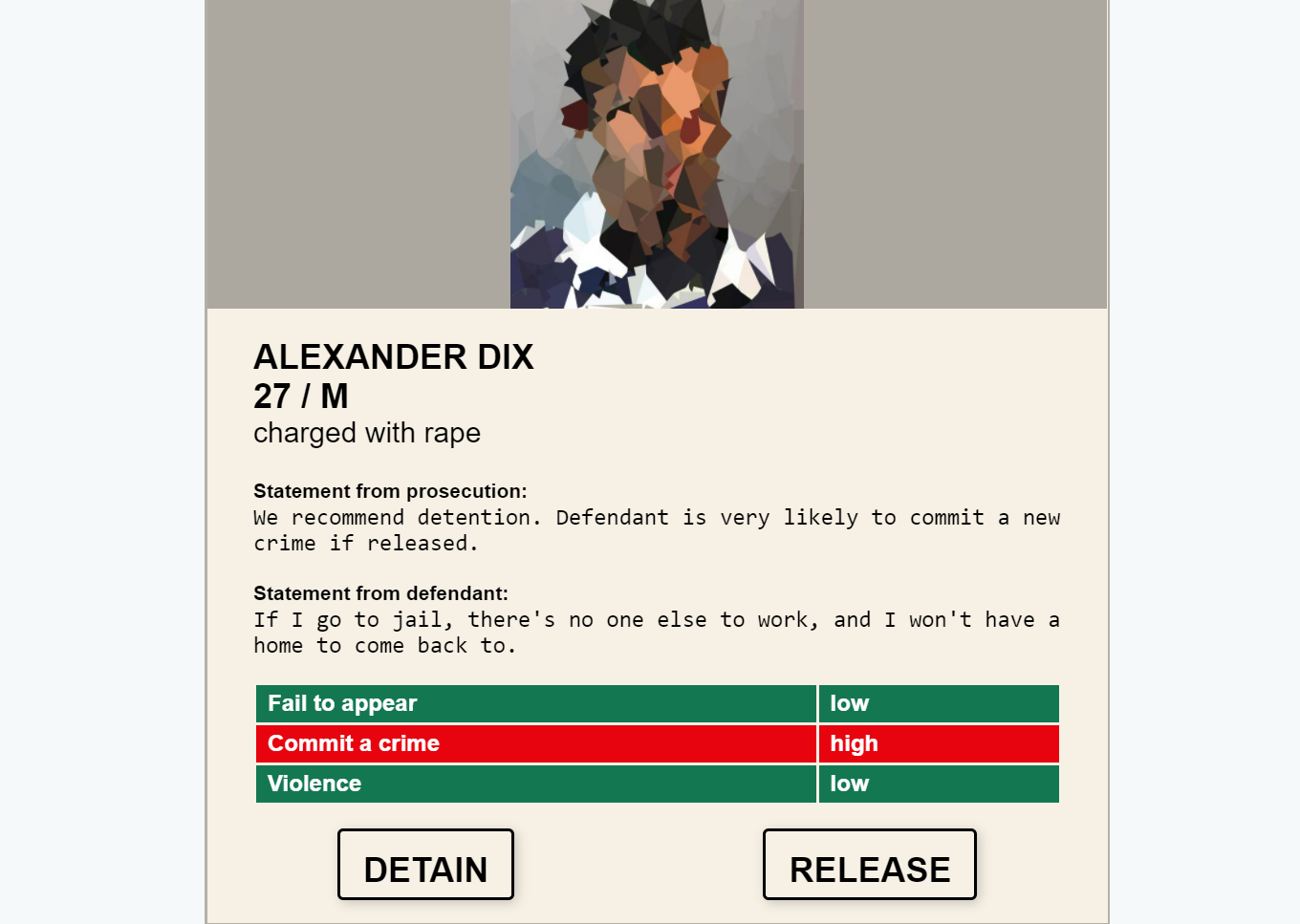

Dunne and Raby (2013) state that conceptual designs are more than just ideas; they are ideals as well. Moral philosopher Susan Neiman elaborates on this: “Ideals are not measured by whether they conform to reality; reality is judged by whether it lives up to ideals” (cited in Dunne & Raby, 2013, p. 12). Jay is an only child with busy, career-oriented parents who have become concerned about Jay’s emotional maturity. They have invested in a SmartWatch app that uses sensors to detect Jay’s emotional state and behaviour and prompts Jay to reflect on and regulate their emotions and actions. Dunne and Raby (2013) note that designers’ creations are made with the best intentions, often neglecting people’s worst tendencies. Their solution to this design flaw is to change “our values, beliefs, attitudes, and behavior” (Dunne & Raby, 2013, p. 2). The algorithms the app uses to determine behaviours and emotions are user-dependent, meaning the app will prompt Jay to input their emotions and what caused them.

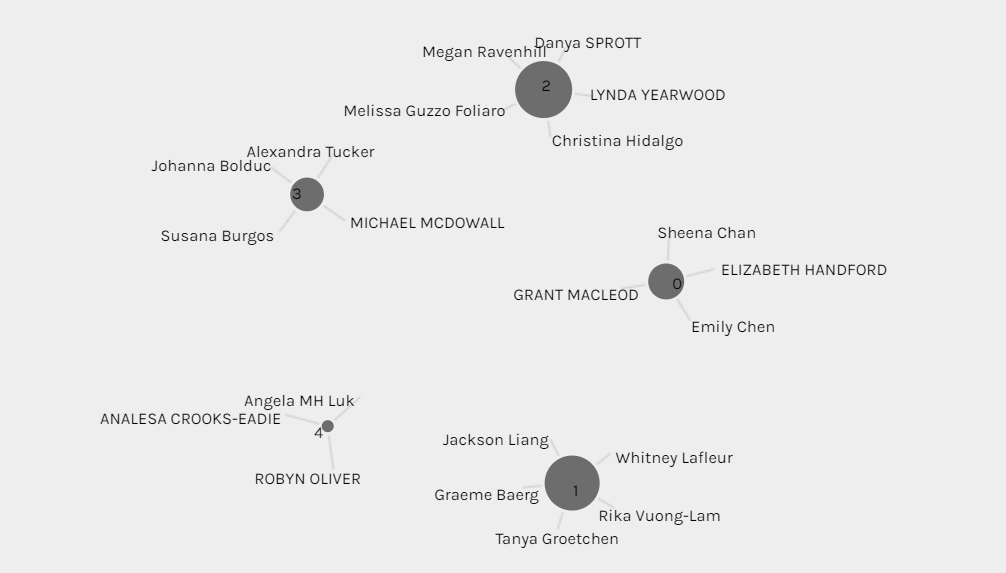

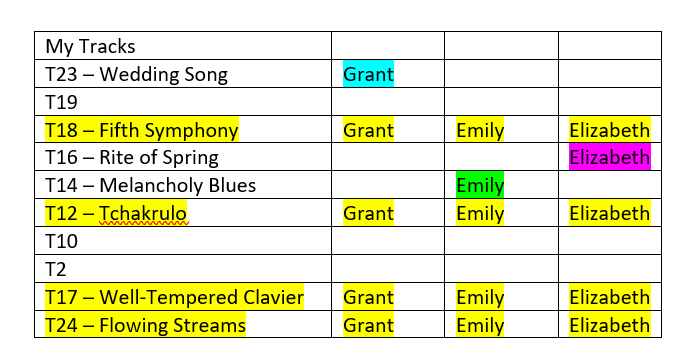

Meanwhile, Jay’s teacher is talking to herself, or is she? She’s having a discussion with her teaching app. The app goes through the curriculum and student data. From the time students enter the school, their learning progress has been input into a database. This data, plus lesson plans taken from several online resources, is fed into the app, which then uses the information to plan lessons around the teacher’s learning objectives. Initially, teachers were concerned that their jobs and/or pay would be cut, but as Dr. Shannon Vallor (2018) observed, “AI is not ready for solo flight” so “we [people] are still the responsible agents.” The purpose of this app is to free up non-contact time during school hours so teachers can evaluate student work, focus on presenting lessons rather than creating them, mentor and coach new teachers, and, of course, input students’ learning progress into the database. Teachers are still involved and engaged in the lesson planning process, since the app uses the data they input to determine whether the class should move on to the next learning objectives. The app suggests different activities and lesson formats for teachers to choose from, with the option to make modifications. The teacher’s role is moving from lesson planner to evaluator and mentor. Dr. Vallor (2018) points out that the “future of human-AI partnership, one that serves and enriches human lives, won’t happen organically; it will need to be a choice we make, to improve our machines by improving ourselves.”

References

Dunne, A. & Raby, F. (2013). Speculative Everything: Design, Fiction, and Social Dreaming. Cambridge: The MIT Press. Retrieved August 30, 2019, from Project MUSE database.

Vallor, S. (2018, Nov 6). Lessons from the AI mirror Shannon Vallor [Video]. YouTube. https://www.youtube.com/watch?v=40UbpSoYN4k&t=872s