Hi!

I believe we are supposed to share our Assignment 02 Option 02 with the rest of the class, but I can’t find another space so I will share here: https://etec533thecaseofthemissingbees.weebly.com/

Enjoy!

Kari

ETEC 533 – Technology in the Mathematics and Science Classroom

I understand distributed learning to be the idea that knowledge construction does not occur from one source but from experiencing and engaging with many different sources of information. It is through interaction with many different, and sometimes conflicting, sources that a student can build a sophisticated understanding of a topic. The internet provides an excellent opportunity to expose students to a deep world of distributed learning. As educators, our role is to help guide students through what they can find online and help them assess whether the content is appropriate, relevant, etc. But even in this action students learn. In my other course we are developing a lesson on digital information literacy, where we teach students how to find and evaluate online sources across a number of dimensions. I believe that, regardless of the subject, a lesson on digital information literacy is necessary when engaging students in distributed learning through online digital resources. The implications of having access to just-in-time (JIT) and on-demand content are one of the major reasons why I think such a lesson is so important. With so much information at a student’s fingertips, it is essential for them to learn how to evaluate content and determine whether it should be consumed. While exposure to multiple viewpoints is important, understanding that not all published content deserves the same level of consideration is an important lesson to learn, and to learn early.

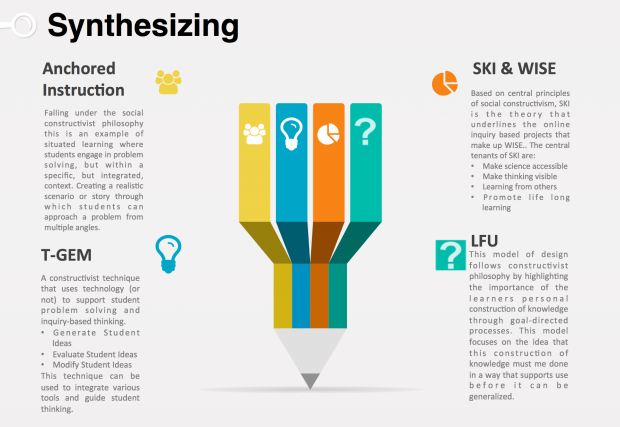

The image above doesn’t display well. To view it, download the PDF of Synthesizing STEM.

Looking at these techniques, design models, frameworks and strategies together, I noticed that they complement each other and could be used together in the design and development of a rich, technology-enhanced learning environment. I would almost argue that a well-designed module/lesson/course would include all of them simultaneously. There is a lot of overlap across these components, mostly around constructivist philosophy, a problem-solving framework and guided inquiry, and the areas where they differ complement the rest. In the end, the final product is certainly more than the sum of its parts.

In my design of Assignment 2, I employ each of these components in some form across my lesson, from building a case study that creates a situated learning context to having students work through the GEM technique to reframe their thinking after detailed exploration. I think most teachers already do incorporate a lot of these ideas into their teaching, even if they don’t know that they do or they use techniques or models that are nearly interchangeable!

While reading Edelson’s (2001) explanation and rationale for the Learning-for-Use (LfU) design model, I recognized a number of elements that I am using while building my final project for this course, a lesson on climate change geared to upper-year environmental science students. I used the backward design model to inform my lesson development, but I can see many similarities between these models, specifically around their shared focus on learning objectives.

As Edelson (2001) describes:

“from the perspective of design, the LfU model articulates the requirements that a set of learning activities must meet to achieve particular learning objectives. The hypothesis embodied by the LfU model is that a designer must create activities for each learning objective that effectively achieve all three steps in learning for use for that objective. To support this design process, the LfU model describes different processes that can fulfill the requirements of each step”.

I have developed a series of mini-lessons, each aligned to a specific learning objective. Each mini-lesson includes activities that demonstrate each step in the LfU model:

Motivate (An activity that creates a demand for knowledge). I began each mini-lesson with a guiding question that activates prior knowledge but also allows students to acknowledge the knowledge areas that are missing.

E.g., What are the physical mechanisms that are causing global warming?

Construct (An activity that provides learners with direct experience of novel phenomena or with direct/indirect communication from others). Each mini-lesson then directed students to a web-based experience. The experiences differ depending on the mini-lesson, from completing activities (direct experience) to watching a video (direct communication).

E.g., Complete NASA’s interactive lesson on Introduction to Earth’s Dynamically Changing Climate

Refine (An activity that enables learners to apply or reflect on their knowledge in meaningful ways). Each mini-lesson includes an application-based group activity that allows students to demonstrate how they have followed the guiding question inquiry and built on their knowledge. I chose to incorporate a peer-to-peer component in order to activate social constructivism, an opportunity to learn from each other.

E.g., After going through all the steps of this interactive website, explore other resources that review the science behind climate change. What other resources would complement this module? Be prepared to select one new resource that you think does a good job of presenting the science in an efficient manner. Make sure to collect the resources you found in a working bibliography using APA formatting. At the end of this session, be ready to discuss with your working group how you would introduce and teach the science of global warming if you were tasked to teach this lesson. Share the resource you selected and why you think it does a good job of explaining the science.

Students move across a series of mini-lessons all built in this way allowing them to connect themes and reinforce ideas across the lessons. The end result is a synthesis of knowledge to answer a main question. As this lesson uses a guided inquiry philosophy the design model of LfU works very well!

References

Edelson, D. C. (2001). Learning-for-use: A framework for the design of technology-supported inquiry activities. Journal of Research in Science Teaching, 38(3), 355-385.

I chose the ‘Designing an Amusement Park’ graphing challenge as I have fond memories of riding roller coasters with my dad when I was a kid. Roller coasters are an excellent way to help students connect concepts of physics with an experience that they are familiar with, i.e., making physics accessible, which is a main tenet of WISE (Linn, Clark, & Slotta, 2003). This inquiry project tries to connect graph interpretation with concepts of motion. I would customize this activity to help teach students how to interpret graphs in a Grade 6-8 physics classroom.

This activity starts out trying to connect speed/motion with roller coaster thrill vs. safety. I believe this is meant to activate students’ prior knowledge about motion, but the link is tenuous and I am not sure that students (Grade 6-8) would see the connection. As this lesson is supposed to be about graph interpretation, I would adjust it to include some sample graphs that students can try to interpret. The lesson adds a secondary component around designing for safety vs. thrill, perhaps as a way to make the concept of speed accessible, but it doesn’t really make that connection, so I would improve this so that students are more likely to connect the dots. I would provide embedded links about motion and speed and their effects on the human body. By doing this I would provide more of a scaffold to support knowledge integration (Linn, Clark, & Slotta, 2003).

Students are then taken through the main activity, which is to create graphs that will translate to the action of the roller coaster. Presumably the students should be seeing how the changes in the graph affect the roller coaster speed and direction. However the graph building is difficult and clumsy and the effect it has on the speed/motion of the roller coaster can be difficult to see.

The central idea is to have a simulation translate into a graph to help students understand that a graph relays information (i.e., tells a story), but I feel that this would have more impact with a couple of minor adjustments:

This lesson does provide students with the opportunity to engage in collaboration and knowledge sharing, which I thought worked well, although the guiding questions in this area were a tad vague and introduced a new observation (head movement) that students hadn’t been primed to look for or connect with the ideas in this lesson. This piece may have related to the safety questions of this activity, but because there is a long gap between asking students to consider safety and this question of head motion, I don’t know if a Grade 6-8 student would grasp the connection. This would be taken care of by the adjustments I listed earlier.

I would expand this lesson to include a more constructivist component by asking students to consider other examples where motion has an effect on the human body and ask them to hypothesize what the graphs would look like. Some examples could be driving in the car with their parents, riding their bike, watching a manned rocket, riding in an airplane, etc. Depending on the scope of the lesson this could transition to a secondary inquiry project that looked at graphs that compared speeds/motions of different vehicles and their effect on the human body.

Resources

Linn, M., Clark, D., & Slotta, J. (2003). Wise design for knowledge integration. Science Education, 87(4), 517-538.

I don’t have the same classroom experience as others taking this course but I can speak to this topic quite well as I was involved in the integration of a technology teaching tool across much of the first and second year undergrad math curriculum in a higher education institution.

The major need that faculty/teachers were expressing was that students were not getting enough formative or realistic feedback about their abilities prior to midterms and finals. In large undergrad classes there is no realistic way to provide feedback to all students in a timely and consistent manner. Yes, there are tutorials, but one of the issues was the length of time it took to return quizzes and the huge discrepancy in the quality of feedback students received. As a result students, especially struggling students, didn’t have a realistic picture of what they knew and didn’t know.

While I didn’t realize it at the time, I can see now that devising this solution was an application of the TPCK framework.

To determine the solution for this problem, I had to understand the pedagogical issues underlying the student needs. In this case they were timely feedback and just-in-time awareness of misconceptions. I also knew from my understanding of the content that ample opportunities for practice were required to master the procedures being taught.

Understanding those two sides, I could then select a technology tool that would support and align with these goals. There are various tools available to support math learning; however, not all would fit the needs of this particular scenario. Knowing the technology’s settings, how it can be configured to support various learning situations, its limitations, etc. is important in allowing you to select and integrate the tool to support the learning goals.

For my particular example, we implemented a gated practice/quiz setup with embedded help/hints to reduce unaddressed misconceptions and overconfidence prior to midterms/finals. Students would use the technology to practice questions. If they got a question wrong, they knew right away. They could then access hints or help tools to learn/relearn how to approach the question (question values changed after they viewed these help/hints, so they were never given the right answer). Once they achieved a predetermined score on their ‘homework’, they unlocked the quiz (for marks), on which they had only one chance. If they did poorly on that quiz, they could go back and practice the homework some more (or go to the help centre/instructor for more help). We saw a significant improvement in attendance at the help centre and in midterm and final scores.
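The gating logic described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the tool that was actually used: the class name `GatedQuiz`, the five-question practice set and the 0.8 unlock threshold are all assumptions made for the example, and the hint feature (regenerating question values after a hint is viewed) is left out.

```python
from dataclasses import dataclass, field


@dataclass
class GatedQuiz:
    """Hypothetical model of one student's practice set and the quiz it gates."""

    total_questions: int = 5    # assumed size of the practice ('homework') set
    unlock_score: float = 0.8   # assumed threshold; the post gives no number
    solved: set = field(default_factory=set)
    quiz_attempted: bool = False

    def answer_practice(self, question_id: int, is_correct: bool) -> bool:
        """Record a practice attempt; the return value is the immediate feedback."""
        if is_correct:
            self.solved.add(question_id)
        return is_correct

    def practice_score(self) -> float:
        """Fraction of the practice set answered correctly so far."""
        return len(self.solved) / self.total_questions

    def quiz_unlocked(self) -> bool:
        """The quiz opens only once the practice score reaches the threshold."""
        return self.practice_score() >= self.unlock_score

    def start_quiz(self) -> None:
        """Start the for-marks quiz, enforcing the gate and the single attempt."""
        if not self.quiz_unlocked():
            raise PermissionError("Practice score is below the unlock threshold")
        if self.quiz_attempted:
            raise PermissionError("The quiz allows only one attempt")
        self.quiz_attempted = True
```

A student can retry practice questions as often as needed, since only correct answers are stored. Calling `start_quiz()` before the threshold is reached, or a second time, raises an error, which mirrors the "practice first, one chance on the quiz" design.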

Without understanding the content, the pedagogical strategy, and the technology tool I wouldn’t have been able to devise this strategy that supported learner goals.

Designers of learning experiences should first examine what the overall objective(s) of the learning experience are supposed to be, then determine the best way (depending on the audience, amount of time, budget, etc.) to assess the learner’s achievement of those objective(s). Once appropriate assessment has been designed, resources, materials, activities, etc. should be collected and presented to learners in a way that lets them work logically through the material AND see the connection to the overall goal. Designing in this way is called backward design; it is informed by Understanding by Design theory and focuses on learner outcomes first (Wiggins & McTighe, 2005). This methodology is particularly good in TELEs as it helps the designer ignore technology tools that are all flash and no substance and instead identify the technologies that will help students achieve the outcomes successfully.

I apply this methodology when working with faculty on the design of their online courses. We focus on the outcomes and how we can measure their achievement first, before looking at what technology will be used to support the learning. I have found that this helps to keep the student at the centre of the learning design. When I find a tech tool that I like the look of, I first examine my learning objectives (LOs) to see if it makes sense to employ this tool in my design.

Excellent description and example of backward design

Wiggins, G., & McTighe, J. (2005). Understanding by design (2nd expanded ed.). Alexandria, VA: ASCD.

For my interview I spoke with a friend who recently graduated from the Bachelor of Education program at UVIC and has gotten a job as a substitute teacher with the North Vancouver School Board. He teaches secondary-level PE and Science courses. As he is a new teacher, I thought it would be a great opportunity to discuss how his experience of being taught how to use technology in the science classroom compares with the reality of using technology in the classroom.

While our conversation inevitably deviated from this route, I found that he had a number of interesting insights about the reality of teaching with technology.

Purpose

Alex described his inquiry project, in which they had to ask a specific question about instruction and technology and then develop it: is technology useful, what are the problems around using technology, and how can these be solved? Within the scope of this project he researched a number of different apps that could be used within the classroom. He was specifically drawn to the virtual dissection apps as they solved a specific need inside the classroom: the removal of live/real dissections from the curriculum. As Alex saw it, these apps gave him a tool to provide a simulated experience to his students. In many ways he found that these simulations would be more beneficial than the real thing as they provide students with a standardized experience. However, he also found real challenges in using this sort of technology, as many of the existing lesson plans that align with the provincial curriculum don’t fit the content that the virtual dissections highlight or use. So Alex found that in many cases, while the tech itself had implicit value, it would take significant rewriting of lesson plans to make some of these tools useful or valuable. It brought to mind the importance of establishing purpose before choosing the technology.

Preparation

One of the considerations that Alex pointed out a number of times in our conversation was the importance of preparation when using any tech in his courses. Alex introduced me to a quizzing app called Kahoot that he and a number of other in-service teachers use across North Vancouver. Alex uses this tool mostly for lesson review. I was interested in hearing whether he was able to use it, or other tools like it, in a more improvised scenario, for example launching a quiz in the middle of a lesson to make sure that everyone was on the same page or to re-engage a distracted/unmotivated classroom. However, Alex was quick to point out the importance of preparation: the questions that he uses through this tool are carefully thought out to review specific content within the lesson, and this is aligned with the lesson plan. This makes sense; with the number of variables coming at teachers within the classroom, preparation is key. However, I wonder how this response would differ after a couple more years of experience in the classroom!

Distraction

Finally, we talked about how he has overcome some of the challenges he has faced when using technology in the classroom, specifically students who are distracted by the temptation of the multiverse that is the Internet, accessing games, social media, news, etc. when they should be focusing on the assigned task. As we all know, this is one of the real challenges faced by any educator when introducing an internet-capable device into the classroom. Alex talked about how these tools don’t have any limiters placed on them (no browser lockdowns, etc.) that could help police student use. Rather, he finds himself wandering the classroom, catching students and having to close down distractor windows himself (not the best use of his time). Alex’s solution when the classroom is getting out of hand with distractions is to remove the distractor, saying that if students are not actually using the technology/tool for the purpose that it was intended, then they don’t need to use it at all. While this is certainly a strategy, I wonder if he is missing the real cause: that students are not engaged in the activity that they are using the tool for. Is this less an indicator of classroom management and more an indicator of a lesson that needs to be re-tooled?

One final thing that Alex brought up in this interview that I thought was interesting was the impact that school budgets and administrative decisions have on what he is able to do in the classroom. Alex used the example of a school that had the budget to purchase new technology for the classroom. The decision was between tablets and laptops. Each tool has its own benefits and drawbacks, and the decision to go either way will limit what teachers can do in the classroom. Some strategies don’t play well on a laptop (think AR/VR) or on a tablet (word processing). So, at a very high level, the technology that is available within your school can shape how you teach in the classroom.

While others have discussed addressing higher level thinking with their students I also think technology can play a solid role in formative assessment and helping students build strong foundations.

Perhaps digital technologies can support higher-order thinking by first providing a simple means for students to practice basic skills and address their errors and misconceptions in real time. Would it then be easier to weave in more complex, higher-order thinking?

I designed a digital literacy course for a group of ECE instructors in Nigeria. I had a lot of really cool ideas about how to address plagiarism and develop digital media skills by building a digital story, etc. However, when I got in front of this class, we spent a solid hour opening up Microsoft Word and saving a file. Just because the technology is there and CAN be used to meet higher goals doesn’t always mean that this is the first place it should be used.

Jenkins (1990) makes an interesting statement about the fallacy of the tabula rasa assumption in teaching: that students come to you as a blank slate, ready to have your teachings fill their heads with the correct information. Students and their minds are not kept in vacuums, ready to receive information when they enter the classroom; rather, they bring with them a slew of partially formed ideas and impressions. Depending on the age of the student, you often have to contend with the teachings of the other teachers/faculty/parents/peers that have influenced what the student brings with them. In the video, Heather demonstrated this by describing her ideas of a wonky orbit path. What I found interesting was that part of Heather’s misconception was accidentally transmitted by a misleading diagram in a textbook.

This led me to think about how often we as educators are unintentionally the source of student misconceptions. I am reminded of faculty who would point out diagrams, pictures, and narrative in textbooks that, in their effort to simplify difficult concepts, simplified them to the point of error. In other cases, diagrams that attempted to engage the viewer with colour and annotation missed the mark and instead became confusing jumbles of nonsense. This relates to higher education, but think of all the different sources of information that kids (K-12) consume and how these can contribute to the development of their misconceptions. For example, ‘Magic School Bus’ was a popular kids’ show back in the day. For the most part the science discussed in this show is pretty solid, but some inaccuracies are introduced which, since the medium is so engaging, have a high likelihood of sticking. For example, I still thought planets were balls of highly compressed gas... wrong. Watch this short video that points out these inaccuracies:

Top 10 Lessons The Magic School Bus Got WRONG – (Tooned Up S2 E1)

One way that we can help students address misconceptions using technology is through stop-motion or slow-motion videos. Schwessinger (2015) discusses her use of slowmation to help students overcome misconceptions in physical sciences. In slowmation, the creator produces a video or animation of an event by sequencing thousands of pictures. The claim is that this technique helps students overcome their misconceptions because they are forced to revisit the concepts so many times and because it provides them with ‘hands on’ time.

For those interested in reading about using slowmation in practice I would highly recommend reading Schwessinger’s paper. It also has an excellent overview of student misconceptions in science.

References

Confrey, J. (1990). A review of the research on student conceptions in mathematics, science, and programming. Review of Research in Education, 16, 3-56.

Schwessinger, S. (2015). Slowmation: Helping students address their misconceptions in physical science. Doctoral Projects, Masters Plan B, and Related Works. Paper 1. http://repository.uwyo.edu/plan_b/1