Cognitive Learning Theory Renaissance

I found this week’s readings to be quite engaging, as they were mostly new information for me. I mean, I’ve always felt that there was a clear benefit to having students physically engage with content in order to solidify their learning, but I’ve never had it explained to me in the detailed and justified way it was explained by Winn (2003). I learned that cognitive learning theories fell out of favour for some time and were essentially replaced by constructivist and social learning theory approaches. Their downside seemed to be how they segregated the brain’s “internal, cerebral activity” (Winn, 2003) from the immediate environment.

Well, it turns out that our internal, cerebral activities are inextricably linked to external, “embodied” activities; two seeming opposites engaged in an endless, reciprocal dance named “dynamic adaptation”, which results in learning. These concepts are what engaged me: learning is not only the result of internally generated knowledge structures but can also be described as a series of environmentally triggered “distinctions” that pressure us to adapt. Perhaps even more fascinating is the rabbit hole that opens once you start to consider learning as being fundamentally linked to environment. This means that everyone’s learning, or perceived world, is literally unique, shaped by their environment and naturally varied experiences as well as genetics, while being constrained by sensory limitations (all essentially related to the concept of “Umwelt”).

Environment guides Learning

What I found perhaps most impressive about Winn’s writing was the clarity of the explanations of how learning can be guided by the environment itself. There are four stages:

- Declare a Break
  - Activity is somehow interrupted by noticing something new or unaccounted for

- Draw a Distinction
  - Sorting the new from the familiar

- Ground the Distinction
  - Integrate the new distinction into the existing knowledge network (or, if it defies deeply-rooted beliefs, it will simply be memorized then forgotten – sound familiar??)

- Embody the Distinction
  - The new distinction is applied to solve a problem

Artificial environments (e.g. video games, VR) can allow us to go beyond scaffolding (such as seen in SKI/WISE) and embed pedagogical strategies into the environment itself by understanding these four stages. I mean, why not? Rarely can we design every aspect of our real-world environments, but we certainly can in video games and VR experiences. Of course, not everyone is a game designer, but most of us could manage to create a Virtual Field Trip, for example. The experiences could be designed so that they force students to create a “series of new distinctions” which could lead them to understanding whole environments (Winn, 2003). That is extremely powerful, especially for students who could never visit the environment in person.

A Variety of Applications

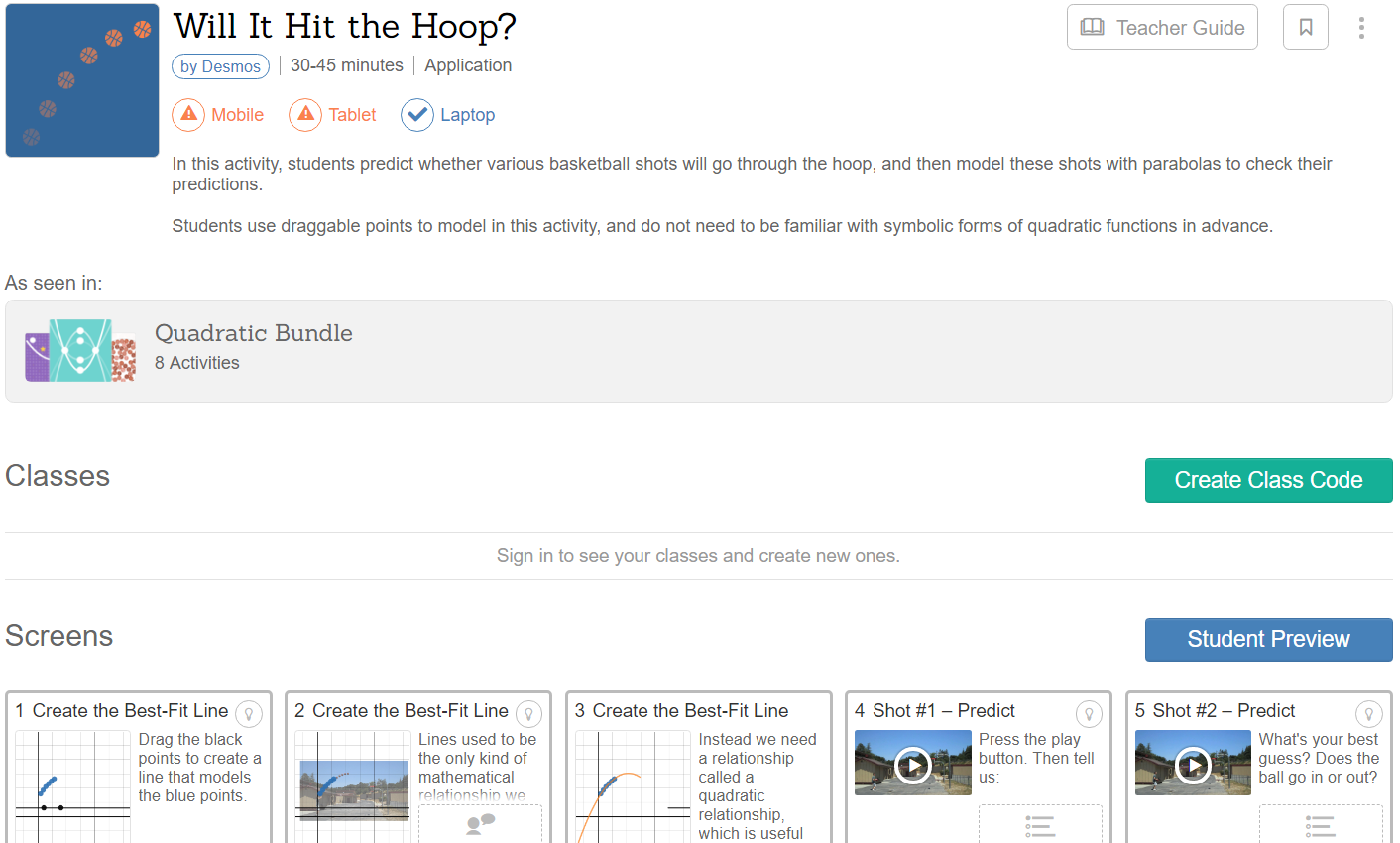

I think that even topics like quadratic equations and parabolas could benefit from this embodied learning approach. This could look like anything from a teacher designing a “tactile” activity in Activity Builder on Desmos to leveraging tools like those found on GeoGebra or NCTM (e.g. sliders, tap-and-drag functionalities) for a more interactive, embodied experience. Tech allows us to explore abstract concepts in an embodied way, which is perhaps one of its greatest affordances.

Affordances of VLEs

All this thinking led me to explore more recent papers on the subject of virtual environments. It turns out significant research has been done on VLEs (Virtual Learning Environments). For example, I came across a paper by Dalgarno and Lee (2010) that identified five affordances (or benefits) of VLEs that translate directly into learning benefits:

- spatial knowledge representation,

- experiential learning,

- engagement,

- contextual learning, and

- collaborative learning.

These probably come as little to no surprise to most of us, but it is certainly nice to have them listed so simply and to know that significant research has determined their effectiveness.

VLE’s Unique Characteristics

Dalgarno and Lee’s work also argued that 3D VLEs have two unique characteristics, “representational fidelity” and “learner interaction”, both of which I feel are particularly essential to both video games and VR design.

Unique Characteristics of 3D VLEs

| Representational Fidelity | Learner Interaction |
| --- | --- |
| - Realistic display of environment<br>- Smooth display of view changes and object motion (e.g. high frame rate)<br>- Consistency of object behaviour (e.g. realistic physics)<br>- User representation (e.g. avatars)<br>- Spatial audio (e.g. 7.1 surround)<br>- Kinesthetic and tactile force feedback (e.g. rumble functionality) | - Embodied actions (e.g. physical manipulatives in a virtual environment)<br>- Embodied verbal and non-verbal communication (e.g. chat functionality, online/local multiplay)<br>- Control of environmental attributes and behaviour (e.g. customization interface)<br>- Construction/scripting of objects and behaviours (e.g. programmed functionalities are possible and at the whim of the programmer/designer) |

(all examples in brackets above are my own contributions)

Basically, when these two characteristics mingle, deeper learning experiences are bound to take place as they leverage the five affordances that translate directly into learning benefits. However… there’s a limit! There is an optimum level of interaction between them that maximizes learning; going “beyond the optimum” can actually lead to limited or negative returns with respect to their learning benefits (Fowler, 2015). Pretty crazy, hey? I guess this is a case of “too much of a good thing”?

Questions Linger

A few questions still linger as I come down from learning all this new stuff. Perhaps you can help? 🙂

- If contact with environment can trigger particular genetic “programs”, does this mean that genes also determine student learning capabilities? If that’s the case, is there some way we can engineer environments to “trigger the right programs”, while avoiding the “wrong” programs?

- I referenced manipulation of sliders earlier when referencing a VLE. Does this type of interaction actually “count” as physical interaction, or does embodied learning need to incorporate gross motor skills, for example?

- How does one determine the optimum level of interaction between the representational fidelity and learner interaction of a VLE?
  - I mean, I feel like The Legend of Zelda: Breath of the Wild does a pretty dang great job of mixing these two and teaching the player without using any words, but how did they find that exact sweet spot without leading to negative returns?

- Bonus Question: I’ve never participated in a distance/online course that takes full advantage of any of the affordances of TELEs and VLEs. Has anyone else?

Thanks for reading, and apologies for being late here. Kinda struggling to keep my head above water at the moment.

Scott

References

Dalgarno, B., & Lee, M. (2010). What are the learning affordances of 3-D virtual environments? British Journal of Educational Technology, 41(1), 10-32.

Fowler, C. (2015). Virtual reality and learning: Where is the pedagogy? British Journal of Educational Technology, 46(2), 412-422.

Winn, W. (2003). Learning in artificial environments: Embodiment, embeddedness and dynamic adaptation. Technology, Instruction, Cognition and Learning, 1(1), 87-114.

Appendix

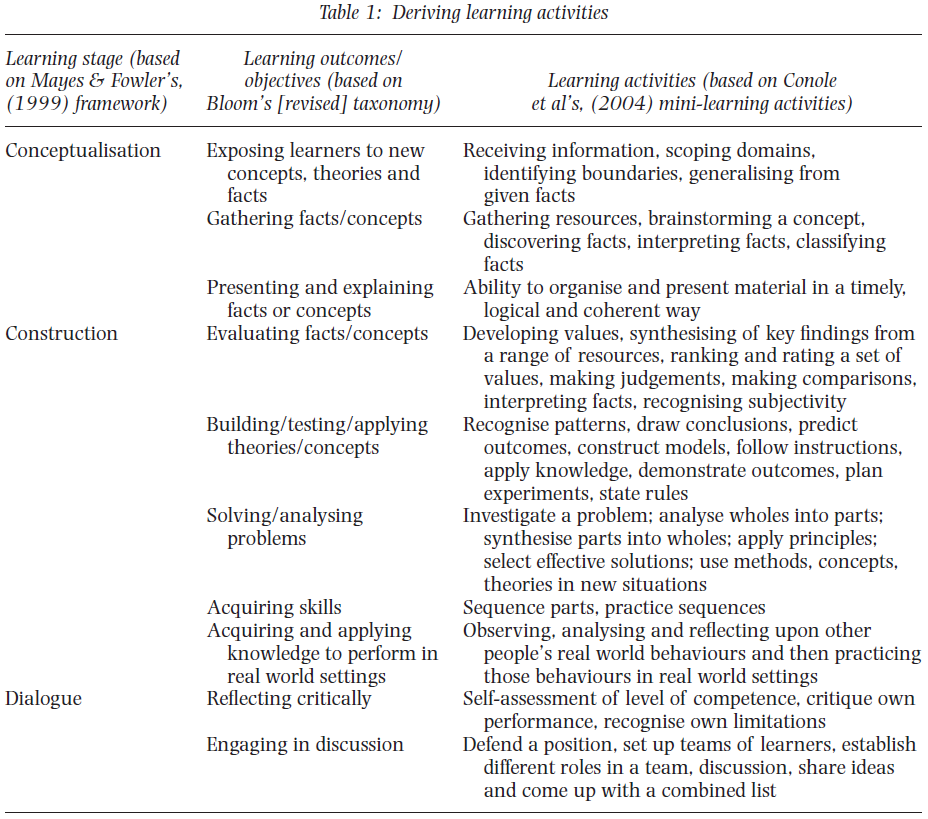

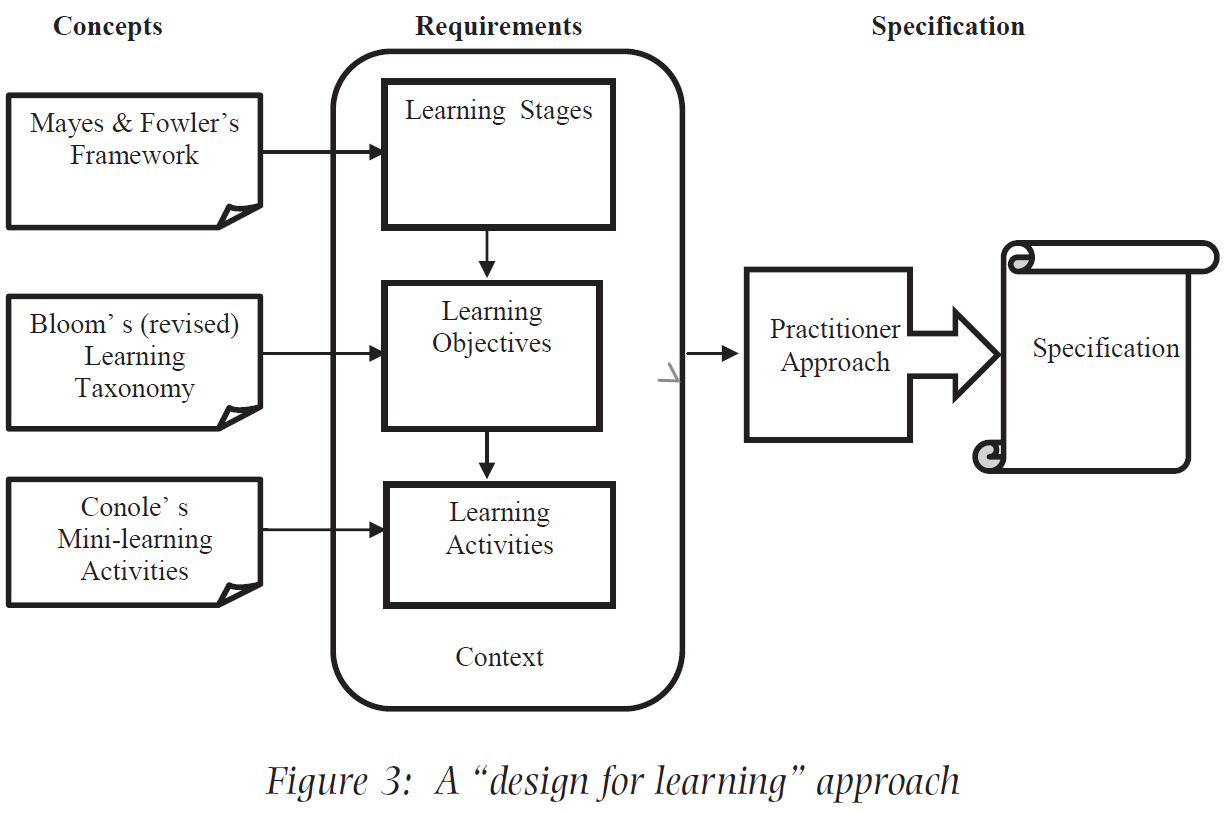

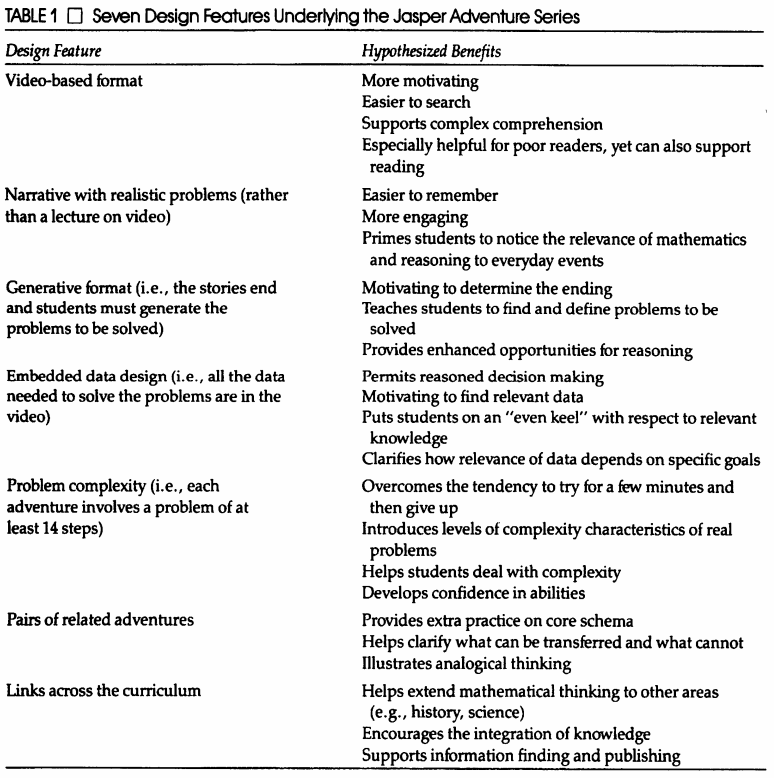

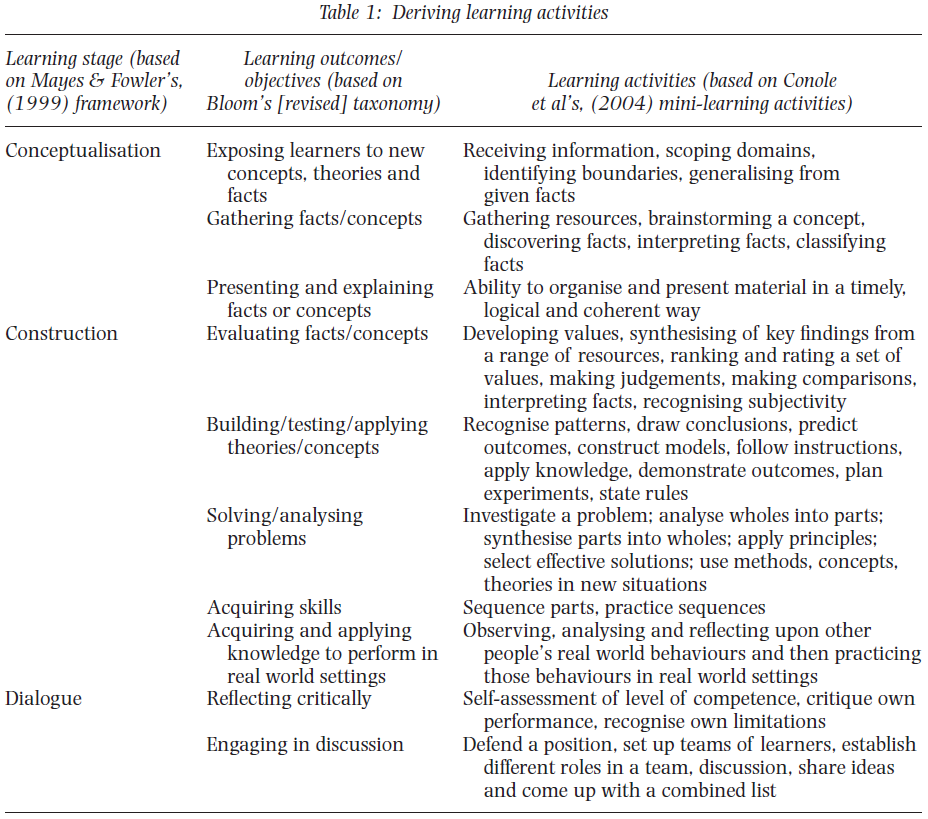

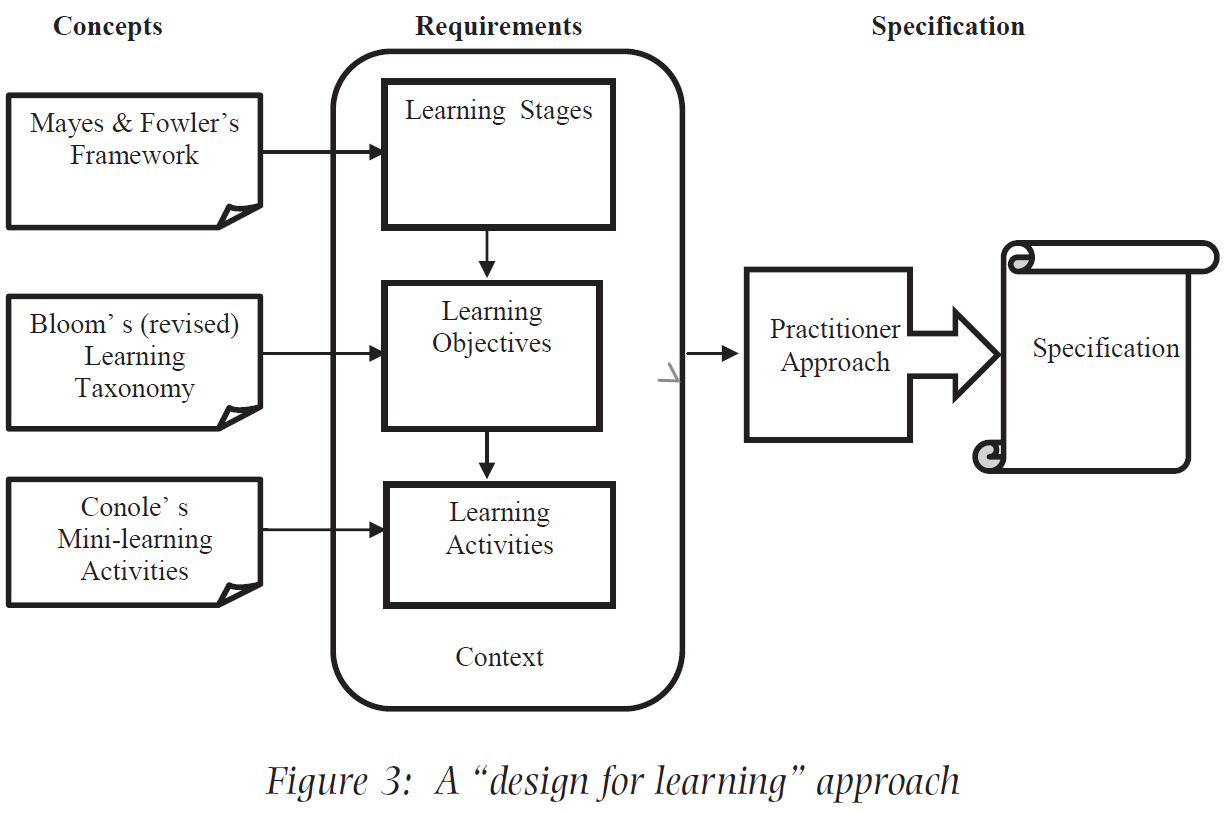

There were a few extra things I came across that were very interesting but would have made the body of the post even longer than it already was. I still wanted to share them because of how useful they seem. Mainly there’s a Table, a Figure and supporting Context, which all relate to a “design for learning” framework for deriving appropriate learning activities. In essence, these resources can help teachers clearly define the learning context before learning takes place in order to maximize effectiveness.

Specifically, the learning context should include/combine variables such as:

- Locus of control (teacher or learner)

- Group dynamics (individual or group)

- Teacher dynamics (one-to-one, one-to-many, many-to-many)

- Activity or task authenticity (realistic or not realistic)

- Level of interactivity (high, medium, or low)

- Source of information (social, reflection, informational, experiential)

The idea is that combining these variables based on the requirements of a given learning context can help a teacher determine the most appropriate teaching and learning approach.

Finally, Table 1 and Figure 3 below (Fowler, 2015) are meant to help derive learning activities that will take place within this clearly defined learning context. I hope you find them useful!

(Fowler, 2015)

(Fowler, 2015)