Task 7: Mode-Bending

Sonifying Task 1: What’s In Your Bag?

For this task I tried something very different (different to me, anyway): sonification.

Keeping Task 7 in mind as I read the New London Group’s A Pedagogy of Multiliteracies: Designing Social Futures, the following passage caught my attention: “The Redesigned is founded on historically and culturally received patterns of meaning. At the same time, it is the unique product of human agency: a transformed meaning” (New London Group, 1996, p. 76; emphasis added). I wanted to transform the meaning of Task 1, but I also wanted to retain its original text and story. How could I do both? I decided to use the New London Group’s idea of metalanguage in order to describe Task 1 “….in various realms. These include the textual and the visual, as well as the multimodal relations between the different meaning-making processes that are now so critical in media texts and the texts of electronic media” (New London Group, 1996, p. 77).

Hello Google!

I started Googling various combinations of: “text”, “analysis”, “tools”, “visualization”, “open source”, “music”, and “audio” (among others). The first promising tool I came across was Voyant Tools (https://voyant-tools.org/). It’s pretty interesting and I highly recommend it if you’d like to visualize your text.

Voyant Tools

I copied and pasted the text component of Task 1 into Voyant Tools to see what would happen. It turns out, there is quite a lot of information to be gained by visualizing one’s text. It was pretty neat: the interactive chart (below) is from Voyant Tools. I noticed that the original text was cut into 10 equal-sized pieces with the most frequent terms from Task 1 plotted, showing the trend in discourse from the start to the end of the original blog post.

This was an interesting way to visualize my text, but it had nothing to do with sound. Though I learned a lot about the text I’d written weeks ago, I still hadn’t integrated sound…yet.
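As an aside, the kind of segment-by-segment counting behind a trend chart like that one can be approximated in a few lines of Python. This is only a rough sketch, not Voyant Tools’ actual method: the function names, the tiny stop-word list, and the way the text is sliced are all assumptions made for illustration.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption; real tools use much longer lists).
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "i", "my",
              "is", "it", "that", "for", "with"}

def tokenize(text):
    """Lowercase the text and return its words, minus stop words."""
    words = re.findall(r"[a-z']+", text.lower())
    return [w for w in words if w not in STOP_WORDS]

def term_trends(text, n_segments=10, n_terms=5):
    """Return {term: [count in segment 1, ..., count in segment n]}
    for the n_terms most frequent terms in the whole text."""
    words = tokenize(text)
    top_terms = [term for term, _ in Counter(words).most_common(n_terms)]
    # Slice the word list into n_segments pieces of roughly equal size.
    bounds = [round(i * len(words) / n_segments) for i in range(n_segments + 1)]
    trends = {term: [] for term in top_terms}
    for start, end in zip(bounds, bounds[1:]):
        counts = Counter(words[start:end])
        for term in top_terms:
            trends[term].append(counts[term])
    return trends
```

Calling `term_trends(my_text)` on a blog post (where `my_text` is a hypothetical variable holding the post as a string) would give one list of ten counts per frequent word, which is the same shape of data as the lines in the chart.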

Sonification

Enter: sonification. Again, Google was my go-to tool as I tried to figure out how to turn my data into sound or music or something audio-based. That’s where I came across “sonification”. I’d heard of the term before, but never really explored the concept. My Google search for sonification yielded two interesting results: TwoTone Data Sonification (https://twotone.io/, a free web-based app that turns your data into sound/music) and programminghistorian.org (more on this later).

TwoTone

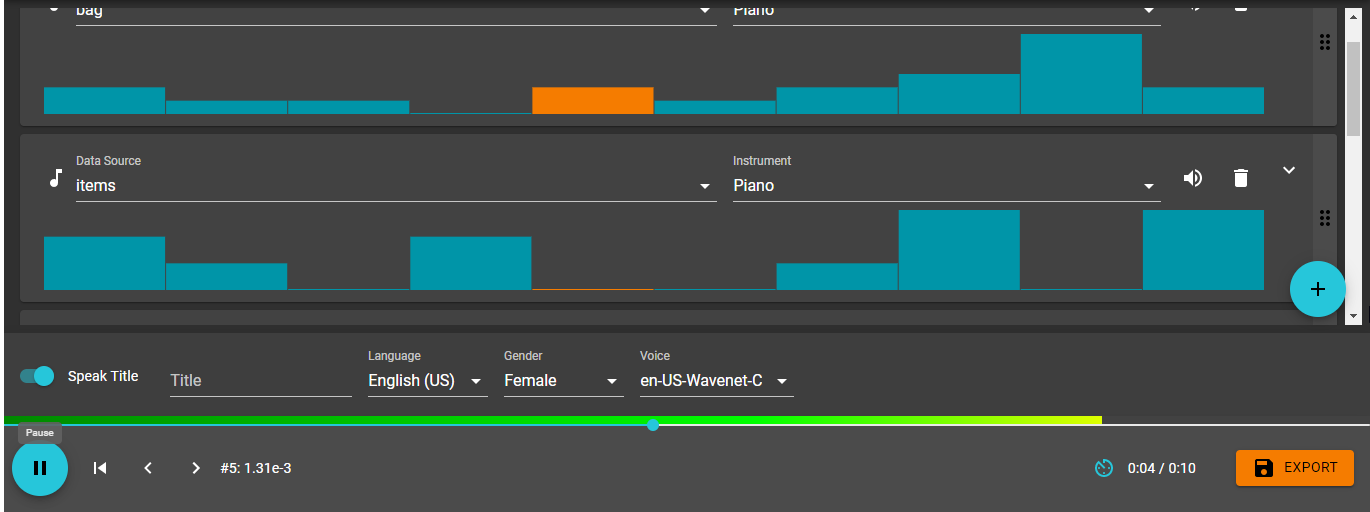

I exported the data from Voyant Tools and placed it into an Excel sheet. The exported data was divided into the same ten pieces shown in the graph/chart (above) indicating the trends of the words I’d used most frequently in the description of my image in Task 1. I then pasted the Excel data into TwoTone to see what would happen: the results were really cool! The text I’d written describing the photo of my bag had been sonified: I was now listening to my text! Check it out below:

Reflection

In preparing for this task, I hoped not only to produce something that satisfied the audio requirement but also to move beyond Audio and Linguistic Designs toward a more multimodal process (New London Group, 1996).

The first step in the process toward sonifying my text was to visualize the text (Visual Design). Using Voyant Tools, I was able to see my text as a word cloud (not included in this post), as a series of knots (which can also be used to sonify the text), and as the graph I included above. Using the graph, I could see the five words I repeated most often in the written piece: bag, bags, items, kids, and text. I was also able to use the graph to view the trends of my word use throughout Task 1 and view each word spatially (Spatial Design) in comparison to other words: the graph indicated where, in Task 1, I focused my attention on a particular word, and when that focus drifted toward another word (compare “kids” earlier in the written piece versus “bag” closer to the end).

When I transferred my data to TwoTone, I could also see the different “voices” representing the five most common words repeated in Task 1; as the music plays, different columns light up across the screen. (The image below displays the progress of the words “bag” and “items” during the 10-second sonification: note the yellow/orange coloured blocks indicating the notes being played.)

When I listen to my sonification, I can hear the repetition of certain notes that are pleasing to the ear. This repetition of sound suggests that in my writing, I return to common themes, thoughts and ideas (or that I’m quite repetitive…). The rhythmic nature of the notes suggests an interconnectedness between the ten pieces of the chart I created in Voyant Tools (above). The rhythm also allows the listener to hear how different words ebb and flow as the story progresses.

….teachers need to develop ways in which the students can demonstrate how they can design and carry out, in a reflective manner, new practices embedded in their own goals and values. They should be able to show that they can implement understandings acquired through Overt Instruction and Critical Framing in practices that help them simultaneously to apply and revise what they have learned. (New London Group, 1996, p. 87)

This task encourages us to take what we produced in Task 1 and, by reflecting on course material, stretch our learning by applying new knowledge to an “old” task in order to create something completely new. Sounds pretty transformative to me!

NOTE: The Programming Historian

I found this resource when I was trying to figure out how to analyze my text and convert it to an audio format. Though it’s beyond the scope of this course (and I don’t have time to read it all), I found Graham’s description of sonification quite helpful.
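For the curious, the basic idea of turning a series of numbers into notes can be sketched in a few lines of Python. This is only an illustration of the general technique (scaling each value onto a musical scale), not how TwoTone works internally and not code from Graham’s lesson; the function name and the choice of a C major scale are assumptions.

```python
# MIDI note numbers for one octave of C major, from C4 (60) up to C5 (72).
C_MAJOR = [60, 62, 64, 65, 67, 69, 71, 72]

def counts_to_notes(counts, scale=C_MAJOR):
    """Map each count linearly onto the scale: the smallest count
    gets the lowest note and the largest count gets the highest note."""
    low, high = min(counts), max(counts)
    if high == low:
        # A flat series has no trend, so every step gets the lowest note.
        return [scale[0]] * len(counts)
    top = len(scale) - 1
    return [scale[round((c - low) / (high - low) * top)] for c in counts]
```

Given the ten counts for one word, the function returns ten note numbers, so a word that appears more often in a segment sounds higher in that part of the piece. Each frequent word would get its own list of notes, much like the separate “voices” described above.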

References

Graham, S. (2016). The Sound of Data (a gentle introduction to sonification for historians). Programming Historian. https://programminghistorian.org/en/lessons/sonification

Sonification. (2020). In Wikipedia. https://en.wikipedia.org/w/index.php?title=Sonification&oldid=954085563

The New London Group. (1996). A pedagogy of multiliteracies: Designing social futures. Harvard Educational Review, 66(1), 60-92.