‘Collection of Fortune Cookie Verses from my Omnipotent iPhone’

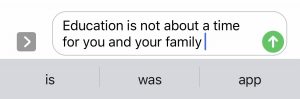

I’m sure Cathy O’Neil wouldn’t be surprised if I told her I thought my iPhone was part psychic and part Magic 8 Ball. My key takeaway from this module is that predictive text and its associated algorithms are far from arbitrary and generic. They are tailored to give a personalized experience based on the information the user has fed them previously. This is quite Vygotskian programming: it uses social information and experiences to create meaning and extend knowledge further. My naive world was cracked wide open in this module, exposing my dreams of rainbows and fluffy bunnies to a nightmare of Big Brother and Google stalkers.

The textual products where I have seen statements like the ones I generated are my ‘mom’ texts with friends and with parents of my children’s friends. I think it is fairly common vernacular for my demographic and social circle; the language is casual and informal, not academic at all. The textual products it would most relate to are conversational dialogue and comments on social media, such as Facebook and Instagram, and digital interpersonal communication such as texting.

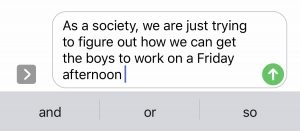

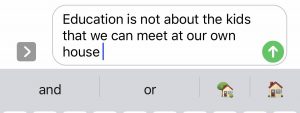

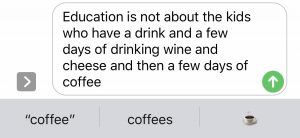

As a mom of two boys, I spend most of my texting arranging drop-offs and pick-ups from hockey practices (non-contact dryland training, even during COVID), planning outings with friends (small groups with social distancing), and handling all things related to feeding and entertaining my growing boys. Aspects of being an educator also came up frequently in the statements generated.

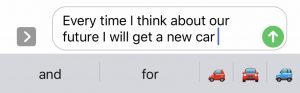

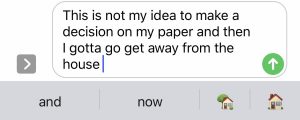

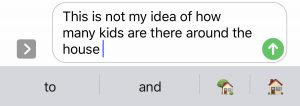

It was actually scary how much the predictive statements sounded like me and the way I text with my friends. They also finished my thoughts and used lingo that frequently appears in my communication with others. There was a human aspect to the choices and directions: much like the example O’Neil used of making dinner for her kids, I had a choice of three text/emoji responses, each of which could branch into further choices. Mostly, I was struck by how accurate predictive text was in microblogs:

- Finishing lines: for ‘pick up my…’ the choices were boys, kids, and mom, and for ‘meet up…’ they were dinner, lunch, and later (most of my conversations revolve around meals and my kids).

- Using slang like wanna and gonna.

- Use of the word ‘exactly’ (which I now realize I use too much!)

- Frequently used nouns such as cache, school, app, and house.

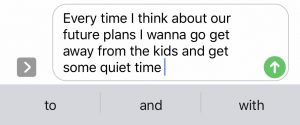

- I complain about my family way too much, and about my need for space and quiet. This may or may not include both hot and cold beverages.

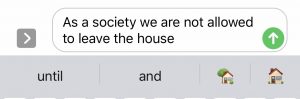

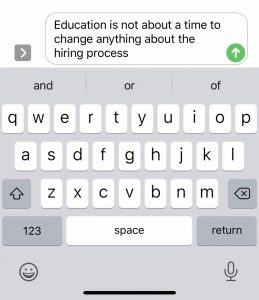
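To picture why completions like ‘pick up my…’ felt so personal, here is a minimal sketch of one simple way a keyboard *could* produce them: count which word has followed each word in my past messages and offer the three most frequent, like the three suggestion slots. This is an assumption for illustration only. Apple’s actual predictive-text model was not described in this module and is surely more sophisticated, and the message history and function names below are invented.

```python
from collections import Counter, defaultdict


def build_bigrams(messages):
    """Count which word follows each word across a (hypothetical) message history."""
    followers = defaultdict(Counter)
    for message in messages:
        words = message.lower().split()
        for current, nxt in zip(words, words[1:]):
            followers[current][nxt] += 1
    return followers


def suggest(followers, previous_word, k=3):
    """Return up to k most frequent next words (ties keep first-seen order)."""
    counts = followers.get(previous_word.lower(), Counter())
    return [word for word, _ in counts.most_common(k)]


# Invented message history, standing in for a user's past texts.
history = [
    "can you pick up my boys after practice",
    "i will pick up my kids at five",
    "could you pick up my boys tomorrow",
    "need to pick up my mom later",
    "wanna meet up for dinner",
    "meet up for lunch on friday",
]

model = build_bigrams(history)
print(suggest(model, "my"))  # ['boys', 'kids', 'mom']
```

Even this toy version "learns" my habits purely from what I have already typed, which is the personalization I found so unsettling.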

The use of predictive text (and even auto-correct) can lead to accidental miscommunication in any area, but, more sinisterly, it could shape public writing spaces in ways that unfairly categorize or misrepresent truth. The one predictive text of mine that surprised me used the words ‘hiring process’, echoing a recent conversation with a colleague.

This example is twofold. First, I was taken aback that predictive text generated this from one conversation; however, I frequently talk to colleagues and friends about education. Since I live in a smaller town, a few of my closest friends and confidantes are also co-workers. Second, I was reminded of comments I’ve heard from a few temporary teachers that getting a job depends on two things: the department-assigned priority category (based on experience within the department and First Nation identification) and using the right keywords in cover letters. When applying for jobs, these temporary teachers, who are striving for permanency, are learning to craft letters that include specific jargon from the posting so they score higher and get screened in by the system to the next round of interviews. If, for example, some of the keywords the system is looking for are ‘project-based learning’ and ‘extracurricular’, would it pass if I wrote something irrelevant like, “I once knew a guy who completed a project-based learning pizza eating contest that his three-legged dog said was extracurricular to his job as a lion tamer”? O’Neil (2017) reminded us that different jobs require people with different personality types and that algorithms often disqualify categories of people who would be ideal candidates. Could the same systems also qualify people who aren’t suitable simply because they can identify the hoops and jump through them seamlessly? I once had a smart, young student teacher who would easily have qualified for jobs under the local criteria, yet she had a small problem. Every time she tried to talk to a group of children (otherwise known as teaching!), she became terrified and broke down in tears. The rumoured hiring algorithm in our system would probably identify her as a good candidate, but I’m not sure a teacher with a fear of public speaking would pan out.
Thank goodness I had an amazing group of empathetic students that year, because other groups of students would have eaten her alive. If algorithms consider nothing beyond ‘if, then, and, or’ statements (Boolean-type categorization) to assign an objective value (number data) to a subjective one (humans), then I agree with what we heard in this module’s podcasts: it calls humanity and morals into question by valuing customers, employees, or students on data alone, without common sense. Examples from the podcast where following data and algorithms led to injustice and inhumanity, breeding racism, violence, and plain-old stupidity, include the story of the NYPD and the experience of Dr. David Dao.
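The cover-letter screening the temporary teachers described can be sketched as a naive ‘if, then’ keyword filter: count how many of the posting’s keywords appear in a letter and pass it if the count meets a threshold. This is a hypothetical sketch; I don’t know how our system actually works, and the keywords, threshold, letters, and function names below are all invented for illustration. It shows how such a rule would pass the lion-tamer sentence while rejecting a sincere letter that never uses the exact phrases.

```python
def keyword_score(letter, keywords):
    """Count how many posting keywords appear anywhere in the letter (case-insensitive)."""
    text = letter.lower()
    return sum(1 for keyword in keywords if keyword.lower() in text)


def screened_in(letter, keywords, threshold):
    """Pure if/then rule: advance the letter if it contains enough keywords."""
    return keyword_score(letter, keywords) >= threshold


# Hypothetical posting keywords, invented for illustration.
keywords = ["project-based learning", "extracurricular"]

silly = ("I once knew a guy who completed a project-based learning pizza eating "
         "contest that his three-legged dog said was extracurricular to his job "
         "as a lion tamer.")
sincere = ("I coach hockey after school and design units where students "
           "build real projects for our community.")

print(screened_in(silly, keywords, threshold=2))    # True
print(screened_in(sincere, keywords, threshold=2))  # False
```

Nothing in the rule asks whether the words are used meaningfully, which is exactly the missing common sense O’Neil warns about.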

O’Neil, C. (n.d.). The age of the algorithm. In 99 Percent Invisible [Podcast]. Retrieved from https://99percentinvisible.org/episode/the-age-of-the-algorithm/

.

.

Hey Valerie,

I found it so interesting that you felt that your predictive text represented you, when I had the completely opposite experience!

I really appreciated your example of the teacher who was qualified from an algorithmic perspective but in practice wasn’t actually ‘cut out’ for the task. Your warning about algorithms having the potential to “unfairly categorize or misrepresent truth” names one of the most concerning components of predictive text for me.

Thanks for the great read!