Introduction

The objective of this assignment is to appreciate the roles and nuances of definitions in technical writing, which help readers to understand complex terms. Parenthetical, sentence, and expanded definitions demonstrate different methods for explaining and detailing technical terms.

Situation and Audience

As the roles of computers and machines grow in society, so does interest in fully autonomous systems. As autonomous systems spread across industries, the implications of these technologies become more relevant in the public sphere. Myriad benefits are weighed against unanswered concerns, producing hotbeds of debate as these systems migrate from science fiction to everyday life. Hence, public awareness and opinion become increasingly important, not only regarding what these systems are, but also what they might entail in a mechanized future.

Parenthetical Definition

In his essay “Killer Robots” (2007), philosophy professor Robert Sparrow hinges his examination of fully autonomous systems (systems which utilize digital decision-making processes to act and make decisions independent of human interference) on an ethical need for responsibility in decisions that impact human lives.

Sentence Definition

Fully autonomous systems are computerized processes that utilize available data and digital decision-making algorithms to make choices and act independently in the face of unanticipated situations, eliciting important social discussions of ethical viability and considerations of dependence.

Expanded Definitions

What are fully autonomous systems and why are they important?

Fully autonomous systems are systems that utilize digital processes to accomplish objectives and make independent decisions when faced with unanticipated scenarios (Frost, 2010; Watson & Scheidt, 2005). The goal in implementing such systems is to accomplish complex objectives in efficient and mechanized ways, preserving human time and resources. Benefits of such systems include consistency and elimination of human error, cost effectiveness over time, and preservation of human resources (Frost, 2010). However, despite potential advantages, critics warn against a dependence on non-human decision-making, as well as ethical ramifications of such systems (Sparrow, 2007).

History

At least as far back as the ancient Greeks, whose myths included personality-driven sculptures (Watson & Scheidt, 2005, p. 368), societies have imagined non-living systems acting of their own accord. An expansive presence in science fiction continues this cultural trend, while technological advances in machine learning and rapid data processing lay the foundations for realizing fully autonomous systems. Cybernetics in the 1940s brought crucial comparisons between mechanisms and human activity, while digital innovations in the 1970s gave way to basic systems of planning and execution with little human interference (Watson & Scheidt, 2005, p. 369).

The late 1990s saw the first implementations of fully autonomous navigation systems, which guided the Deep Space 1 exploration mission (Bhaskaran, 2012; Frost, 2010).

Similarly, the 21st century ushered in a new wave of autonomous systems. In 2002, the simple and innovative Roomba vacuum cleaner brought semi-autonomous systems to consumer living rooms (Frost, 2010, p. 2), while the United States military announced plans for fully autonomous units, including a ‘robot army’ slated for deployment in 2012 (Sparrow, 2007). Commercially, consumer-facing tech giants, such as Google and Amazon, also took up the autonomous robotics mantle with plans for self-driving cars, autonomous-navigation delivery drones, and fully mechanized warehouses, increasing relevance to daily life (Guizzo, 2011; Wurman, D’Andrea, & Mountz, 2008).

Factors and Processes

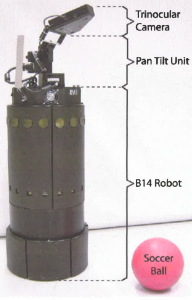

Drawing on programming, objectives, and environmental sensor data, fully autonomous agents process and weigh chains of constraints and potential outcomes before reacting and making decisions. In doing so, the agents rapidly create and modify conceptual decision maps—networks of potential choices and results—illustrating likely consequences and future outcomes. Leading artificial intelligence thinker Alan K. Mackworth (2010) uses the example of reactive soccer-playing robots to illustrate this decision-making process, as the robots must contend with a dynamic environment that includes a ball, other robots, and set objectives.

Mackworth’s soccer-playing robot prototype (Mackworth, 2010, p. 28)

After building conceptual decision networks, autonomous agents choose optimal courses of action. Here, the criteria or perspectives for making these decisions lay important ethical foundations for such systems. Some theorists argue for utilitarian perspectives, allowing autonomous agents to choose the best overall outcomes for a society in general, given a set of circumstances. Others champion consequentialist or objective-based decision-making, whereby completing the objective is the ultimate factor in decision-making. Still others suggest self-preserving models or hybrid systems (Goodall, 2014).
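As a rough illustration of how the choice of criterion changes the chosen action, the following minimal sketch scores the same set of candidate outcomes under three of the perspectives named above. Everything in it is hypothetical and not drawn from the cited sources: the `Outcome` fields, the numeric scores, the action names, and the `choose` function are assumptions made only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """One leaf of a (hypothetical) decision map: an action and its predicted results."""
    action: str
    societal_benefit: float    # assumed score for overall good to society
    objective_progress: float  # assumed score for completing the assigned objective
    self_risk: float           # assumed risk of damage to the agent itself


# Each policy ranks outcomes by a different criterion.
SCORERS = {
    "utilitarian": lambda o: o.societal_benefit,
    "objective": lambda o: o.objective_progress,
    "self_preserving": lambda o: -o.self_risk,  # lower risk ranks higher
}


def choose(outcomes, policy):
    """Return the action whose predicted outcome scores highest under the policy."""
    return max(outcomes, key=SCORERS[policy]).action


# Illustrative, made-up predictions for a vehicle facing an obstacle.
OUTCOMES = [
    Outcome("brake", societal_benefit=0.9, objective_progress=0.2, self_risk=0.5),
    Outcome("swerve", societal_benefit=0.6, objective_progress=0.5, self_risk=0.1),
    Outcome("continue", societal_benefit=0.1, objective_progress=0.9, self_risk=0.7),
]

if __name__ == "__main__":
    for policy in SCORERS:
        print(policy, "->", choose(OUTCOMES, policy))
```

With these made-up scores, each policy selects a different action from the same predictions, which is why the choice of criterion carries ethical weight. A hybrid model could, under the same assumptions, rank outcomes by a weighted sum of the three scores.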

Notable in this divisive debate about decision-making is a juxtaposition of human choice processing with that of autonomous systems. Should fully autonomous systems act as humans would or should societies hold mechanized systems to different decision-making standards (Goodall, 2014; Sparrow, 2007)?

Goodall’s depiction of an autonomous vehicle’s decision-analysis (Goodall, 2014, p. 61)

Implications

With advances in fully autonomous systems come discussions centered less on what is possible and more on what is ethical or what should be done regarding autonomous technologies. Proponents point to robot soldiers sparing human lives in battle (Sparrow, 2007) or self-driving cars eliminating the hazards and inefficiencies of human error from the driving equation (Goodall, 2014; Guizzo, 2011). However, skeptics highlight a dangerous reliance on non-human systems to make decisions (Sparrow, 2007), wondering whether societies can trust fully autonomous computers to make ethical decisions.

Sparrow (2007) questions how fully autonomous weapons systems might weigh crucial cost-benefit analyses, such as taking prisoners of war or deciding whom to hit in an unavoidable vehicle collision. Similarly, he wonders about the ethical ramifications of these analyses and whether creators can instill human-centric ethical values in systems that reason for themselves. When it comes to fully autonomous weapons, specifically, Sparrow wonders what message about the value of human life is sent by a dynamic in which only rich nations send robots to war. He also extends this discussion to ask if robot soldiers eliminate an important deterrent to war by minimizing the cost of human casualties.

Balancing these pros and cons raises new considerations, such as deciding whom to hold accountable when a fully autonomous machine makes an unpopular decision (Sparrow, 2007). As a result, societies must collectively weigh intrinsic benefits against potential drawbacks, both realized and unanticipated. No longer relegated to theoretical and academic corner discussions of feasibility, conversations about fully autonomous systems now extend to the public arena, where debates regarding such systems will define trajectories and attitudes for the future.

References

Bhaskaran, S. (2012). Autonomous navigation for deep space missions. Presented at SpaceOps 2012 Conference. Stockholm, Sweden: SpaceOps.

Frost, C. R. (2010). Challenges and opportunities for autonomous systems in space. Presented at the National Academy of Engineering’s U.S. Frontiers of Engineering Symposium. Armonk, New York: NASA Ames Research Center.

Goodall, N. (2014). Ethical decision making during automated vehicle crashes. Transportation Research Record: Journal of the Transportation Research Board, 2424, 58-65. http://dx.doi.org/10.3141/2424-07.

Guizzo, E. (2011). How Google’s self-driving car works. IEEE Spectrum Online. Retrieved from http://spectrum.ieee.org/automaton/robotics/artificial-intelligence/how-google-self-driving-car-works.

Mackworth, A. K. (2010). Architectures and ethics for robots: Constraint satisfaction as a unitary design framework. In M. Anderson & S. L. Anderson (Eds.), Machine Ethics (pp. 335-361). New York: Cambridge University Press.

Sparrow, R. (2007). Killer robots. Journal of Applied Philosophy, 24(1), 62-77.

Watson, D. P., & Scheidt, D. H. (2005). Autonomous systems. Johns Hopkins APL Technical Digest, 26(4), 368-376.

Wurman, P. R., D’Andrea, R., & Mountz, M. (2008). Coordinating hundreds of cooperative, autonomous vehicles in warehouses. AI Magazine, 29(1), 9-20.

PDF Version of Definitions Assignment: Definition Assignment – Wesley Berry