I have always been fascinated by AI and the possibilities it presents for humankind, not just in the future but even today. I have done some research on the ways AI can enhance teaching and learning, and on what the future of education might look like (my previous assignments for 523: A1 and A3).

I am not entirely idealistic, however, and have been exploring the ethics and biases within AI, specifically with predictive modelling used in educational and professional settings. Similar to the predictive policing that PJ Vogt addresses in his podcast episodes, predictive modelling uses algorithms that may be inherently biased and ethically questionable. Models that are used to predict student success or make enrolment decisions are not always “fair”. The algorithms that make up these models need lots and lots of data, most likely from the same institution that is developing the model. If this historical data is biased, the resulting model will propagate the same biases and discriminatory decisions. As Cathy O’Neil mentions in her talk, these machines/AI are like mirrors – they reflect to us our own inherent biases.
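To make the "mirror" idea concrete, here is a minimal sketch of how biased historical decisions can carry over into a model. Everything in it is an assumption for illustration: the groups, scores, and admission outcomes are invented, and the "model" is a deliberately naive rule (learn the lowest score that was ever admitted in each group), not how any real institution's system works.

```python
# Hypothetical historical records: (group, test_score, was_admitted).
# Group "B" applicants were admitted less often than group "A" applicants
# with the same scores, so the bias is already present in the data.
HISTORY = [
    ("A", 70, True), ("A", 60, True), ("A", 50, False), ("A", 80, True),
    ("B", 70, False), ("B", 60, False), ("B", 50, False), ("B", 80, True),
]

def fit_thresholds(records):
    """Learn, per group, the lowest score that was historically admitted."""
    lowest_admitted = {}
    for group, score, admitted in records:
        if admitted:
            current = lowest_admitted.get(group, score)
            lowest_admitted[group] = min(score, current)
    return lowest_admitted

def predict(thresholds, group, score):
    """Predict admission by comparing a score to the group's learned cutoff."""
    return score >= thresholds[group]

thresholds = fit_thresholds(HISTORY)
print(thresholds)                    # {'A': 60, 'B': 80}
print(predict(thresholds, "A", 70))  # True
print(predict(thresholds, "B", 70))  # False
```

Two applicants with the same score of 70 get different predictions, purely because the past decisions the model learned from treated their groups differently.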

Ekowo and Palmer (2016) found in their research that early alert predictor systems flagged low-income, non-binary, students of colour for poor achievement more frequently than higher income, Caucasian students (p. 14). To these flagged students, the institution’s recommender systems would then suggest courses and majors that may not be as economically beneficial or as challenging as those recommended to their Caucasian counterparts (pp. 14-15). As with the policing issues discussed in Vogt’s podcast, these machines disadvantage those who have historically been disadvantaged to begin with. Ekowo and Palmer suggest that students who are discriminated against by the algorithm become demoralized, leading to lower self-esteem, disadvantageous choices, and self-fulfilling prophecies of underachievement (p. 15). This 2016 TED Talk by Zeynep Tufekci highlights some more issues with biases in AI and emphasizes the importance of human morals in countering them. (See also: Keep human bias out of AI and Fighting algorithmic bias). Additionally, I believe that it is more important than ever to have transparency in the development of these algorithms. If we are unable to look in the mirror ourselves, maybe someone else can hold it up for us. If, instead of defending or accepting the biases, we work together to eliminate or diminish them, we will create a better, fairer world (and there is my idealism).

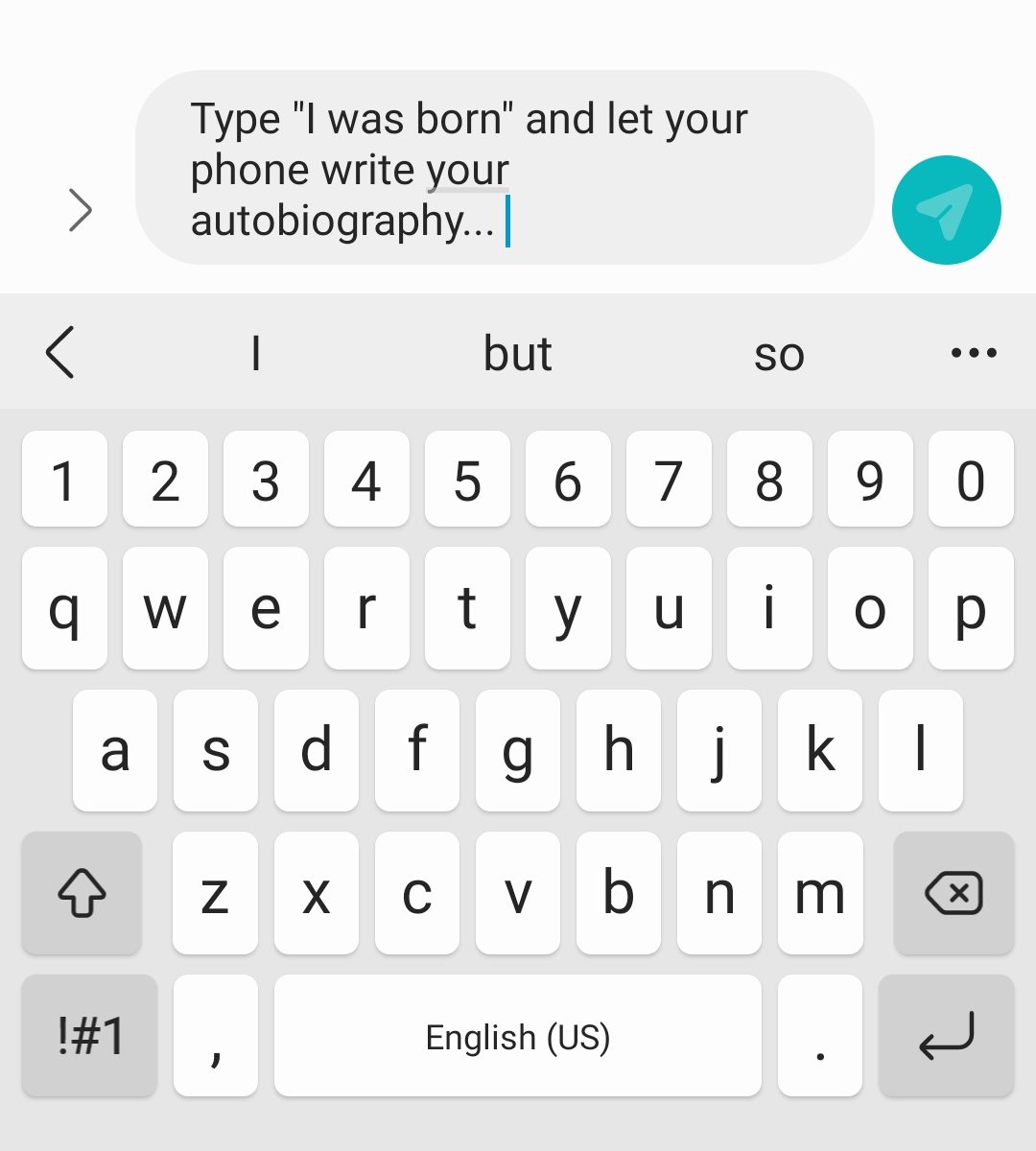

For this task, I was fascinated by the “sentences” my phone’s predictive algorithms came up with, so I did all five. Here is the screenshot of the sentences, and below you can find the videos of me (slowly) generating these.

Not all of these sentences make complete sense, but they are not complete gibberish either. The meaning behind all of these can be easily inferred. I think the reason for this is that when I was given the choice of three predictions, I chose the one I thought would flow well in a coherent sentence. I did not realize I was doing this at first, and I’m not sure when I realized this either. This already reflects my bias before the sentence is even completed – not that I knew what the phone was going to predict next. Regardless, I don’t think all of them are entirely in my “voice”.
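As a rough illustration of the "choice of three predictions", here is a minimal sketch of a next-word suggester. It assumes a simple bigram model (count which word most often follows the current one); I do not know what my phone actually uses, and the typing history below is invented.

```python
from collections import Counter, defaultdict

# Hypothetical typing history; a real keyboard learns from far more text.
CORPUS = (
    "i think education is important . "
    "i think technology is fun . "
    "i think education is not fair"
)

def build_bigrams(text):
    """Count, for each word, which words have followed it."""
    following = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def suggest(following, word, k=3):
    """Return up to k of the most frequent followers of `word`."""
    counts = following.get(word, Counter())
    return [w for w, _ in counts.most_common(k)]

model = build_bigrams(CORPUS)
print(suggest(model, "think"))  # ['education', 'technology']
print(suggest(model, "is"))     # three equally likely candidates
```

The model only offers candidates. Picking the one that "flows well" is still up to the person typing, which is where my own bias enters the sentence.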

The first one about education is relevant to my job – I teach IB and this year’s grade 11 class have suffered the most, in my opinion, because they are still learning remotely and I have never met most of them in person. Maybe my phone is telling me it won’t be fair to test them? I’m not sure why it’s only the boys who can see if anyone else wants to work on this mysterious project, though.

I found the second sentence about technology the most hilarious. I would say the Marvel movies have awesome technology. It is my dream to visit Wakanda and work with Shuri. However, “all of us can be looked up on the livestream” is definitely NOT my idea of good technology! That is a definite NO… is this a warning, phone??? I thought the big smile at the end of this sentence was super ironic.

I am not going to comment on the third one, but I think the fourth sentence is closest to my “voice”, at least for the first half of the sentence. This sentence reminded me of “with great power comes great responsibility”. Clichéd, I know. However, I do think that humanity has a long way to go to make the world “fairer”. Eliminating biases from AI might be a good place to start addressing this goal. I agree with both Shannon Vallor and Cathy O’Neil that fiction has us fearing robots taking over the world, when we should instead see how AI can support us in achieving what we could not otherwise achieve.

The fifth sentence is the one I understand the least. Maybe we should worry about cats ruling the world, not AI?

What I reflected on most through this exercise is the fact that a lot of these predictions were able to capture my personal beliefs, feelings, or values to an extent. Had these prompts been more politically or professionally inclined, they might have revealed more about me than I would have chosen. These sentences here are quite harmless, I think, but I wonder whether they could be more “damaging” were they completely automated, without any editing or inference on my part.

—

References:

Ekowo, M., & Palmer, I. (2016). The promise and peril of predictive analytics in higher education. New America. Retrieved from: https://explore.openaire.eu/search/publication?articleId=od______2485::e5cde111791c43368359153fc42ebeea

O’Neil, C. (2017). Justice in the age of big data. Retrieved from: https://ideas.ted.com/justice-in-the-age-of-big-data/

Santa Clara University. (2018). Lessons from the AI Mirror | Shannon Vallor. Retrieved from: https://youtu.be/40UbpSoYN4k

Talks at Google. (2016). Weapons of math destruction | Cathy O’Neil | Talks at Google. Retrieved from: https://youtu.be/TQHs8SA1qpk

Vogt, P. (n.d.-a). The Crime Machine, Part I. In Reply All.

Vogt, P. (n.d.-b). The Crime Machine, Part II. In Reply All.

—