Findings from Readings

From O’Neil, C. (2017):

- People tend to see algorithms as purely mathematical and unbiased, but our inherent biases are programmed into them (poorer neighborhoods are more likely to be flagged as crime hotspots, and Black and Hispanic people are more likely to be poor and living there, compared to rich white people who are also committing crimes)

- The collected data that judges use to predict someone's chances of reoffending rates Black people as higher risk than white people

From Vogt, P. (2018):

- Random ticketing was driven by the need to meet quotas for COMPSTAT data

- Police would downgrade crimes so that they would not show up on COMPSTAT, artificially lowering the crime rate so that they would survive the COMPSTAT meeting

- Police had to meet quotas to show that they were being more active by issuing more summonses and frisking more people, not by actually solving crimes

- In impact zones, police officers would issue summonses to innocent people at random and target young Black and Hispanic men

- White men would not be targeted

Task reflection

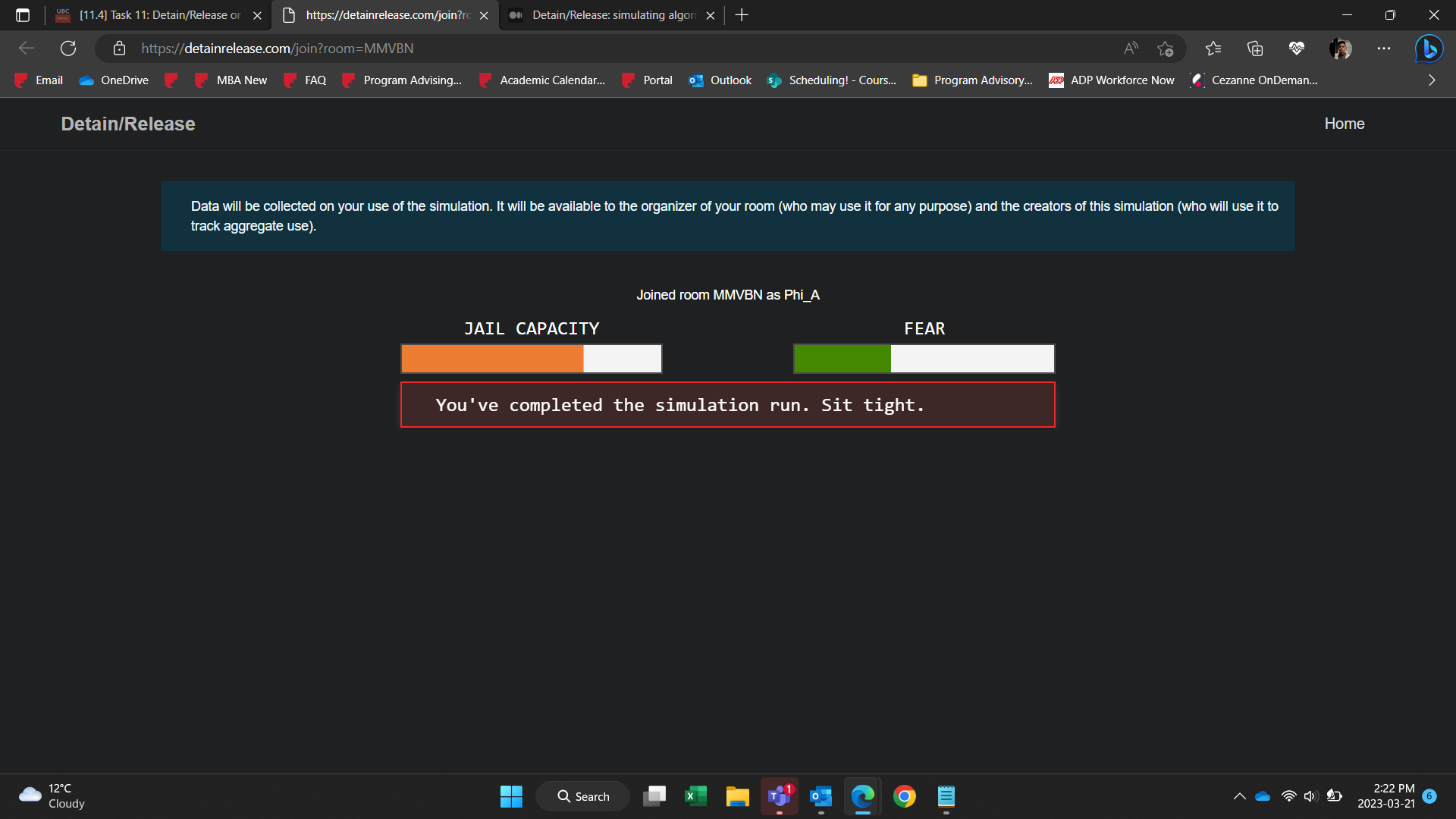

After the readings and podcasts, I was quite wary of being biased in my decisions while going through the Detain/Release simulation. I honestly should have blocked the AI-generated mosaic of the people I was making decisions about so that race and skin color did not play a factor. Nonetheless, I needed to experience the simulation in full, so I observed everything. I based my decisions on their statistics, such as likelihood of committing another crime, violence, and failure to appear, as well as on the crime itself. Their age, picture, and name did not influence me much.

However, upon reading Porcaro (2019) after completing the simulation, I realized that I had no idea how the system measured these people's chances of committing another crime, their level of violence, or their tendency to miss trial. I simply believed what was shown to me and based my decisions on that data. Perhaps this is similar to how lower-level cops felt when instructed by their superiors to meet quotas and specifically target men of color: blindly following instructions based on supposedly “unbiased” data can have dangerous consequences. It would be interesting to see the results from the class data, as I was unable to see them after completing the simulation.

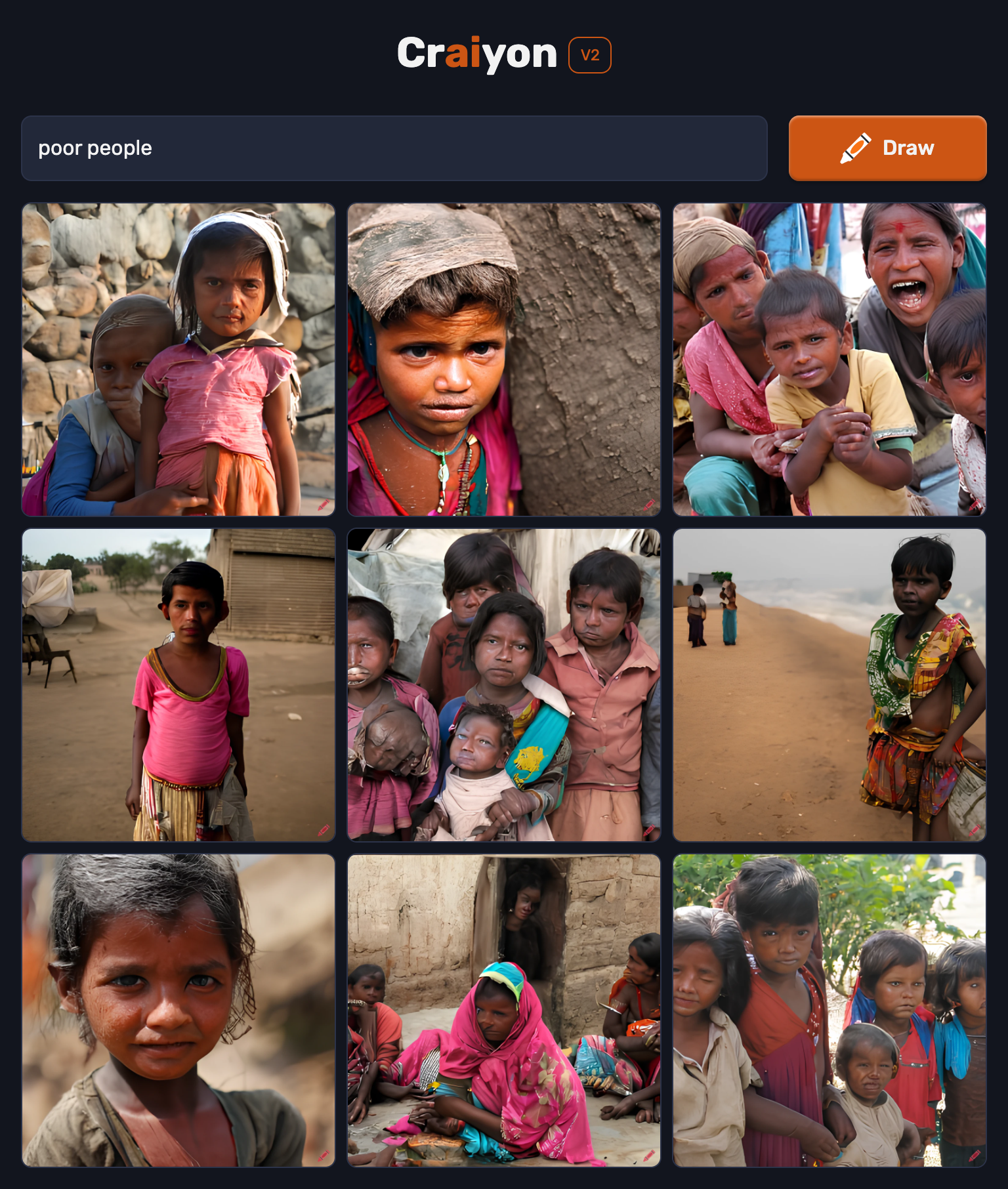

Although it was a separate option, I was curious about how the Craiyon AI would draw these prompts (rich people and poor people) after seeing the example in the module. Because the prompts were so general, I did not know exactly what to expect. However, I could almost guarantee that the images of poor people would include some people of color in desolate neighborhoods, and that the rich people would be mostly white. The results are below. How do you feel about what you see?

References

O’Neil, C. (2017, April 6). Justice in the age of big data. TED. Retrieved August 12, 2022.

Porcaro, K. (2019, January 8). Detain/Release: simulating algorithmic risk assessments at pretrial. Medium.

Vogt, P. (2018a, October 12). The Crime Machine, Part I (no. 127) [Audio podcast episode]. In Reply All. Gimlet Media.

Vogt, P. (2018b, October 12). The Crime Machine, Part II (no. 128) [Audio podcast episode]. In Reply All. Gimlet Media.

Hi Phiviet,

I linked to your task here:

https://blogs.ubc.ca/etec540lyasin/2023/04/08/link-5-phiviet-vo-task-11-text-to-image/

l.