Though the use of generative models to produce images from text prompts is quickly becoming both easier and more useful, one of its difficulties, which does not seem to be easy to resolve, draws us back not only to the readings we are currently doing on algorithms but also to our discussion of the Golden Record. This problem may be described, simplistically, as the problem of transparency. I would suggest that this manifests in at least two ways. First, it is not really possible for the model’s user, and often not even for the model’s developer, to know exactly how the model reached its image from its data. This often depends on the model in question, some are more transparent in this respect than others, and some more transparent to their developers than to their users, but in many cases, it is impossible to really understand the process the model takes from input to output.

Again, it is also sometimes difficult to understand which training material was used for the model as a whole. A model is, after all, only as good as its training data, but understanding how good that training data is, particularly as the model’s user, is very difficult. When considering Craiyon, just for example, and asking it to “Draw a very cute kitten lying down and looking at the camera” I am able to obtain the following image.

I thought about this prompt, and all the others I used, to try to make inferences about the data used to train the model. As expected, because it is fairly simple, and because this is something which appears quite often in images made before the model’s production, the model was able to put forward a very clear image from this prompt, and the image seemed to match the prompt.

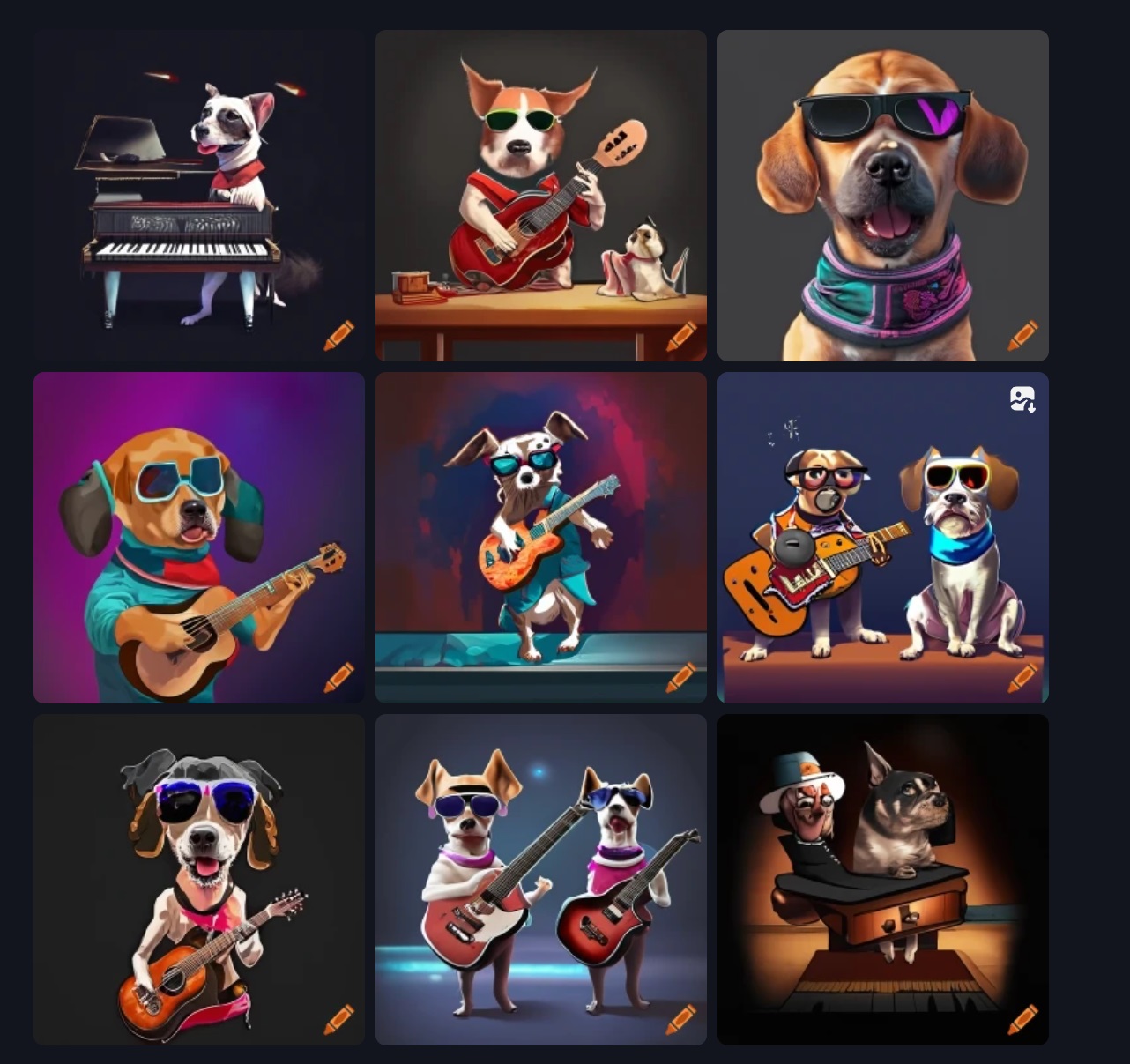

Where the model was given more complicated prompts, however, it often produced something like what was wanted, but failed in important details. For example, when I asked it, being quite specific, to “create an image of 3 dogs playing together each playing with an instrument. Dog 1 is playing the piano with dark sunglasses, dog 2 is playing the guitar with clothing like rock musicians and dog 3 is playing the drums with a bandana and sunglasses”.

As can be seen, the dogs are playing instruments, but in most cases, the dogs are playing instruments which can best be described as hybrids of those which we know of. The ability to combine seems to require far more training data for this model though, again, it is difficult to say whether the issue is insufficient data, a problem in the model itself, or a lack of variety in the training data given. Again, all that can be done, particularly by the model’s user, is to infer, from fairly little evidence, what the problem may be. Further evidence for the existence of the problem itself, however, can be seen when I asked the model “create an image of 3 robots doing house chores. Robot 1 is cleaning the dishes, Robot 2 is mopping the floors and robot 3 is cleaning the shelves”

Once again, the issue seems to be combination. The robots are not together, they are in separate images. They are also not cleaning, rather, they are in the vicinity of cleaning activities, not performing those activities themselves. There seems to be a problem in conjoining different ideas but, besides the existence of the problem, it is difficult, as someone using the model, to connect that problem with a specific lack, whether in training data or in the model itself.

cody peters

November 27, 2023 — 11:26 am

Hello Hassan, I just wanted to start off saying that I too decided to mess around with Craiyon for this task, and I also ran into a bit of the same conundrum; in creating images using this sort of tool, we are encouraged to be as specific and detailed in our prompts as possible in order to achieve an end result that best fits within our own parameters. However, as you saw yourself, there are certain prompts or keywords that do not appear to be applicable to this particular tool; namely, the inclusion of multiple individual elements (three dogs, multiple robots, etc). I wonder if it is simply a feature of this sort of tool to prioritize generating singular images over multi-facet works? I also wonder if the wording that you chose could possibly be re-worked for better results? I have found in my own experience using generative ai art tools that I often have to re-work a prompt several times before I get to an end result that is close to what I want. I would try to re-write your prompt of “create an image of 3 robots doing house chores. Robot 1 is cleaning the dishes, Robot 2 is mopping the floors and robot 3 is cleaning the shelves” into “three robots depicted in a line, with the leftmost robot cleaning dishes, the center robot mopping the floor, and the rightmost robot cleaning some shelves”, and see if that changes the orientation or inclusions of different elements into the revised images. It makes me wonder if it is a problem with the ai art generation, or a miscommunication due to semantics and how the program is laid out?