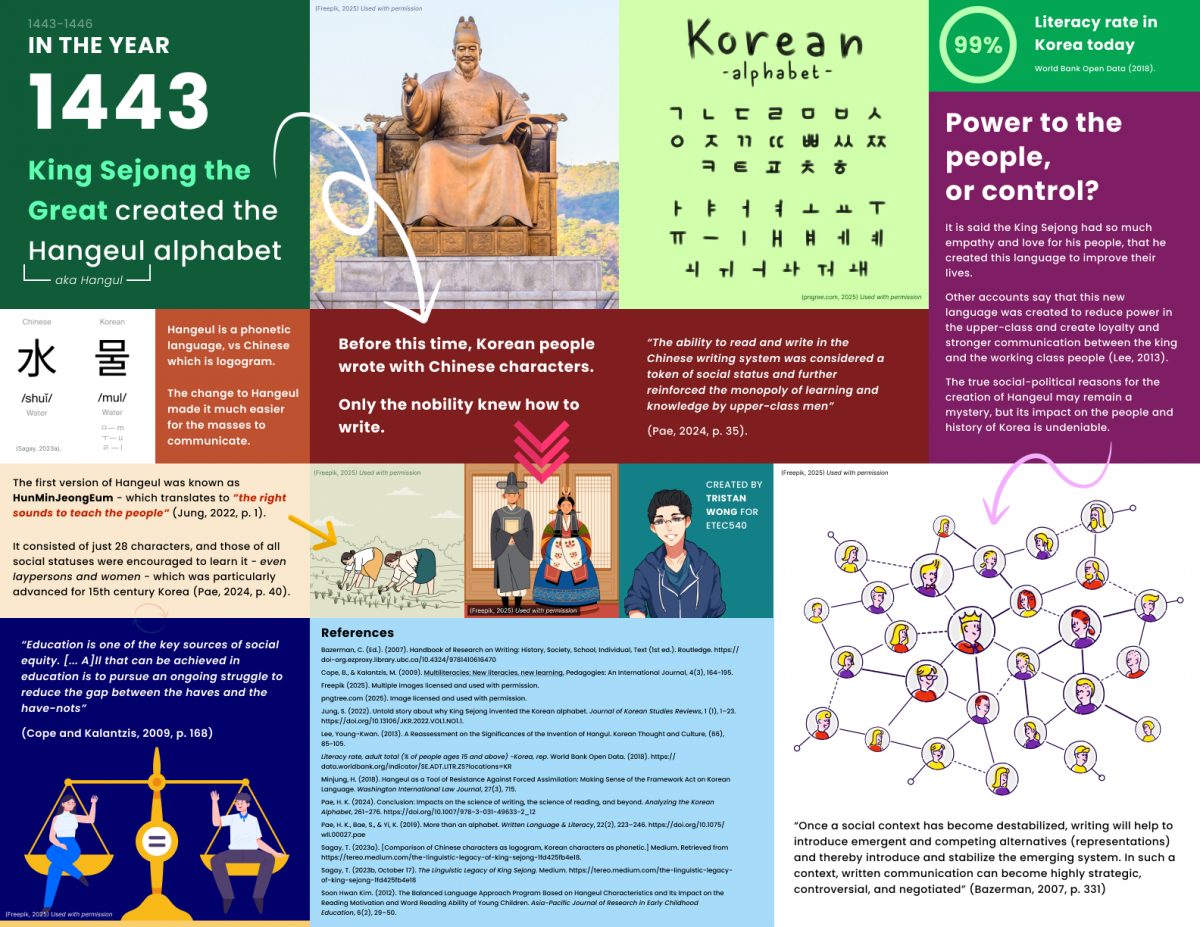

Link 1 – Task 1

https://blogs.ubc.ca/sourabhaggarwal/2025/01/15/whats-in-my-bag/

Since this was the first assignment in this course, I was really curious to see what other people included as part of their “bag”. After reading the example provided to the class, I felt that my bag was somewhat boring and hyper-functional (the example featured an avid paddler’s bag!). I also felt that what I had presented was a bit “bleached” and revealed little about me. After visiting Sourabh’s submission, I felt a lot better because:

- He also had a bag that I felt was similar to mine in terms of function

- Even though his bag was similar in function, it still revealed a lot about him; in fact, there was plenty of information on display. I shared these thoughts in a comment on Sourabh’s post as well.

With the help of my peers’ and professor’s comments, I realized that my bag was actually interesting and that, despite my misgivings, a lot of information could be drawn from my image. I believe this gave me a really good foundation for the course and opened my mind to the different ways of thinking I might be exposed to along the way.

Link 2 – Task 3

https://blogs.ubc.ca/keizern/voice-to-text-task-3

As captured in my comments to Natalie, I felt that we had many similar outcomes from the voice-to-text assignment. We both found punctuation to be one of the biggest challenges of this task.

We both also agreed that a scripted version of our oral story would have been the most organized. However, Natalie focused more on her own ability to “control” the outcome by speaking more clearly, whereas I focused more on how such a change would affect the text output.

Lastly, we both came away seeing oral storytelling as a category in its own right. Natalie described it as an “art form in itself” (Keizer, 2025), while I wrote that “Oral storytelling is also in many ways a form of visual storytelling” (Wong, 2025). We both highlighted the differences between oral and written storytelling, including the pros and cons of each.

References

Keizer, N. (2025, January 25). Voice to text task 3. Text Technologies: The Changing Spaces of Reading and Writing. https://blogs.ubc.ca/keizern/voice-to-text-task-3

Wong, T. (2025). Task 3: Voice to Text Task. ETEC 540 Tristan Wong. https://blogs.ubc.ca/twong540/task-3-voice-to-text-task/

Link 3 – Task 6

https://blogs.ubc.ca/twong540/task-6-an-emoji-story/

Pasted from my blog entry (linked above)

As part of my Task 6 submission, I visited my colleagues’ websites and took my best guess at what their emoji stories could be:

Tom Skinner created several really creative emoji stories, including Pineapple Express, Batman, Spider-Man, and Brokeback Mountain: https://sites.google.com/view/etec540-tomskinner/assignments-and-activities

Jazz Chapman wrote about a medical TV show. I’m not a big TV watcher, so my best guess would be Grey’s Anatomy or something similar: https://blogs.ubc.ca/jasminechapmanetec540/2025/02/16/an-emoji-tv-show/

Tatiana Kloster’s post depicted a movie whose title I’m not sure of. When I first saw the emojis, I thought of the movie “Bridesmaids”, but I realized that it doesn’t match the title hint, so I was a bit stuck: https://sites.google.com/view/etec540/weekly-tasks/an-emoji-story

It was very interesting to see the variety of stories that my colleagues created for this task. Approaches varied widely, with many people using emojis to describe the title, the plot, or both! I realized that my take on this task was a bit simplistic, but I still believe it was an effective way to convey the crux of the movie. That being said, comments on my post made me realize that a few other movies probably share a very similar plot to the one I described, which was an oversight on my part.

I do not regularly watch movies; in fact, I usually avoid them, as watching movies is not an activity I particularly enjoy. I probably watch fewer than five movies a year (if that). So I found this task slightly challenging when visiting other webspaces, as there were probably many “easy” emoji stories that I could not recognize simply because I had not seen the movies. To me, this highlighted how our culture, background, and upbringing might influence how effectively we can interpret and communicate with others.

Link 4 – Task 7

https://blogs.ubc.ca/jgock87/2025/02/21/task-7-mode-bending/

This week, I chose Jon’s task because we both took away similar points from the week’s readings and decided to “mode-bend” by shifting the assignment to an aural format.

Interestingly, this is mostly where the similarities in our approaches end: Jon’s direction focused on the sounds of the objects in his bag, while I took a more socio-cultural approach, attempting to create a “reaction-style” piece.

What I really appreciated about Jon’s post was how he highlighted the accessibility benefits of an auditory approach, and how cultural context might heavily shape one’s perception of those sounds. I found his post extremely insightful, and it had a real impact on my thinking.

Meanwhile, I attempted to offer a slight commentary on present-day content by paying homage to both podcasts (which have grown rapidly in popularity over the past few years) and reaction videos, an internet staple. I also thought that swapping in more personal items might make for a more interesting take on the original task.

While both are valid directions to take this assignment, I felt very strongly that Jon had presented some powerful ideas that stayed with me through the rest of the course.

References

Cope, B., & Kalantzis, M. (2009). Multiliteracies: New literacies, new learning. Pedagogies: An International Journal, 4(3), 164-195.

The New London Group. (1996). A pedagogy of multiliteracies: Designing social futures. Harvard Educational Review, 66(1), 60-92.

Link 5 – Task 8

https://blogs.ubc.ca/writingacrossthecenter/task-8-golden-record-curation/

For this link, I chose Evan’s submission. While we mostly agreed on the approach, Evan offered some valuable insights that I hadn’t considered.

For example, Evan’s process involved dividing the audience into humans and aliens, and then focusing on how to select tracks for an alien audience, disregarding factors that would not be relevant to them (such as country of origin).

It may sound slightly humorous, but I hadn’t thought about how factors that were important to us (e.g., country of origin) would be completely irrelevant to an alien civilization. In fact, I had made a point of ensuring that as many continents as possible were represented on the record.

Even though aliens would know nothing of our civilization, continents, and cultures, I am not sure that country of origin should be discarded entirely. An alien audience might not care where a piece of music comes from, but there is no denying that country of origin shapes the sound of the music. From a human perspective, it is important to include diverse sounds and perspectives, which is why I think it could still be an important factor in the decision-making process.

Even so, Evan and I ended up with five of the same ten tracks, which is a substantial overlap. This suggests that the criteria we both used to choose a variety of songs, such as instrumental versus vocal, speed, and purpose, still led us to similar choices in the end.

Link 6 – Task 10

https://blogs.ubc.ca/veltri540/2025/01/12/task-10-attention-economy/

Isabella wrote about her challenges using the User Inyerface website as someone with ADHD. As someone who has also been diagnosed with ADHD, I instantly connected with her post.

While our experiences using the website may have differed, I strongly related to the frustration that Isabella described in her reflection. I did not struggle with this task in the same way, but the experiences she described are ones I have felt many times over my life, in many different scenarios.

It is always powerful to read the stories of others who have experienced things similar to what you have, and I think this is a great example of how these sorts of tasks can link and unite people (even in a passive or roundabout way). Learning about others is how we can create a sense of community and belonging, even in a world that has become increasingly isolated and digital.