I came across an article pondering Google’s newest AI software, DeepMind, and followed a hyperlinked thread to a page about Project Euphonia. The AI software behind automatic speech recognition (ASR), such as that which powers Google Assistant, is consistently reliable for most speakers, but may not function as intended for those with atypical speech patterns.

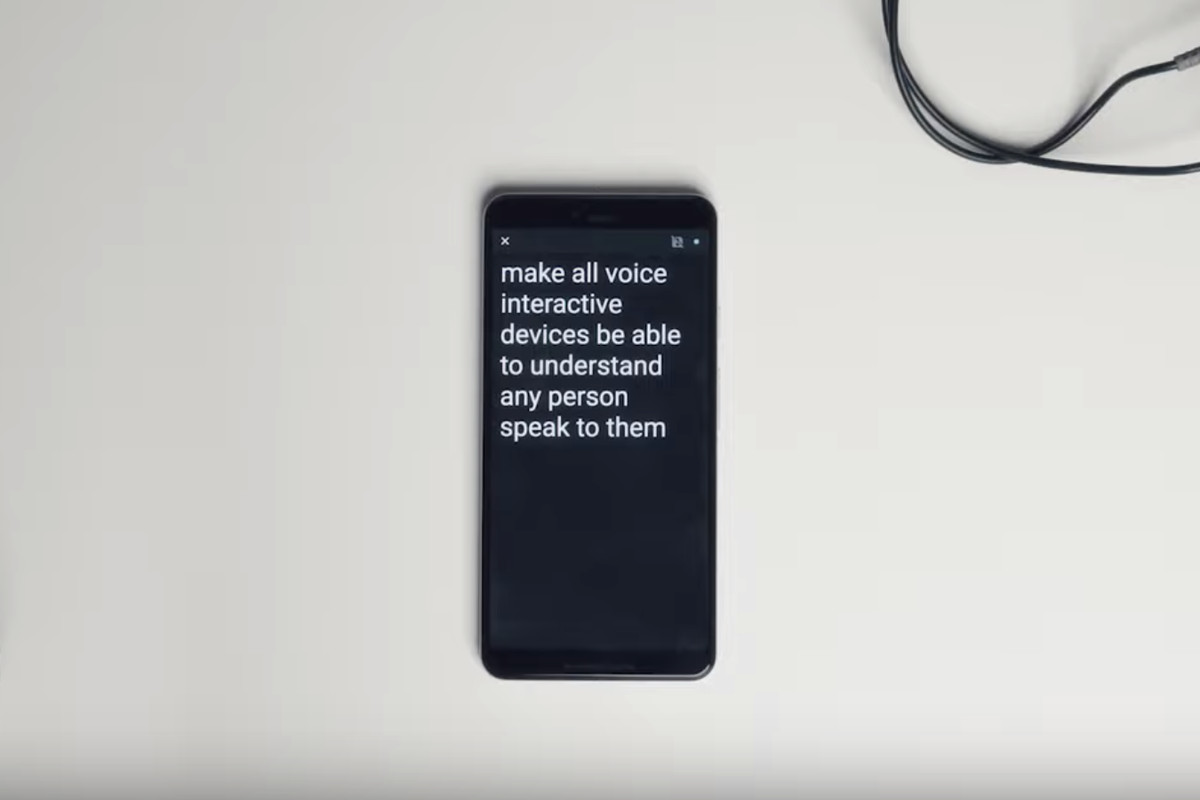

Project Euphonia is a Google research project focused on training the AI to understand differing speech patterns more effectively by creating personalized ASR models. Speakers make recordings using “at-home” hardware, which can be any mobile device with an enabled microphone. The long-term goal of this project is to integrate these recordings with the AI software behind speech-to-text and allow wider access to technologies that use it, such as virtual assistants or smart home voice controls.

In this study, researchers found that personalized ASR models actually could understand speakers better than human listeners trained in speech patterning. With this level of understanding and reduction of word errors, learners would be able to more accurately make their thinking apparent and actively participate in the creation of knowledge or control of other tools, such as speech-to-text for writing. I believe the continuing development of this type of AI training may allow for the increased agency of learners, particularly those with diverse needs not catered to by current tools. Project Euphonia may serve as a building block for access to other mobile technologies that exist. Additionally, AI-assisted software such as this allows for unobstructed communication between individuals, fostering a community of collaboration and growth through the elimination of barriers to communication.

Are there any other applications that may benefit from AI-assisted comprehension of human speech?

Hey Braden – your post caught my eye because I recently got interested in ASR as well, but within the context of language learning. Perhaps that would be my response to your question – I was teaching English in Korea last year, where some students used mobile language learning apps that utilize speech recognition to practice pronunciation. From what I understand, apps like Google Translate are tailored towards the “common” English accents, and may not pick up Asian accents, for example.

Braden, have you heard of Dragon Dictate? I took a course, 565C, with Dr. Heidi Janz and Dr. Michelle Stack through the MET program, and they talked about the program Dragon Dictate, which seems very similar to Project Euphonia. However, Dr. Janz was not considered an ideal candidate because of her dysarthric speech patterns from cerebral palsy. Do you know if Project Euphonia has the same concerns about not recognizing dysarthric speech? Is this a different iteration of the Dragon Dictate program?

Janz, H. & Stack, M. (2019). How (not) to train your technology…and other dragons. UBC MET Lecture, 2019.

Hi Jennifer, I have minimal familiarity with the Dragon software family, but my understanding of Project Euphonia is that it is more about the manual input and teaching of AI software by the researchers within the project rather than relying simply on the AI for speech-to-text functionality. I would hypothesize that the goal is to teach the DeepMind AI to recognize speech that may be dysarthric so that programs like Dragon Dictate may eventually be a more useful and accessible tool for those with atypical speech. Project Euphonia is a Google research project, so despite the similarities, the two appear to be separate programs rather than iterations of one another.

Jennifer, this was also the first thing that came to my mind when I was reading this article. Jennifer and I were in the same class last year, and I thought that this technology sounded very similar to Dragon Dictate as well. Thank you for clarifying about Project Euphonia, Braden.

Impressive! Thanks for sharing this, Braden. I can see connections between this technology and what I shared in my post, as both break barriers of communication, which is an essential part of learning. As you mention, these technologies can empower students, giving them agency to pursue learning experiences that would have been too difficult or impossible. I am glad that in recent years there has been more attention on accessibility and we can see in cases like this that technology has a big part to play.

What comes to mind is how, in the future, we might have AI-assisted human speech technologies that decode emotional content to improve communication. It is common for individuals to say something that does not convey their deeper emotional messages, whether out of an inability to express them or out of repression. Perhaps this is what is meant by the idea that future technologies will allow us to understand our emotions and communicate more efficiently.

I totally agree with your point of view, Eduardo. It was actually your posting on the augmented reality translation glasses that led me in this direction. One other usage of the DeepMind AI software that I came across was to help make computer-generated voices more realistic or even customizable to mimic a person’s natural speaking voice. The reasoning behind that research was that not only could the AI assist with improving communication, but it could also promote a sense of individuality and personability in the expression. So perhaps the technology could help us understand our emotions, but also present our true voice in a medium that could be preserved digitally and made easily accessible to speakers of all languages.