Thoughts on the Cambridge Forum

Cambridge Forum Reflections and Analysis

It is interesting that as we discuss and reflect upon the influence of information technology on language and education our source material is a dated, scratchy sounding audio recording. Perhaps due to this and the length I resisted listening to the recording, preferring to examine other readings and posts. However, once I committed to listening to the Cambridge Forum I found that it was actually quite engaging and an enjoyable alternative to reading. I think that the lack of video may have increased my focus on what was spoken. A challenge – especially during the question period – was determining who was speaking. I also found it hard to take notes without any sort of visual to guide the organization and the topics being discussed. Clearly there are advantages and challenges associated with the technology being used.

As technology has evolved throughout the ages, our society has seen many benefits, yet has also borne certain costs. Postman (2011) cites the legend of Thamus to illustrate how every technology is simultaneously both a burden and a blessing, and encourages us to avoid being blinded by advances so that we can examine who in our society really benefits and what the costs are. Prophetically, he cautioned about the loss of privacy as private information comes to be tracked. We can now look at the proliferation of social media, including Facebook, Instagram, and Twitter, whose business is collecting and selling their customers’ information, as the cost of easily connecting and communicating with people (Laudon & Laudon, 2013). The huge popularity of social media is evidence that, in general, society values its benefits much more than it fears the loss of privacy.

O’Donnell (1999) suggests that the online world is currently a frontier that will take a generation to civilize. We can see evidence of this in the battles of internet freedom versus copyright protection. One of the most valuable aspects of our interconnected world is the ease with which one can share information around the world. While this is invaluable for those who wish to share their voice or ideas, it is also a huge threat to established publishers, television and movie studios, and record labels. New technologies have given individuals an audience that never existed before.

As people are increasingly able to share their ideas, we have seen the growth of forums online. Forums enable people to find likeminded people from around the world. O’Donnell (1999) cautions that a risk of participating in online discussions is losing out on real life interactions. Furthermore, as technology becomes better able to track your preferences, search engines and other sites suggest information, news articles, and websites that they predict will match your previous preferences (Diaz, 2008). The issue with receiving curated news and suggestions is that one may believe one is seeing an accurate view of the world while actually being exposed to only one small portion of thought and experience.

Engell (1999) is concerned with the pace of change and the ability of institutions to keep up with technological changes. Technology is evolving at a terrific rate, and within years what was once cutting edge has frequently become obsolete. Examples include floppy disks, pagers, CDs, and even once extremely popular websites like Myspace.

Furthermore, as information is being shared and created at ever increasing rates, Engell was concerned about our ability to sort through the mountains of rubbish and find the quality thoughts and writing hidden within. His concerns have proven true to some extent, as search engines value popularity over quality or thoroughness (Diaz, 2008). It is increasingly the job of educators to teach students how best to find and choose what information to use, rather than simply teaching the information itself. Thanks to the internet and smart phones, answers to almost anything are only a few clicks away. The challenge is being able to discern what is a valid source and what isn’t.

One of the biggest effects of current technological communication is the ever-shrinking vocabulary as people have moved from letters to email, and now on to texts, tweets, and Vines. Personally, I worry that quality writing is gradually being lost as sound bites and twenty-second videos overtake in-depth, thoughtful prose.

References

Diaz, A. (2008). Through the Google goggles: Sociopolitical bias in search engine design (pp. 11-34). Springer Berlin Heidelberg.

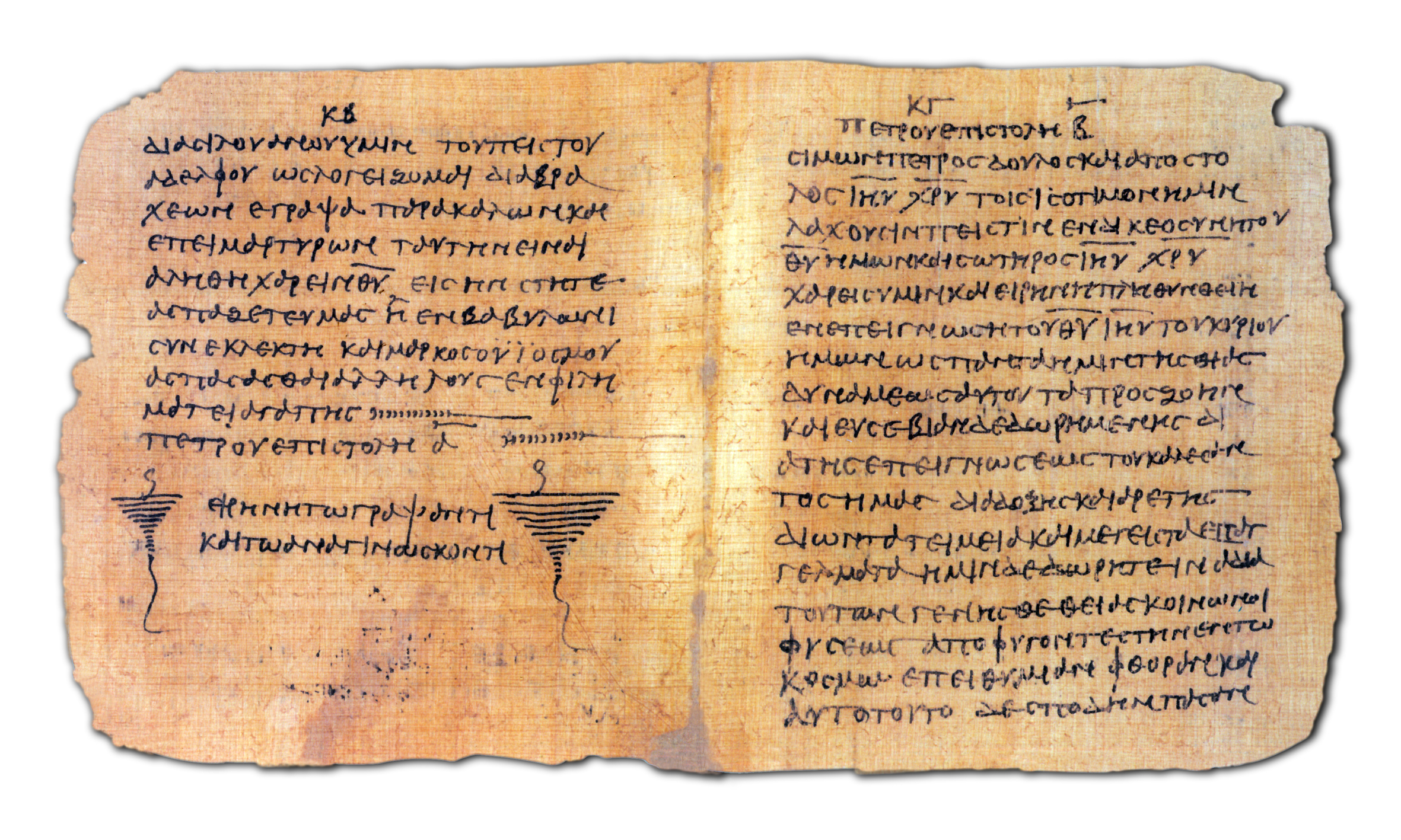

Engell, J., & O’Donnell, J. (1999). From Papyrus to Cyberspace. [Audio File]. Cambridge Forums.

Laudon, K. C., & Laudon, J. P. (2013). Management Information Systems 11e.

Postman, N. (2011). Technopoly: The surrender of culture to technology. Vintage.

Hello Jesse,

Your comments on censorship and freedom on the internet fascinate me. From the course readings so far, I extract a key theme: literacy helped bring forth the idea of freedom and democracy, especially in the early years when humans were learning to live together, that is, in Sumerian culture and later in Greek culture. It seems the alphanumeric system largely pioneered by the Greeks allowed even young children to pick up this relatively easy-to-learn language.

If literacy helped to facilitate democracy, can it be argued that more literacy promotes a greater degree of democracy? What exactly is democracy? If democracy is freedom, we are always restricted in our freedom by laws and rules. So in that case, is there really no such thing as a perfect democracy? On that argument, why shouldn’t the internet be censored? Everything seems to be. The books available to us in the library are filtered by the librarians. For the most part, they decide what I can and cannot access by lining up their book choices with commonly accepted societal values.

I remember the raging debate of SOPA and PIPA – attempts to censor the internet. If you remember, many websites “went dark” to protest against SOPA and PIPA. The general argument is that it was MPAA and RIAA behind the drive to control and censor the internet. In other words, it was based on money. But reflecting on this, some of the biggest websites that “went dark” to protest SOPA and PIPA had much to gain financially from the open nature of the internet. If I remember correctly, they were Google (only went partly “dark”), Wikipedia, Mozilla, Tumblr, Reddit.

Sorry, there seem to be more questions than answers!

Thank you for your comments and questions.

It appears to me that literacy is one key pillar of democracy as it enables anyone interested to learn about and stay engaged in their community/city/country/etc’s democratic process. Literacy has enabled an efficient and lasting spread of information. One can argue that literacy can promote democracy.

I’m not sure if I equate democracy with freedom however. Certainly democratic societies generally have more freedoms than theocracies or dictatorships. I think you could argue that the latter two (among other undemocratic societies) actively try to suppress free thought with the goal of maintaining control. In this case censorship is clearly harming those being censored.

In our western society censorship seems to be playing out in two different ways, one more active and the other passive. First, we are seeing massive efforts to control the unauthorized spreading of information (copyrighted data like songs, movies, or television), which is seen through legislation like you mentioned above, but also through suing, or threatening to sue, those found with copyrighted material. The second type of censorship, which I am personally more concerned about, is the personalization of users’ web content. Examples of this include search engines basing results on popularity over quality, on third party tracking, and on previous choices made by the user. The effect of this is that one may not actually see a whole or unbiased selection of information on a given topic. For example, if a user frequents Tea Party pages or forums, reads Fox News, and Googles the Republican candidates, then while searching for unrelated news the user is likely to be shown a much higher percentage of right-leaning or conservative-biased articles. Given the frequency of confirming information they see, this may lead them to believe that their views match those of the general population more closely than they really do.

What really concerns me about this streaming or sorting of information is that I don’t think most people realize the extent to which it is happening.

On a related note, I heard through CBC news that turning Tracking Protection on (which blocks third party tracking) can speed your internet speeds up by about 40%, all while greatly increasing your internet privacy.

Finally, I should mention that third party tracking and directed ads are what enable so much free content on the internet. Without them, there may not continue to be so many free articles, videos, etc. Our society’s use provides implied consent. Perhaps all the free content is worth Google or Facebook knowing more about you than you do.

Jesse, I don’t look at the detractors (curated content that flashes right or left of my focus, and I don’t look at sponsored links), but I think you make a really interesting point about the different travel prices obtained by you and your family members. You’re all online purchasers, I guess, if there are trawlers connecting your spending profiles to your internet searches.

Thanks for the post. I found O’Donnell’s concerns regarding the use of online forums to be insightful, and your additional comments connecting them to issues with online curated news to be accurate and troubling. My first experiences with online forums began with my quest to find cheat codes and walkthroughs for 1990s computer games. I recall that these forums were far simpler than how they appear today. Discussions seemed to be lawless and unmoderated, whereas my experience in today’s forums is quite the opposite. Not only does conversation within a discussion thread lose out on real life interactions, as O’Donnell warned, but taking part in such a discussion now has its own unique language and rules that profoundly differ from face to face interaction. Still, viewing or taking part in a forum’s discussion topic is voluntary. The concern I have regarding curated news (and other forms of searchable media) is that the curating can be applied to users without their request or knowledge, and the method of curation lacks transparency. Wolff and Mulholland (2013) argue that online curation needs to fall in line with the practices of museum professionals, where they “tell stories through careful selection, organization and presentation of objects in an exhibition, backed up by research” (p.1). When entering a museum, the style of the curation is visible and presumed to be the work of professionals. The motives of the professionals who curate news are less visible in their work.

Wolff, A., & Mulholland, P. (2013). Curation, curation, curation. Paper presented at the 1-5. doi:10.1145/2462216.2462217

I agree completely about the hidden nature of third party tracking. The effects of online curation go beyond simply news sources. As a personal example, recently while exploring a trip to Europe, we found evidence that travel sites also curate search results. On the same day, using the same website and searching the exact same flights concurrently, we got prices that were hundreds of dollars apart for my brother, my father, and me. My father, who travels more and is relatively more affluent than my brother or me, found that his lowest prices were about $300 a ticket more than my younger brother’s and about $200 more than I could find. My younger brother, being a student who lives the simplest lifestyle, found the lowest prices. Amazed at these results, we tried searching again at different locations and again found varied prices.

Other examples of curated media include streaming music or video sources like iTunes or Netflix, which try to suggest new media that they assume the user would like. While this can be helpful when it works well, it also has the downside of hiding varied and potentially interesting options that the user might enjoy.

As curated searching expands concurrently with the expansion of technology in education, I worry that students will soon find that their education is being curated by some unknown algorithm that pushes popular choices and hides away quality in-depth resources. This is especially scary as online learning sites like Khan Academy proliferate. Khan Academy videos (especially in History) exemplify the simplification of complex topics. Education is too important to reduce to 2 minute, easy to read/watch bits.

I’m going to quote Walter Ong here: “artificiality is natural to human beings”. At the time of the recording, I doubt that O’Donnell or anyone could predict where that message technology would go. Ong is dead on in his observation that humans have the ability to make natural the unnatural. He goes on to give the example that literacy is unnatural, as are music and musical instruments. But music and literacy are so interwoven into our human consciousness that it’s difficult to see that each was at one time an unnatural act.

I think we could possibly extend that to the knowledge society that is the internet. As the network administrator and local “techie” at my school, message forums are my first line of defense when I have to diagnose a weird computer issue. For instance, today at 3PM, I got a report that about 1/3 of the Chromebooks (we have 2 sets of them, each set = 36 devices) could not connect to the internet. The first thing I did was search for message forums. I usually gauge how common the problem is by the amount of chatter on the forums. As a troubleshooter, I prefer message forums to published diagnostics because forums are social. I usually find everything I need from public forums. In this case, I learned how to extract the wifi diagnostic logs from a Chromebook and found out that it was requesting but not receiving an IP address. Well, our router handles the wifi DHCP (dishing out IPs). I reset that and it worked. I simply cannot live without message forums.

That said, the last time I went to the doctor, he took my blood pressure. I asked him what it was and he wouldn’t tell me. He said that I would just look it up on the internet, where I would find an “average BP”. My doctor explained to me that there is no such thing as an average blood pressure, just as there is no such thing as an average fuel economy for a car. He told me that you cannot even cite an average fuel economy for the exact same model and year of car, because it depends on how the car is used. He simply told me there is nothing wrong with my blood pressure.

So there are two different sides of the coin for social media. O’Donnell’s concerns, I think, are common for any emerging technology. There is always a fear of how it will affect the human condition. Again, Ong: “artificiality is natural to human beings”.

I enjoyed the points made in this post, but there is one part I’d like to highlight. “Engell discussed being concerned about the ability to sort through and find the quality thoughts and writing hidden within the mountains of rubbish. His thoughts have turned true to some extent as search engines value popularity over quality or thoroughness (Diaz, 2008).”

I’d like to explore what Engell and Diaz have said in the context of:

(a) how search engines work

(b) the meaning of ‘popular’ and ‘quality’ – who or what is responsible for information retrieved from the web?

(c) information literacy.

(a) How search engines work

Let’s consider keywords and keyword search technology. The human user is responsible for choosing keywords. The keyword search technology is the operator for trawling and indexing, and it generates a list of search results. These results will be web pages that contain the same keywords that the search engine user chose.

Based on the above, can we say that search engines ‘value popularity’? Search engines can’t trawl or index keywords on a variable of popularity, only on a variable of keyword frequency.

According to Strickland (date unknown), “Google’s algorithm searches out Web pages that contain the keywords used to search, then assigning a rank to each page based several factors, including how many times the keywords appear on the page. Higher ranked pages appear further up in Google’s search engine results page (SERP).”

While Strickland commented on Google technology, keyword matching of this kind is, as far as we are aware, common to search engines in general. Other factors being equal, webpages with more frequent mentions of the user’s keyword will rate higher on the list of results.
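To make the keyword-frequency idea concrete, here is a minimal Python sketch. It is a toy model only and not Google's algorithm: the function name `rank_by_keyword_frequency`, the sample URLs, and the page texts are all hypothetical, and it scores pages on keyword counts alone, whereas Strickland notes that frequency is just one of several factors.

```python
import re
from collections import Counter


def rank_by_keyword_frequency(pages, query):
    """Rank pages by how often the query keywords appear in their text.

    `pages` maps a (hypothetical) URL to its page text. This is a toy
    model of keyword-frequency ranking, not a real search engine.
    """
    keywords = [word.lower() for word in query.split()]
    scores = {}
    for url, text in pages.items():
        # Count every word on the page, ignoring case and punctuation.
        counts = Counter(re.findall(r"[a-z0-9']+", text.lower()))
        score = sum(counts[keyword] for keyword in keywords)
        if score > 0:  # keep only pages that mention at least one keyword
            scores[url] = score
    # Highest score first; ties are broken alphabetically by URL.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical sample pages, invented purely for illustration.
SAMPLE_PAGES = {
    "expert.example/literacy": "Literacy shaped democracy. Literacy spread through schools.",
    "seo.example/literacy": "literacy literacy literacy literacy buy now",
    "other.example/cats": "A page about cats.",
}

if __name__ == "__main__":
    for url, score in rank_by_keyword_frequency(SAMPLE_PAGES, "literacy"):
        print(url, score)
```

In this toy data the page that simply repeats the keyword scores 4 and outranks the more substantive page, which scores 2. That is the weakness of counting keywords alone.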

The question then becomes ‘Why does one webpage have more frequent mentions of the keyword than other webpages’? What is it about a particular webpage that captures the trawler?

The answer could be:

– the content was written by an authoritative source (e.g. expert) and the keyword has frequent mention because of the depth of articulation of a topic on that webpage

– the webpage was designed to use the keyword in multiple locations so as to attract trawlers (e.g. content headings, URL titles), which means the frequency of mention of the keyword has nothing to do with content per se. I believe this is what website marketers refer to as ‘search engine optimisation’.

We can conclude that search engines will retrieve content that is authoritative and relevant to the user’s search interests. The user just needs some way of informing the search engine algorithm that authoritative content is wanted, not just any website with a lot of mentions of the keyword.

(b) The meaning of ‘popular’ and ‘quality’ – who or what is responsible for information retrieved?

Above, I suggested that ‘popular’ is not a term that defines the operation of search engines (it’s better to talk about frequency of keyword mentions on a webpage). Let’s now look at ‘quality’ of content retrieved by search engines. What do we mean by quality?

One study defined ‘information quality’ on four dimensions: accuracy, completeness, relevance, and timeliness (Kim et al., 2005, cited in Arazy, Morgan & Patterson, 2015). Relevance was taken to be peer-verified content.

Arazy, Morgan & Patterson (2015) analysed some Wikipedia pages, building on previous empirical studies of Wikipedia that had attributed the success of the wiki site to large crowds with diverse ideas and opinions (no group think), methods for aggregating those contributions, and a high standard of format and writing. Arazy et al.’s study confirmed that the quality of content created on wikis improves when there is a large and diverse ‘crowd’ (participant pool). Hence, ‘wisdom of the crowds’ becomes an accepted method of creating quality web content.

The onus is then on users of keyword searches to somehow inform the search engines that they want the websites with ‘wisdom’. If not wisdom in the sense of ‘crowd’ wisdom, then other conventional forms of wisdom (e.g. journal publications). A keyword search that includes the term ‘journal’ or ‘peer reviewed’ or ‘wiki’ is far more likely to enable the search engine to find webpages with quality content.

(c) Information literacy

The understanding users have about search engines and choosing keywords to put into search engines is what determines their experience of retrieving ‘rubbish’ or ‘good stuff’. As stated above, a user who includes ‘journal’ or ‘wiki’ in their keyword string is likely to obtain more reliable information than a user who doesn’t.

If users of search engines are aware of ‘wisdom of the crowds’ and are in agreement with the academic literature that reports Wikipedia is a source of quality content (accurate, complete, relevant, timely), then they will have the blessing of Wikipedia pages appearing high up in the list of many search results.

In summary, Engell is correct to say users need a means to “sort through and find the quality thoughts and writing”, but the means really comes down to their choice of keyword searches. We can’t blame the search engines: they’re doing the job they are designed for. Search engine optimisation makes websites rank more highly than users might find helpful, but SEO is not going to disappear anytime soon. Hence, users need to be smart when using search engines to ‘get around’ the web marketers, and to know what keywords will link to authoritative sources. Any wiki that has multiple participants is going to be a better source of content than a webpage publishing content of one writer.

Equally, writers who use wiki technology and adopt collaborative writing and editing with the aim of gaining a global profile much like that achieved by Wikipedia will find their website more frequently visited.

References:

Arazy, O., Morgan, M., & Patterson, R. (2015). Wisdom of the crowds: Decentralized knowledge construction in Wikipedia. Conference Paper.

http://www.researchgate.net/publication/200773181_Wisdom_of_the_crowds_Decentralized_knowledge_construction_in_Wikipedia

Strickland, J. (date unknown). Why is the Google algorithm so important?

http://computer.howstuffworks.com/google-algorithm.htm

Janette,

I really enjoy reading your insightful posts! I have not read your entire post yet but wanted to respond to one particular item. I believe that Google DOES give you the most popular and frequently used links when you perform a “Google” search. It does this because when you use their search engine, you are not really doing a live internet search. That would take way too long and would depend on the bandwidth of the websites.

Instead, Google has web crawlers: computer programs that crawl around the internet and basically download every piece of text, image, and video that they are allowed to access. Obviously a crawler cannot download what they call the invisible web, that is, the stuff behind a password. And BTW if you have a website, you can tell a crawler not to index your data by putting a robots.txt file in your site’s root folder.
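As a small illustration of the robots.txt point, the sketch below uses Python's standard `urllib.robotparser` module to check whether a crawler may fetch a page. The robots.txt contents, the `ExampleBot` user agent, the example.com URLs, and the helper name `is_allowed` are all hypothetical. Keep in mind that robots.txt is a request that well-behaved crawlers honor, not an access control.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents for an example site: every crawler
# is asked to stay out of the /private/ folder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given robots.txt rules let `user_agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for page in ("https://www.example.com/index.html",
                 "https://www.example.com/private/notes.html"):
        print(page, is_allowed(ROBOTS_TXT, "ExampleBot", page))
```

A polite crawler would run a check like this before downloading each page and skip anything the site owner has disallowed.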

There have been other search engines that claimed a higher degree of accuracy and usefulness by displaying search results based on relevance instead of popularity. But one particular startup that promised this didn’t last too long: the Cuil search engine.

http://en.wikipedia.org/wiki/Cuil

On the topic of search engines, here is an interesting one: Wolfram Alpha is a computational search engine:

http://www.wolframalpha.com/ | video tutorial: https://www.youtube.com/watch?v=riQ5tpHc_b8

And my favourite is the Wayback Machine. This search engine attempts to archive the entire internet from its beginning! For example, here is supposedly one of the first Yahoo.com pages, from way back in 1996:

http://web.archive.org/web/19961025030253/http://www10.yahoo.com/
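The archive link above encodes when the page was captured. The following Python sketch pulls the capture time and the original address out of such a link, assuming the 14-digit number in the URL is a timestamp laid out as year, month, day, hour, minute, second. The helper name `parse_wayback_url` is hypothetical and not part of any official Wayback Machine tool.

```python
import re
from datetime import datetime

# Assumed layout: http(s)://web.archive.org/web/<14-digit timestamp>/<original URL>
WAYBACK_PATTERN = re.compile(r"^https?://web\.archive\.org/web/(\d{14})/(.+)$")


def parse_wayback_url(url):
    """Split an archive link into (capture time, original URL)."""
    match = WAYBACK_PATTERN.match(url)
    if match is None:
        raise ValueError("not a recognised Wayback Machine URL: " + url)
    timestamp, original_url = match.groups()
    # Read the digits as YYYYMMDDhhmmss.
    return datetime.strptime(timestamp, "%Y%m%d%H%M%S"), original_url


if __name__ == "__main__":
    captured, original = parse_wayback_url(
        "http://web.archive.org/web/19961025030253/http://www10.yahoo.com/"
    )
    print(captured.isoformat(), original)
```

For the Yahoo link this gives a capture on 25 October 1996, which fits the "way back in 1996" description.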