While technology has made communicating much more convenient for us, some lament the decreased level of human interaction, as we're all too focused on what's happening on our screens instead of paying attention to how other people behave or react. Natural interactions, body movements and gestures are usually absent from mobile technology, even though they can help you better understand and interact with the people and objects around you. Studies have shown that in the process of communication, non-verbal expression carries 65% to 93% of the overall message, far more than the actual words, because it helps people understand the overall situation. In essence, body language is the best window into the behavioural psychology of individuals and groups. (I'm sure we've all used our intuition and observation skills to get a feel for the vibe of an environment while adjusting to it.)

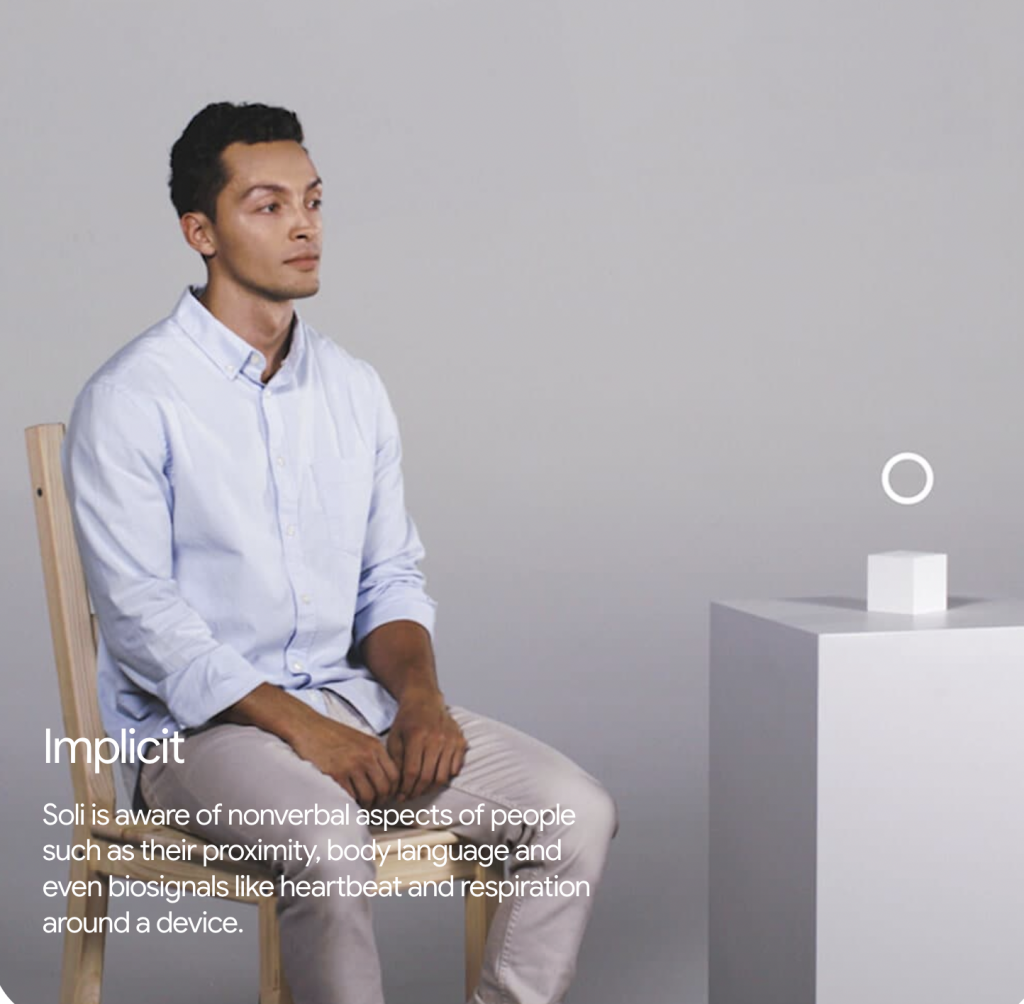

According to Google, Soli is a miniature radar that understands human motions at various scales, picking up on motions and cues by reading both implicit and explicit interactions. Implicit interactions are the nonverbal signals a person gives off to the Soli device, such as proximity, body language and biosignals like heartbeat, whereas explicit interactions are the hand and finger movements you deliberately direct at the Soli.

Google mentions that the technology can recognize when you lean, turn and reach, as well as hand movements that indicate you want to dial, swipe or slide. Soli will also be able to recognize when there are multiple people near the device, or whether the user is standing or sitting. On one hand, it's quite intriguing to be able to capture rich information about an object's characteristics and behaviours, including size, shape, orientation, material, distance and velocity. On the other hand, this form of mobile technology will definitely raise concerns about privacy and ethical use, as the barrier between our internal and external thoughts is reduced.

From an educational perspective, I could see this technology supporting inclusiveness: devices could be operated through air gestures, educators could communicate with learners who have speech difficulties, and it might help keep anxiety from building up in the classroom. While its actual usefulness is debatable, it's still a neat preview of the possibilities of future mobile tech.

I think this technology could also help overcome cultural communication barriers for people working together who don't speak the same language. Since nonverbal expression plays a role in understanding what is being communicated, it is important to keep in mind how different cultures use display rules to communicate, as discussed in Chapter 17, "Cultural influences on emotional expression: Implications for intercultural communication." https://www.sciencedirect.com/science/article/pii/B9780120577705500199

This seems like a great way to help people with a limited ability to recognize and/or interpret body language. I'm thinking of people on the autism spectrum, or all the babies born during COVID who had limited exposure to other people's faces and so may lack the ability to "read" micro-expressions.

This idea intrigues me as a way for students who are non-verbal or have poor motor control to interact with learning technologies. Detecting their presence in front of a display, and letting them use large gestures as a form of input to an app, could be a significant breakthrough in giving those students access to learning technologies that traditionally require fine motor control or voice recognition.

In a museum setting, rather than relying on simple motion sensing to detect a patron's presence and start a video presentation or interactive display, the kiosk could remain a simple static display and only become interactive when it detects that someone is actually paying attention to it.

Although completely unrelated, your post reminds me of the age-estimation algorithms Yoti created for social platforms, which act almost like an ID check to keep children under 13 safe (to the best of their ability) and away from developmentally inappropriate content. There isn't much information yet about how well it has worked so far, but it would be interesting to see how it plays out. I think emotions and behaviours are far more complex to read, so if they are able to do that, then age restriction may seem much easier.

That's an interesting point, and I agree that emotions are hard to read. Perhaps future iterations of this technology could be used to judge whether particular content is suitable for children under 13 by sensing their reactions to the subject matter. It's crucial that educators are aware of what is being taught and how the audience is reacting. Sometimes when people say they're fine with something, they don't necessarily agree on an internal, mental level.