Goal

Two months ago, we began developing an ML model to detect distress from EEG signals.

First Month:

We were unsure where to begin, and some of us found the cloud servers hard to navigate. EEG data collected from subjects in pain was simply impossible to find, so we looked for whatever we could get. After two weeks of searching, we came across the Grasp-and-Lift and SEED datasets.

Our first course of action was to understand the data. Are there any missing values? How was it collected? Are there any special attributes or patterns we could use for our purpose?
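A first pass over a recording can be as simple as a per-channel summary. The sketch below is illustrative only: it assumes each recording is loaded into a pandas DataFrame with one column per EEG channel, and the function name `summarize_channels` and the toy channel values are hypothetical. It counts missing values and flags "flat" channels whose standard deviation is zero, which often indicates a disconnected electrode.

```python
import numpy as np
import pandas as pd


def summarize_channels(df: pd.DataFrame) -> pd.DataFrame:
    """One row per EEG channel: missing-value count, mean, std and a flat-channel flag.

    Hypothetical helper; assumes one DataFrame column per channel.
    """
    summary = pd.DataFrame({
        "missing": df.isna().sum(),  # NaN count per channel
        "mean": df.mean(),           # NaNs are skipped by default
        "std": df.std(),
    })
    # A channel that never changes is likely a dead or disconnected electrode
    summary["flat"] = np.isclose(summary["std"], 0.0)
    return summary


# Toy recording: 3 hypothetical channels, 5 samples, one gap and one flat channel
raw = pd.DataFrame({
    "Fp1": [1.0, 2.0, np.nan, 4.0, 5.0],
    "C3": [0.5, -0.5, 0.5, -0.5, 0.5],
    "O1": [3.0, 3.0, 3.0, 3.0, 3.0],
})
print(summarize_channels(raw))
```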

The EEG data was recorded at 1000 Hz (one sample every 0.001 s) and downsampled to 200 Hz (one sample every 0.005 s). Drawing on time series concepts I studied at UBC, I transformed the data into the frequency domain using a spectral density function, but soon realized that, because the signal changes so much within such short time frames, it would look like white noise, and the autocorrelations would not be accurate because of the downsampling.
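To make the frequency-domain idea concrete, here is a minimal sketch using Welch's method (`scipy.signal.welch`) as the spectral density estimate; the post does not say which estimator was used, so Welch is an assumption, as are the 2-second segment length and the synthetic signals. At 200 Hz the highest resolvable frequency (Nyquist) is 100 Hz. A pure white-noise channel gives a roughly flat spectrum, while a channel with a real rhythm (here an artificial 10 Hz oscillation) shows a clear peak.

```python
import numpy as np
from scipy.signal import welch

FS = 200  # Hz after downsampling; one sample every 1 / FS = 0.005 s


def power_spectrum(x, fs=FS, seconds_per_segment=2.0):
    """Welch power spectral density estimate (segment length is an assumption)."""
    nperseg = int(fs * seconds_per_segment)  # 400 samples -> 0.5 Hz resolution
    freqs, psd = welch(x, fs=fs, nperseg=nperseg)
    return freqs, psd


rng = np.random.default_rng(0)
t = np.arange(60 * FS) / FS                      # 60 s of synthetic data
noise = rng.standard_normal(t.size)              # white noise: flat spectrum
rhythm = np.sin(2 * np.pi * 10 * t) + noise      # 10 Hz oscillation buried in noise

f, p_rhythm = power_spectrum(rhythm)
_, p_noise = power_spectrum(noise)
print("Nyquist frequency:", f[-1])
print("Peak of rhythmic channel at", f[np.argmax(p_rhythm)], "Hz")
```

If a real channel's spectrum looks as flat as `p_noise`, that is consistent with the white-noise behaviour described above.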

We decided that the traditional approach, building a simple LR model as a baseline and then improving on it with ML models, would not be feasible given our lack of domain-specific knowledge. Instead, I focused my efforts on combining the two datasets, since the ideal model would take a single raw EEG input.

The SEED data is sparse, but its authors did a fantastic job of providing the datasets with working tutorials, which became the building blocks I used to extract the data. The MNE package is another great tool: it allowed me to pick the channels present in both the motion and emotion datasets, which ensured that the EEG data from both had the same dimensions.

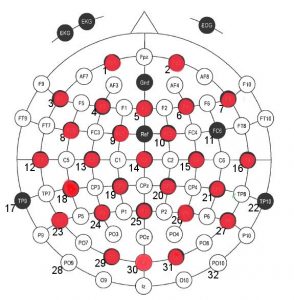

Selected 28 EEG nodes (marked in red).
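The channel-matching step can be sketched without MNE to show the underlying logic; in MNE itself, `Raw.pick()` (or the older `pick_channels()`) accepts a list of channel names. The helper names `common_channels` and `select_channels` and the short channel lists below are hypothetical illustrations, not the actual 28-channel selection. Matching is case-insensitive because the two datasets may capitalize names differently (for example `Fp1` versus `FP1`).

```python
import numpy as np


def common_channels(names_a, names_b):
    """Channels present in both lists, kept in the order of names_a (case-insensitive)."""
    upper_b = {name.upper() for name in names_b}
    return [name for name in names_a if name.upper() in upper_b]


def select_channels(data, names, keep):
    """Slice a (n_channels, n_samples) array down to the channels in `keep`, in that order."""
    index = {name.upper(): i for i, name in enumerate(names)}
    rows = [index[name.upper()] for name in keep]
    return data[rows, :]


# Hypothetical subsets of each dataset's montage
motion_names = ["Fp1", "Fp2", "F7", "TP9", "O1"]
emotion_names = ["FP1", "FPZ", "FP2", "F7", "CB1", "O1"]

shared = common_channels(motion_names, emotion_names)
emotion_data = np.arange(len(emotion_names) * 10).reshape(len(emotion_names), 10)
emotion_shared = select_channels(emotion_data, emotion_names, shared)
print(shared, emotion_shared.shape)
```

Applying `select_channels` to both datasets with the same `shared` list gives arrays with matching channel dimensions and channel order.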

Second Month:

We explored PyTorch further, looking at various models and training loops and finding ways to save memory so that the GPU wouldn't crash.

I crashed the GPU several times trying to fit large tensors into memory for training. This led to an important change in how I labeled the emotion dataset. As alluded to in the previous post, subjects watch a clip that induces a particular emotion, so they feel only one emotion at a time. I had created a one-hot encoding with one column each for Fear, Neutral and Other; this worked, but it took an n × 3 array made up mostly of zeros.

After debugging the crash, I changed the structure to a single label vector that indicates the emotion, with a different value for each emotion.
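The memory difference between the two label layouts is easy to measure. The sketch below assumes the class order Fear, Neutral, Other and a hypothetical 100,000 samples. NumPy's `np.eye` produces float64 (8 bytes per cell), so the n × 3 one-hot array is three times the size of a single int64 vector. A single integer vector is also the target format PyTorch's `nn.CrossEntropyLoss` accepts for class indices.

```python
import numpy as np

EMOTIONS = ["Fear", "Neutral", "Other"]  # index order is an assumption


def one_hot(indices, n_classes):
    """Dense n x n_classes float64 matrix, mostly zeros."""
    return np.eye(n_classes)[indices]


def to_class_index(one_hot_labels):
    """Collapse one-hot rows into one int64 class index per sample."""
    return one_hot_labels.argmax(axis=1).astype(np.int64)


n = 100_000
rng = np.random.default_rng(0)
labels = rng.integers(0, len(EMOTIONS), size=n)

dense = one_hot(labels, len(EMOTIONS))
compact = to_class_index(dense)
print(dense.nbytes, compact.nbytes)  # 2400000 800000
```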

Once the model architecture was sound, we focused on developing an appropriate training loop.

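Our model has two output heads and therefore two loss functions: one for single-label, multi-class classification (emotion), where each sample belongs to exactly one class, and one for multi-label classification (motion), where several motion events can be active at once. We chose to alternate between these two objectives, switching which one we optimize every 20 iterations.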
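A minimal sketch of this alternating scheme in PyTorch is shown below. It assumes cross-entropy for the emotion head (`nn.CrossEntropyLoss`, softmax over classes) and binary cross-entropy on logits for the motion head (`nn.BCEWithLogitsLoss`, an independent sigmoid per event); the post does not name its exact losses. `TinyTwoHead`, `random_batches`, the batch sizes, the optimizer and the choice to start with the emotion objective are all hypothetical stand-ins.

```python
import torch
from torch import nn

SWITCH_EVERY = 20  # iterations spent on one objective before switching


def active_objective(step, switch_every=SWITCH_EVERY):
    """'emotion' for steps 0-19, 'motion' for 20-39, 'emotion' for 40-59, ..."""
    return "emotion" if (step // switch_every) % 2 == 0 else "motion"


class TinyTwoHead(nn.Module):
    """Hypothetical stand-in: a shared layer feeding an emotion head and a motion head."""

    def __init__(self, n_in=28, n_emotions=3, n_motions=6):
        super().__init__()
        self.base = nn.Linear(n_in, 16)
        self.emotion = nn.Linear(16, n_emotions)
        self.motion = nn.Linear(16, n_motions)

    def forward(self, x):
        h = torch.relu(self.base(x))
        return self.emotion(h), self.motion(h)  # raw logits for both heads


emotion_loss_fn = nn.CrossEntropyLoss()  # targets: int64 class indices
motion_loss_fn = nn.BCEWithLogitsLoss()  # targets: float 0/1 per event


def random_batches(make_target, batch=8, n_in=28):
    """Endless stream of random (input, target) pairs for demonstration."""
    while True:
        yield torch.randn(batch, n_in), make_target(batch)


def train(model, emotion_batches, motion_batches, optimizer, n_steps):
    model.train()
    history = []
    for step in range(n_steps):
        optimizer.zero_grad()
        if active_objective(step) == "emotion":
            x, y = next(emotion_batches)
            emotion_logits, _ = model(x)
            loss = emotion_loss_fn(emotion_logits, y)
        else:
            x, y = next(motion_batches)
            _, motion_logits = model(x)
            loss = motion_loss_fn(motion_logits, y)
        loss.backward()   # only the shared layer and the active head receive gradients
        optimizer.step()
        history.append(loss.item())
    return history


torch.manual_seed(0)
model = TinyTwoHead()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
emotion_stream = random_batches(lambda b: torch.randint(0, 3, (b,)))
motion_stream = random_batches(lambda b: torch.randint(0, 2, (b, 6)).float())
losses = train(model, emotion_stream, motion_stream, optimizer, n_steps=60)
```

Alternating keeps each update focused on one loss, at the cost of the shared layer being pulled back and forth between objectives; a weighted sum of both losses per step is the common alternative.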

We are happy to report that this journey bore fruit: we built a working multi-output neural network that predicts both the emotions and the motions of the subject.

Limitations

Our research rests on a few strong assumptions:

- The subjects in both Motion and Emotion datasets are the same.

- If we are able to accurately predict fear we should be able to predict pain.

- The emotion and motion data was collected in the same trial.

Next Steps

To bring our model into production, we need to test it on a real dataset collected from individuals engaged in extreme activities, such as riding motorbikes, skiing or climbing, who faced danger or were injured while pursuing them.

A helmet with 68 electrode nodes would allow us to achieve greater accuracy in predicting distress and help Search and Rescue groups reach these individuals effectively.

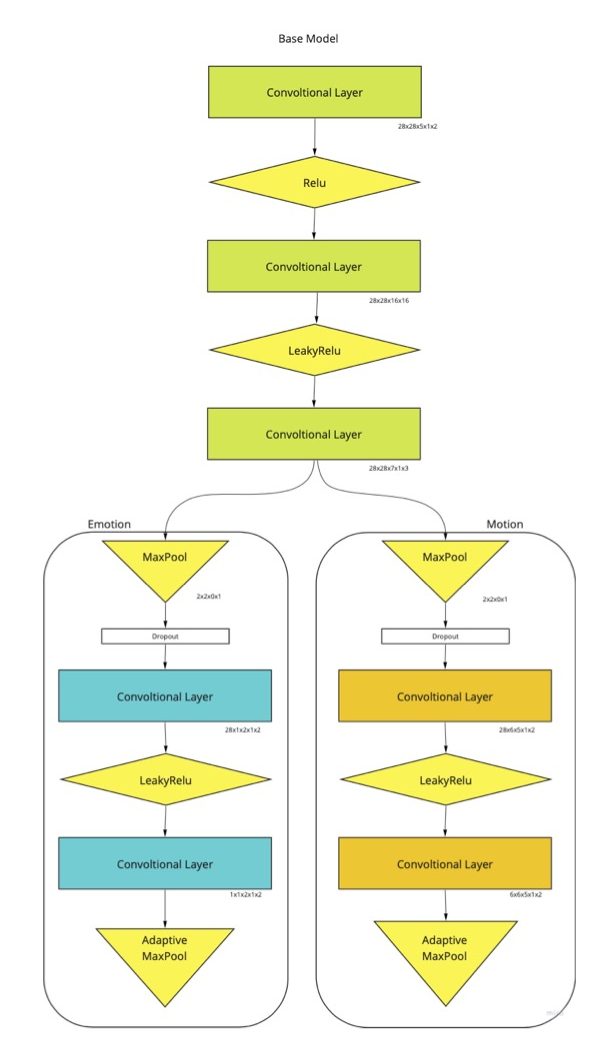

Deep Neural Network Architecture

The network has 3 shared base layers, followed by 2 layers each in the emotion and motion heads. To reduce dimensionality, we used adaptive max pooling to condense the tensors.

After squeezing out the pooled dimension, the network passes the emotion and motion outputs through a final softmax and sigmoid function, respectively, to produce its predictions.

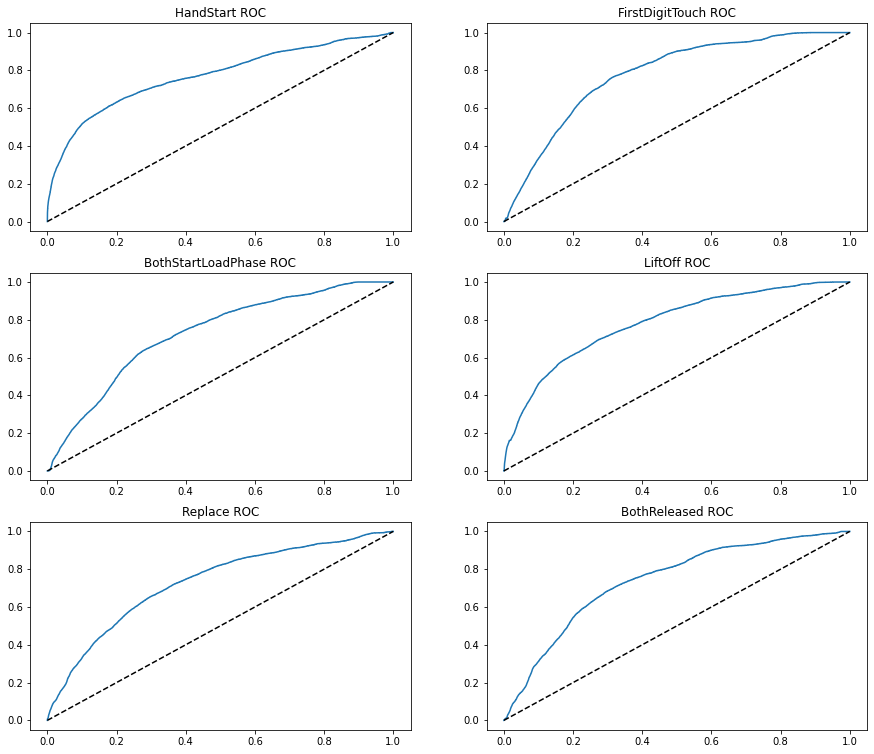

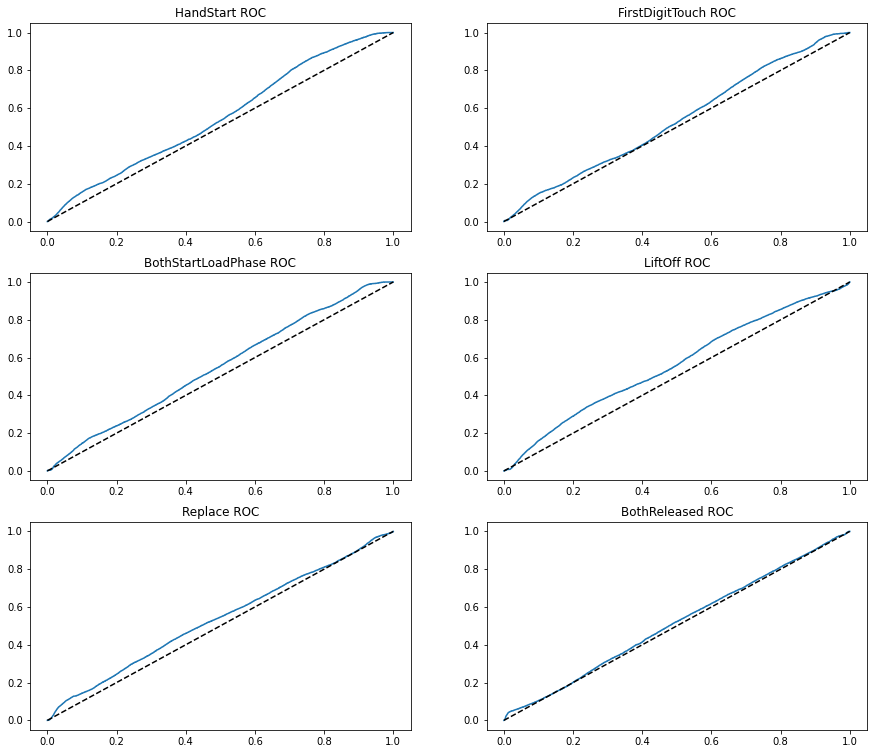
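The layout described above (3 shared base layers, 2 layers per head, adaptive max pooling, then softmax and sigmoid) can be sketched in PyTorch as follows. This is not the model in the diagrams: the post does not specify layer types, so the `Conv1d` layers, kernel sizes, hidden width of 64, 28 input channels, 3 emotion classes and 6 motion events are assumptions, and `MultiOutputEEGNet` is a hypothetical name. `nn.AdaptiveMaxPool1d(1)` collapses the time axis to length 1 regardless of window length, which is what lets `squeeze(-1)` produce one score per class.

```python
import torch
from torch import nn


class MultiOutputEEGNet(nn.Module):
    """Hypothetical sketch: shared base, emotion head (softmax), motion head (sigmoid)."""

    def __init__(self, n_channels=28, n_emotions=3, n_motions=6, hidden=64):
        super().__init__()
        # Three shared base layers over the time axis (layer type assumed)
        self.base = nn.Sequential(
            nn.Conv1d(n_channels, hidden, kernel_size=5, padding=2), nn.ReLU(),
            nn.Conv1d(hidden, hidden, kernel_size=5, padding=2), nn.ReLU(),
            nn.Conv1d(hidden, hidden, kernel_size=5, padding=2), nn.ReLU(),
        )
        # Two layers per head, then pool the whole time axis down to length 1
        self.emotion_head = nn.Sequential(
            nn.Conv1d(hidden, hidden, kernel_size=3, padding=1), nn.ReLU(),
            nn.Conv1d(hidden, n_emotions, kernel_size=1),
            nn.AdaptiveMaxPool1d(1),
        )
        self.motion_head = nn.Sequential(
            nn.Conv1d(hidden, hidden, kernel_size=3, padding=1), nn.ReLU(),
            nn.Conv1d(hidden, n_motions, kernel_size=1),
            nn.AdaptiveMaxPool1d(1),
        )

    def forward(self, x):
        """x: (batch, channels, time) -> raw logits for each head."""
        features = self.base(x)
        emotion_logits = self.emotion_head(features).squeeze(-1)  # (batch, n_emotions)
        motion_logits = self.motion_head(features).squeeze(-1)    # (batch, n_motions)
        return emotion_logits, motion_logits

    @torch.no_grad()
    def predict(self, x):
        """Probabilities: softmax across emotions, independent sigmoid per motion event."""
        emotion_logits, motion_logits = self(x)
        return emotion_logits.softmax(dim=-1), torch.sigmoid(motion_logits)


model = MultiOutputEEGNet()
window = torch.randn(4, 28, 400)  # 4 windows, 28 channels, 2 s at 200 Hz
emotion_prob, motion_prob = model.predict(window)
print(emotion_prob.shape, motion_prob.shape)
```

In this sketch `forward` returns logits and the softmax/sigmoid live in `predict`, because `nn.CrossEntropyLoss` and `nn.BCEWithLogitsLoss` expect unnormalized logits during training.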

Validation

We tested the model on the motion validation set, where an ROC AUC of 0.8 indicated reasonably strong predictive power, with room for improvement.

We also tested the model on the emotion validation set, where an ROC AUC of 0.54 indicated poor prediction, barely better than the 0.5 expected from random guessing. This is to be expected, as those subjects were not performing these activities but watching movie clips designed to induce certain emotions.
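For reference, one common way to compute these scores is scikit-learn's `roc_auc_score`; the post does not say how its AUCs were averaged, so the macro averaging below is an assumption. For the multi-label motion head, AUC is computed per event column and averaged; for the multi-class emotion head, `multi_class="ovr"` scores each emotion against the rest and expects rows of probabilities that sum to 1 (softmax output). The labels and probabilities are toy values chosen so every positive outranks every negative, giving a perfect score of 1.0.

```python
import numpy as np
from sklearn.metrics import roc_auc_score


def motion_auc(y_true, y_prob):
    """Multi-label: ROC AUC per motion event column, then the unweighted mean."""
    return roc_auc_score(y_true, y_prob, average="macro")


def emotion_auc(y_true, y_prob):
    """Multi-class: one-vs-rest ROC AUC per emotion, then the unweighted mean."""
    return roc_auc_score(y_true, y_prob, multi_class="ovr", average="macro")


# Toy motion labels: 4 samples x 2 events (0/1), with sigmoid-style scores
y_motion = np.array([[1, 0], [0, 1], [1, 1], [0, 0]])
p_motion = np.array([[0.9, 0.2], [0.1, 0.8], [0.7, 0.6], [0.3, 0.1]])

# Toy emotion labels (class indices) with softmax-style rows summing to 1
y_emotion = np.array([0, 1, 2, 0, 1, 2])
p_emotion = np.full((6, 3), 0.1)
p_emotion[np.arange(6), y_emotion] = 0.8

print(motion_auc(y_motion, p_motion), emotion_auc(y_emotion, p_emotion))
```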

Concluding Remarks

My time in RBC's Let's Solve It program has come to an end, and this project would not have been possible without the support of Keyi Tang (ML Research Engineer at Borealis AI) and my teammates.

Over these past two months, I learnt how to manage a data science team, find data by any means possible, and develop a multi-output deep learning model using PyTorch.

This project has just achieved its first milestone, and there is much more room to grow. The steps for the future are as follows:

- Train the model on a larger dataset (utilize the 42 GB of SEED data).

- Validate and then test on the real dataset, in which subjects take part in both motion and emotion detection activities.

- Explore state-of-the-art models (e.g., Transformers).

- Work on implementation and publish a white paper as a proof of concept to build on.