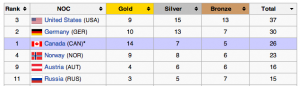

Wherever in the world you were watching the Olympics from, there would have been a nationalistic bias in what you saw, and a constant counting and recounting of medals to assert the superiority of your country over all others (you hope) or at least over some other countries. That Russia, the host country, earned the most medals, and especially the most gold and silver medals, declares Russia simply #1: best in the world and highly accomplished in amateur sports. Russia is followed by the USA, Norway, Canada, and the Netherlands in terms of national prowess in winter sports.

This ranking is based on the number of medals received, regardless of the level of medal. Naturally, it is the media that creates these rankings (not the IOC), and this rather simple strategy might distort who is the best (if this notion of the best has any construct validity, but that’s another discussion). It seems fairly obvious that getting the gold is better than getting the silver, and that both trump getting a bronze medal. If we weighted the medal count (3 points for gold, 2 for silver, and 1 for bronze), would the rankings of countries change? They do, a bit, and there are two noticeable changes. The first is that Russia is WAY better than even the other top five countries, with a score of 70 compared to 55 for the next highest scoring country, Canada (which has moved from fourth to second place). Perhaps less profound, but still interesting, is that although the USA had two more medals overall than Norway, their weighted scores are identical at 53.
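The weighting described above can be sketched in a few lines of Python. The medal counts below are an assumption: they are the gold, silver, and bronze totals as reported at the close of the 2014 Games, not figures quoted in the text, although they do reproduce the scores given here. The names `MEDALS_2014`, `weighted_score`, and `rank` are made up for illustration.

```python
# (gold, silver, bronze) for the top five countries in 2014.
# Assumption: counts as reported at the close of the Games, not taken from the text.
MEDALS_2014 = {
    "Russia": (13, 11, 9),
    "USA": (9, 7, 12),
    "Norway": (11, 5, 10),
    "Canada": (10, 10, 5),
    "Netherlands": (8, 7, 9),
}

WEIGHTS = (3, 2, 1)  # points for gold, silver, bronze


def weighted_score(counts, weights=WEIGHTS):
    """Sum of each medal count multiplied by its weight."""
    return sum(count * weight for count, weight in zip(counts, weights))


def rank(medals, key):
    """Countries ordered from highest to lowest on the given scoring function."""
    return sorted(medals, key=lambda country: key(medals[country]), reverse=True)


by_total = rank(MEDALS_2014, sum)               # plain medal count
by_weight = rank(MEDALS_2014, weighted_score)   # 3-2-1 weighting
scores = {country: weighted_score(m) for country, m in MEDALS_2014.items()}

print(by_total)
print(by_weight)
print(scores)
```

Because Python's sort is stable, the USA and Norway (tied at 53) simply keep their original relative order in `by_weight`; a real ranking scheme would need an explicit tie-breaking rule.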

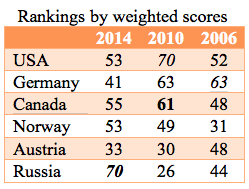

But wait. The Olympics are held every four years, and one might expect relative stability in the rankings. The table to the left shows the top six ranked countries in 2010, when the Olympics were held in beautiful Vancouver, BC (no bias on my part here). Russia squeaks into the top six.

So two things to note: 1) using the weighted scoring suggested above, the order doesn’t change and the gaps between countries stay similar [USA score = 70; Germany = 63; Canada = 61; Norway = 49; Austria = 30; Russia = 26], and 2) something miraculous happened in Russia in the last four years! Russia’s weighted score went from 26 in 2010 to 70 in 2014.
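Both observations can be checked with a short sketch. As before, the 2010 medal counts are an assumption (totals as reported at the close of the Vancouver Games) rather than numbers taken from the text, though they do yield the weighted scores listed above. Russia's 2014 score of 70 is the figure quoted in the text.

```python
# (gold, silver, bronze) for the top six countries in 2010.
# Assumption: counts as reported at the close of the Games, not taken from the text.
MEDALS_2010 = {
    "USA": (9, 15, 13),
    "Germany": (10, 13, 7),
    "Canada": (14, 7, 5),
    "Norway": (9, 8, 6),
    "Austria": (4, 6, 6),
    "Russia": (3, 5, 7),
}


def weighted(gold, silver, bronze):
    """3 points per gold, 2 per silver, 1 per bronze."""
    return 3 * gold + 2 * silver + bronze


scores_2010 = {country: weighted(*m) for country, m in MEDALS_2010.items()}

# Observation 1: does weighting change the order?
order_by_total = sorted(MEDALS_2010, key=lambda c: sum(MEDALS_2010[c]), reverse=True)
order_by_weight = sorted(scores_2010, key=scores_2010.get, reverse=True)
same_order = order_by_total == order_by_weight

# Observation 2: how large is Russia's jump from 2010 to 2014?
RUSSIA_2014 = 70  # weighted score quoted in the text
russia_ratio = RUSSIA_2014 / scores_2010["Russia"]

print(scores_2010)
print(same_order, round(russia_ratio, 1))
```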

Looking across 2006, 2010, and 2014 you get a different picture: the countries that appear in the top six change, and the weighted rankings fluctuate notably.

There are a couple of takeaway messages for evaluators. The simple one is to be cautious when using ranking. There are quite specific instances when evaluators might use ranking (textbook selection, admissions decisions, and research proposal evaluation are examples), and a quick examination of how that ranking is done illustrates the need for thoughtfulness in creating algorithms. Michael Scriven and Jane Davidson offer an alternative, a qualitative weight and sum technique, to the numeric weight and sum strategy I have used here, and it is often a great improvement. When we rank things we can too easily confuse the rankings with grades; in other words, the thing that is ranked most highly is defined as good. In fact, it may or may not be good… it’s all relative. The most highly ranked thing isn’t necessarily a good thing.
