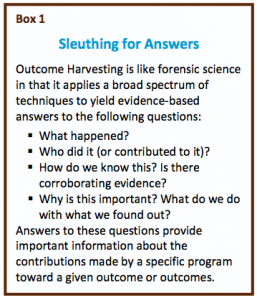

Outcome Harvesting is an evaluation approach developed by Ricardo Wilson-Grau. Taking a forensic approach, the evaluator or ‘harvester’ retrospectively gleans information from reports, personal interviews, and other sources to document how a given program, project, organization or initiative has contributed to outcomes. Unlike the many evaluation approaches that begin with stated outcomes or objectives, this approach looks for evidence of outcomes, and explanations for those outcomes, in what has already happened… a process the creators call ‘sleuthing.’

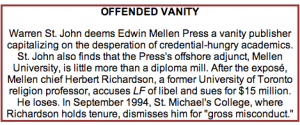

This approach blends, and may even eliminate the distinction between, intended and unintended outcomes. Evaluators are enjoined to look beyond what programs say they will do to what they actually do, but in an objectives-driven world this requires evaluators to convince clients that doing so is important or necessary, and to justify spending evaluation resources on a broader concept of outcomes than is often defined.

Wilson-Grau has written a clear explanation of the process, which can be downloaded here. The downloadable PDF summarizes the six steps of outcome harvesting:

1. Design the Outcome Harvest: Harvest users and harvesters identify useable questions to guide the harvest. Both users and harvesters agree on what information is to be collected and included in the outcome description as well as on the changes in the social actors and how the change agent influenced them.

2. Gather data and draft outcome descriptions: Harvesters glean information about changes that have occurred in social actors and how the change agent contributed to these changes. Information about outcomes may be found in documents or collected through interviews, surveys, and other sources. The harvesters write preliminary outcome descriptions with questions for review and clarification by the change agent.

3. Engage change agents in formulating outcome descriptions: Harvesters engage directly with change agents to review the draft outcome descriptions, identify and formulate additional outcomes, and classify all outcomes. Change agents often consult with well-informed individuals (inside or outside their organization) who can provide information about outcomes.

4. Substantiate: Harvesters obtain the views of independent individuals knowledgeable about the outcome(s) and how they were achieved; this validates and enhances the credibility of the findings.

5. Analyze and interpret: Harvesters organize outcome descriptions through a database in order to make sense of them, analyze and interpret the data, and provide evidence-based answers to the useable harvesting questions.

6. Support use of findings: Drawing on the evidence-based, actionable answers to the useable questions, harvesters propose points for discussion to harvest users, including how the users might make use of findings. The harvesters also wrap up their contribution by accompanying or facilitating the discussion amongst harvest users.
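
Steps 2 through 5 revolve around writing outcome descriptions, classifying and substantiating them, and then organizing them in a database. As a rough illustration only, the sketch below shows one way such records might be structured and grouped. The field names, the `group_by_category` helper, and the sample outcomes are all hypothetical; they are not taken from Wilson-Grau's guide.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class OutcomeDescription:
    """One harvested outcome (hypothetical structure, for illustration)."""
    social_actor: str   # who changed
    change: str         # what changed in the actor's behavior or practice
    contribution: str   # how the change agent contributed to the change
    categories: List[str] = field(default_factory=list)  # classification (step 3)
    substantiated: bool = False  # confirmed by an independent source (step 4)


def group_by_category(
    outcomes: List[OutcomeDescription], substantiated_only: bool = False
) -> Dict[str, List[OutcomeDescription]]:
    """Organize outcomes by category so they can be analyzed (step 5)."""
    grouped = defaultdict(list)
    for outcome in outcomes:
        if substantiated_only and not outcome.substantiated:
            continue
        # Outcomes with no classification yet are kept under a placeholder key.
        for category in outcome.categories or ["uncategorized"]:
            grouped[category].append(outcome)
    return dict(grouped)


# Invented sample data; not drawn from any real harvest.
harvest = [
    OutcomeDescription(
        "City council", "Adopted a participatory budgeting rule",
        "Coalition presented evidence at hearings", ["policy"], True),
    OutcomeDescription(
        "Local school", "Began holding parent forums",
        "Staff attended training workshops", ["practice"], False),
    OutcomeDescription(
        "Regional newspaper", "Started covering budget hearings",
        "Coalition held press briefings", ["practice", "policy"], True),
]

for category, items in group_by_category(harvest).items():
    print(category, [o.social_actor for o in items])
```

Passing `substantiated_only=True` restricts the grouping to outcomes that an independent source has confirmed, which mirrors the role substantiation plays in lending credibility to the findings.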

Other evaluation approaches (like the Most Significant Change technique or the Success Case Method) also look retrospectively at what happened and seek to analyze who changed, and why and how the change occurred, but outcome harvesting is a good addition to the evaluation literature. An example of outcome harvesting is described on the BetterEvaluation Blog. A short video introduces the example: watch?v=lNhIzzpGakE&feature=youtu.be
