On the eve of the biggest lottery jackpot in the history of mankind, I was pondering probability theory (and yes, I did zoom over to the US and buy a ticket, so if this blog becomes somewhat less active you will know why). It reminded me of an excellent paper I recently came across on the role of significance tests by Charles Lambdin (2012), who has resurrected the arguments against significance tests as our fundamental statistical method for hypothesis testing. He makes the case that these really represent modern magic rather than empirical science. I must admit that to some extent I tend to agree, and I wonder why we still rely on p-values today, with an almost blind acceptance of their rightful place in the scientific world.

The modern formulation and philosophy of hypothesis testing was developed by three men between 1915 and 1933: Ronald Fisher (1890-1962), Jerzy Neyman (1894-1981) and Egon Pearson (1895-1980). Fisher developed the principles of statistical significance testing and p-values, whilst Neyman & Pearson took a slightly different view and formulated the method we use today, in which we compare two competing hypotheses, the null hypothesis (H0) and an alternative hypothesis (Ha). They also developed the notions of Type I and Type II errors.

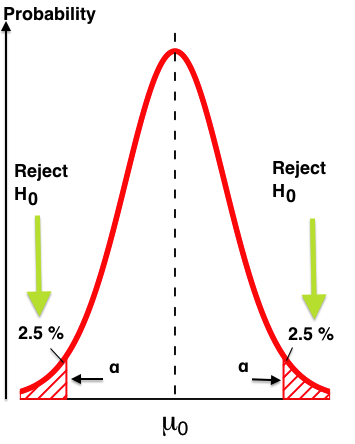

As we all know from our undergraduate studies, hypothesis testing with p-values has two possible outcomes. In the first, the test statistic falls in the critical region (the shaded areas in the figure), and the result is declared statistically significant at the α (typically 5%) significance level. In this case two logical choices are open to the researcher: they can reject the null hypothesis, or accept the possibility that a Type I error has occurred (with probability ≤ α). In the second, the test statistic falls outside the critical region.

Here the p-value is greater than the significance level (α), and either there is not enough evidence to reject H0, or a Type II error has occurred. We should note this is not the same as finding evidence in favour of H0. A lack of evidence against a hypothesis is not evidence for it, which is another common scientific error (see below). Another problem is that many researchers view failing to reject H0 as a failure of the experiment. This view is unfortunately rather poor science, as accepting or rejecting any hypothesis is a positive result in that it contributes to knowledge. Even if H0 is not refuted we have learned something new, and the term ‘failure’ should only be applied to errors of experimental design or incorrect initial assumptions. That said, the relative dearth of reports of negative-outcome studies compared with positive ones in the scientific literature should give us some cause for concern here.
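To make the two outcomes concrete, here is a minimal Python sketch of a two-sided z-test. It assumes a known population standard deviation, and the function name and all the numbers are made up for illustration rather than taken from any study.

```python
from statistics import NormalDist

def two_sided_z_test(sample_mean, null_mean, sigma, n, alpha=0.05):
    """Return (z, critical value, p-value, in_critical_region).

    Hypothetical helper: assumes a normally distributed sample mean
    with known population standard deviation sigma.
    """
    std_normal = NormalDist()  # mean 0, standard deviation 1
    z = (sample_mean - null_mean) / (sigma / n ** 0.5)
    critical = std_normal.inv_cdf(1 - alpha / 2)     # about 1.96 for alpha = 0.05
    p_value = 2 * (1 - std_normal.cdf(abs(z)))       # area in both tails beyond |z|
    return z, critical, p_value, abs(z) > critical

# Outcome 1: statistic inside the critical region (reject H0, or a Type I error).
inside = two_sided_z_test(sample_mean=103, null_mean=100, sigma=15, n=100)

# Outcome 2: statistic outside the critical region (not enough evidence, or a Type II error).
outside = two_sided_z_test(sample_mean=102, null_mean=100, sigma=15, n=100)

print(inside)
print(outside)
```

Note that the second outcome only says the data are compatible with H0 at this sample size; it does not count as evidence for H0.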

The Neyman-Pearson approach has attained the status of orthodoxy in modern health science, but we should note that Fisher’s inductive inference and Neyman-Pearson’s deductive approach are actually philosophically opposed, and many researchers are unaware of the philosophical distinctions between them and misinterpret this in their reports.

Commonly, researchers state H0 and Ha and the Type I error rate (α), determine the p-value, and compute the power of the test (Neyman-Pearson’s approach). But then the p-value is mistakenly presented as the Type I error rate and the probability that the null hypothesis is true (Fisher’s approach), rather than the correct interpretation that it represents the probability that random sampling would lead to a difference between sample means as large as, or larger than, that observed if H0 were true (Neyman-Pearson’s approach). This seems a subtle difference, but technically these are two very different things. Fisher viewed his p-value as an objective measure of evidence against the null hypothesis, whilst Neyman & Pearson did not. They held that a test result (and particularly a single test in a single study) never “proves” anything.

As p-values represent conditional probabilities (conditional on the premise that H0 is true), an example helps to show the difference: the probability that a given nurse is female, Prob(Female | Nurse), is around 80%, whereas the inverse probability, that a given female is a nurse, Prob(Nurse | Female), is likely smaller than 5%. In the same way, the probability of the data given H0 is not the probability of H0 given the data. Arguments over this misinterpretation of p-values continue to lead to widespread disagreement on the interpretation of test results.
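The nurse example can be worked through with Bayes' rule. In this small Python sketch only the 80% figure comes from the text above; the share of nurses in the population and the share of females are hypothetical values I have picked purely to show the arithmetic.

```python
def inverse_conditional(p_b_given_a, p_a, p_b):
    """Bayes' rule: P(A | B) = P(B | A) * P(A) / P(B)."""
    return p_b_given_a * p_a / p_b

p_female_given_nurse = 0.80  # from the example in the text
p_nurse = 0.02               # hypothetical: 2% of the population are nurses
p_female = 0.50              # hypothetical: half the population is female

p_nurse_given_female = inverse_conditional(p_female_given_nurse, p_nurse, p_female)
print(f"P(Nurse | Female) = {p_nurse_given_female:.3f}")
```

With these assumed base rates the inverse probability comes out at roughly 3%, nowhere near 80%. Swapping the two conditionals is the same mistake as reading a p-value as the probability that H0 is true.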

There is also some good evidence that, because of the way p-values are calculated, they actually exaggerate the evidence against the null hypothesis (Berger & Sellke, 1987; Hubbard & Lindsay, 2008), and this represents one of the most significant (no pun intended; OK, well, just a bit) criticisms of p-values as an overall measure of evidence.
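One way to get a feel for this exaggeration is to ask how small the posterior probability of H0 could possibly be for a given p-value. The Python sketch below is my own illustration, not code from either paper. It assumes a two-sided test of a normal mean, a 50:50 prior on H0 versus Ha, and the most favourable possible alternative (all of Ha's prior weight placed at the observed value), for which the Bayes factor in favour of H0 is exp(-z²/2).

```python
from math import exp
from statistics import NormalDist

def min_posterior_null(p_value, prior_null=0.5):
    """Lower bound on P(H0 | data) for a two-sided normal test.

    Assumes the alternative is chosen, after the fact, to be as
    favourable to Ha as possible, so this is a best case for Ha.
    """
    z = NormalDist().inv_cdf(1 - p_value / 2)  # z-score matching the p-value
    min_bayes_factor = exp(-z * z / 2)         # likelihood ratio, H0 vs best alternative
    prior_odds = prior_null / (1 - prior_null)
    posterior_odds = prior_odds * min_bayes_factor
    return posterior_odds / (1 + posterior_odds)

for p in (0.05, 0.01):
    print(p, round(min_posterior_null(p), 3))
```

Under these assumptions a p-value of 0.05 still leaves H0 with a posterior probability of at least about 13%, which is a good deal weaker evidence than "1 in 20" suggests.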

In science, we are typically interested in the causal effect size, i.e., the amount and nature of differences, and we recognise that if a sample is large enough, almost any difference can be found to be “statistically significant.” However, the standard 5% significance level doesn’t really have any mathematical basis; it is a convention resulting from long-standing tradition. Together with the exaggeration of evidence against the null hypothesis inherent in p-values, this limits the value of significance testing to empirical scientific enquiry. To be clear, statistical test results and significance values do not provide any of the following (although many researchers assume they do):

- the probability that the null hypothesis is true,

- the probability that the alternative hypothesis is true,

- the probability that the initial finding can be replicated, and,

- whether a result is important (or not).

They do give us some statistical evidence that the phenomenon we are examining likely exists, but that is about it, and we should be very aware that the ubiquitous p-value does not provide an objective or unambiguous measure of evidence in hypothesis testing. This is not a new argument; it has been made since the 1930s, with some researchers arguing that significance tests really represent modern sorcery rather than science (Bakan, 1966; Lambdin, 2012) and that their counter-intuitive nature frequently leads to confusion about the terminology.
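The earlier point about sample size is easy to check for yourself. This Python sketch holds a trivially small difference fixed and only increases the sample size. The difference of 0.5 units, the standard deviation of 15 and the sample sizes are hypothetical numbers, and a z-test with known standard deviation is assumed for simplicity.

```python
from statistics import NormalDist

def two_sided_p(diff, sigma, n):
    """Two-sided p-value for an observed mean difference (z-test, known sigma)."""
    z = diff / (sigma / n ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))

DIFF, SIGMA = 0.5, 15.0  # hypothetical: a difference of about 0.03 standard deviations

for n in (100, 1_000, 10_000, 100_000):
    print(f"n = {n:>7}: p = {two_sided_p(DIFF, SIGMA, n):.4f}")
```

The effect is identical in every row, yet it moves from clearly "not significant" to "highly significant" purely because n grows. The p-value is telling us about the sample size as much as about the importance of the result.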

It has also been noted that p-values fail to meet a simple logical condition required of a measure of support: if hypothesis Ha implies hypothesis H0, we should expect at least as much support for H0 as there is for Ha (Hubbard & Lindsay, 2008; Schervish, 1996).

Our problem is that we need practical methods that keep us from both dismissing meaningful results and exaggerating evidence. Bayesian techniques have been suggested as a replacement for significance testing and p-values, but have yet to take hold, probably because of the simplicity of implementing p-values and their widespread perceived objectivity (Thompson, 1998). Overall, these concerns should emphasize the importance of repeated studies and of considering findings in a larger context, and ultimately this leads us to a good argument for the value of meta-analysis.

But the question remains, why are we still relying on p-values when there are so many issues with them, and probably much better techniques?

Beats me, and maybe the sooner we become Bayesians the better for science.

Bernie

References

Bakan, D. (1966). The test of significance in psychological research. Psychological Bulletin, 66, 423-437.

Berger, J. O., & Sellke, T. (1987). Testing a point null hypothesis: The irreconcilability of p values and evidence. Journal of the American Statistical Association, 82(397), 112-139.

Hubbard, R., & Lindsay, R. M. (2008). Why P values are not a useful measure of evidence in statistical significance testing. Theory & Psychology, 18(1), 69-88.

Lambdin, C. (2012). Significance tests as sorcery: Science is empirical - significance tests are not. Theory & Psychology, 22(1), 67-90.

Schervish, M. J. (1996). P values: What they are and what they are not. The American Statistician, 50, 203-206.

Thompson, J. R. (1998). A response to “Describing data requires no adjustment for multiple comparisons”. American Journal of Epidemiology, 147(9).
