Like the Earth itself, the world of science never stops turning.

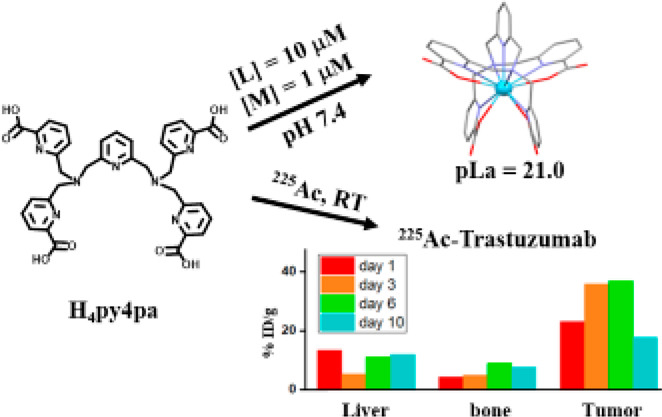

More specifically, the world of cancer research never stops turning; there are always improvements to be made. With this mindset, Li et al. from the Orvig group wrote and published a paper in early 2021 about the characteristics of a molecule called H4py4pa. They studied the molecule at multiple locations, such as the UBC Chemistry Labs, TRIUMF and the BC Cancer Research Centre, in hopes of improving the effectiveness of a specific type of cancer treatment called targeted alpha therapy. The paper supports the effectiveness of this new molecule by comparing its characteristics, such as stability, with those of the molecule previously used in targeted alpha therapy.

With all that said, what is targeted alpha therapy, and how exactly does it work?

What in the Jargon?… A Carefully Defined Mechanism of H4py4pa

Before we get into the meat and potatoes, we need to define some of the terms here.

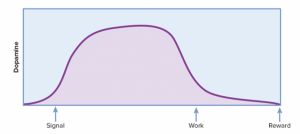

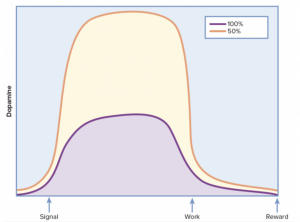

H4py4pa is a ligand: a molecule (in this case a fairly large one) that is able to bind to metal atoms. When a ligand grips a metal atom at several points at once, the process is known as chelation. The metal atom in this case is actinium-225 (225Ac). Once it is chelated by H4py4pa, 225Ac can be carried to a tumour, where it and the short-lived atoms it decays into release four net alpha particles. Alpha particles are a particulate form of highly concentrated radiation, and radiation is known to destroy cancer cells. In other words, radiation is tumoricidal. This process is known as targeted alpha therapy.

Starting from 2:50, you get a visualization of how the mechanism works. Source: STORYHIVE
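Where do the "four net alpha particles" come from? 225Ac decays into a short-lived daughter atom, which decays again, and so on until a stable atom is reached. The short Python sketch below is a simplified, illustrative model of that decay chain, not something taken from the study: it assumes commonly quoted branching fractions (rounded) and ignores tiny side branches. It walks every possible route through the chain and counts the alpha decays along each one.

```python
# Illustrative, simplified model of the 225Ac decay chain (assumption: not from
# the study; branching fractions are rounded literature values and very small
# side branches are ignored). Each entry lists the possible decays of a nuclide
# as (branching fraction, decay mode, daughter nuclide).
DECAY_CHAIN = {
    "Ac-225": [(1.0, "alpha", "Fr-221")],
    "Fr-221": [(1.0, "alpha", "At-217")],
    "At-217": [(1.0, "alpha", "Bi-213")],
    "Bi-213": [(0.978, "beta", "Po-213"), (0.022, "alpha", "Tl-209")],
    "Po-213": [(1.0, "alpha", "Pb-209")],
    "Tl-209": [(1.0, "beta", "Pb-209")],
    "Pb-209": [(1.0, "beta", "Bi-209")],
    # Bi-209 has no entry, so it is treated as the stable end of the chain.
}


def decay_paths(nuclide, probability=1.0, alphas=0):
    """Yield (probability, alpha count) for every route to a stable nuclide."""
    decays = DECAY_CHAIN.get(nuclide)
    if not decays:
        # No listed decays: this nuclide ends the route.
        yield probability, alphas
        return
    for fraction, mode, daughter in decays:
        extra = 1 if mode == "alpha" else 0
        yield from decay_paths(daughter, probability * fraction, alphas + extra)


def expected_alphas(start="Ac-225"):
    """Average number of alpha particles released per starting atom."""
    return sum(p * a for p, a in decay_paths(start))


if __name__ == "__main__":
    for p, a in decay_paths("Ac-225"):
        print(f"route probability {p:.3f}: {a} alpha particles")
    print(f"expected alpha particles per 225Ac atom: {expected_alphas():.1f}")
```

Running it prints two routes (probability 0.978 and 0.022), each with 4 alpha particles, and an expected total of 4.0. The total does not depend on the exact branching fractions, because both routes through bismuth-213 contain exactly one more alpha decay: either from polonium-213 or from bismuth-213 itself.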

The Body is ASTOUNDINGLY Large

We, as humans, are fairly large organisms. So the question becomes: how does 225Ac-H4py4pa find the tumour site? In this study, the 225Ac-H4py4pa complex is attached to a specific antibody called trastuzumab, which binds to the HER2 protein. HER2-positive (HER2+) tumours, which produce unusually high levels of this protein, are most notably found in breast cancer. The antibody therefore gives 225Ac an affinity for certain tumour sites in the body. However, 225Ac is still capable of unintentionally interacting with other areas of the complex body. H4py4pa is a large molecule that hugs around the 225Ac and holds onto it, so that the 225Ac stays with the intended antibody instead of binding to anything else.

A good way to visualize this mechanism is to picture a four-component arrow. The 225Ac is the arrowhead that does the damage to its target (in this case the tumour), while the shaft and the feathers of the arrow are the directional components that steer the 225Ac arrowhead to the right area of the body.

The issue with injecting 225Ac into the body on its own, however, is that it tends to end up in the bone instead of the cancerous area. As you may have heard, opposites attract: because 225Ac is positively charged, it is attracted to the bone, which contains many negatively charged phosphate groups.

This is where the H4py4pa comes into play.

The fourth component of the arrow is H4py4pa, which acts as the linker for the arrowhead and shaft, binding to the 225Ac and holding it in place as the directional components of the arrow move the 225Ac arrowhead straight towards its target.

We’ve prepared a video in order to visualize this:

So what are the Discoveries of this Study?

Through many trials and tests, the study found that H4py4pa was very effective for targeted alpha therapy with 225Ac. It held onto 225Ac more stably than the previously used ligands it was compared with, giving much more control inside the body, and the complex was less likely to react with molecules other than the intended antibody. Furthermore, the radioactivity ended up where it was wanted. With less suitable ligands, 225Ac can escape from the complex and spread to other parts of the body. With H4py4pa, however, radioactivity was high in tumour areas and low in non-tumour areas such as the bones and the liver. This reduces the risk of untargeted toxicity in the body.

How Does it Differ from Chemotherapy?

Perhaps you’ve heard of chemotherapy before? It’s a fairly common and well-known treatment for cancer. While it is effective, there are drawbacks to chemotherapy as well; to quote Dr. Orvig:

“…the thing about cancer chemotherapy is just using conventional chemicals is that you basically poison the patient. And because the cancer is a little more susceptible, you’re poisoning the cancer a little bit more.”

- Dr. Chris Orvig, March 2022

Essentially, chemotherapy fights cancer by targeting fast-growing cells. Unfortunately, it acts throughout the entire body, so while the fast-growing cancer cells are affected by chemotherapy, fast-growing healthy cells can be affected too.

On the other hand, targeted alpha therapy is able to directly target the tumourous areas of the body, because its antibody binds to a protein on the surface of the tumour cells. This means that the 225Ac is able to directly destroy the cancerous cells without doing as much damage to the surrounding healthy cells.

Who were the people behind all this?

Well, the idea behind H4py4pa originated with Dr. Lily Li, who, at the time of the study, was a Ph.D. student with Dr. Orvig. After hearing about that idea, Dr. Chris Orvig, a UBC Chemistry Professor and an associate member of the Faculty of Pharmaceutical Sciences, initiated the study. He also gathered the funding and the people for the study and helped conduct it.

For a bit more detail on the history of this study and Dr. Orvig, we have prepared a podcast:

Now, that was all about the past, but what does this mean for our future?

Why is this so important?

Significance of H4py4pa and Targeted Alpha Therapy

H4py4pa and targeted alpha therapy could lead to many advancements in cancer treatment research.

As mentioned before, targeted alpha therapy is able to directly target tumours with less damage to the patient’s healthy cells; this could potentially reduce the fatigue most chemotherapy patients experience throughout their entire body. However, it does have limitations.

Before H4py4pa, the molecule used for targeted alpha therapy tended to bind to actinium more slowly than preferred at room temperature, which can make it less effective.

This is why H4py4pa is so important.

Not only is it able to bind to actinium more quickly, but it is also more stable than the previous molecules, all while remaining just as effective against cancer.

In the future, this could mean that different companies use actinium and H4py4pa against different types of cancer. In reference to the arrow metaphor, they could adjust the directional components of the arrow (the shaft and the feathers) to direct the actinium to different areas of the body.

“… I think it’s quite possible that we will see in the future Actinium used in different chemical constructs to treat a number of different cancers. And the hope would be that you give a patient one or two or three shots of this over a two or three-month period, and that we could drive many different cancers into remission.”

- Dr. Chris Orvig, March 2022

[Group 5: Bill Yang, Evelyn Zhong & Christine Sun]

From Let's Talk Science

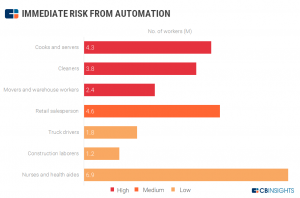

From Walmart