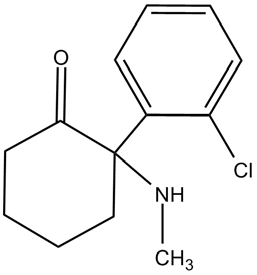

Well, not really killing your gut, but the vast and diverse community of microbes that live in it. That's right, you have around one hundred TRILLION helpful bacteria living inside of you! They have many jobs: they help break down food, help produce vitamins, form a key part of our immune system, and much more.

Studies have shown that when people migrate from less westernized cultures to North America, the diversity of their gut microbiome drops significantly, and the community becomes dominated by two bacterial genera, Bacteroides and Prevotella. This loss of diversity has been linked to increased inflammation in the gut, which contributes to diseases whose rates are skyrocketing in modern society, such as obesity, diabetes, Crohn's disease, ulcerative colitis, allergies, and asthma. This change in the microbiome has been attributed to the Westernized diet, which is high in protein, fat, and sugar, whereas people in many developing countries eat diets very high in fibre with less meat and fat.

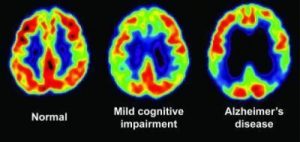

Source: Phys.org

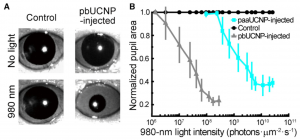

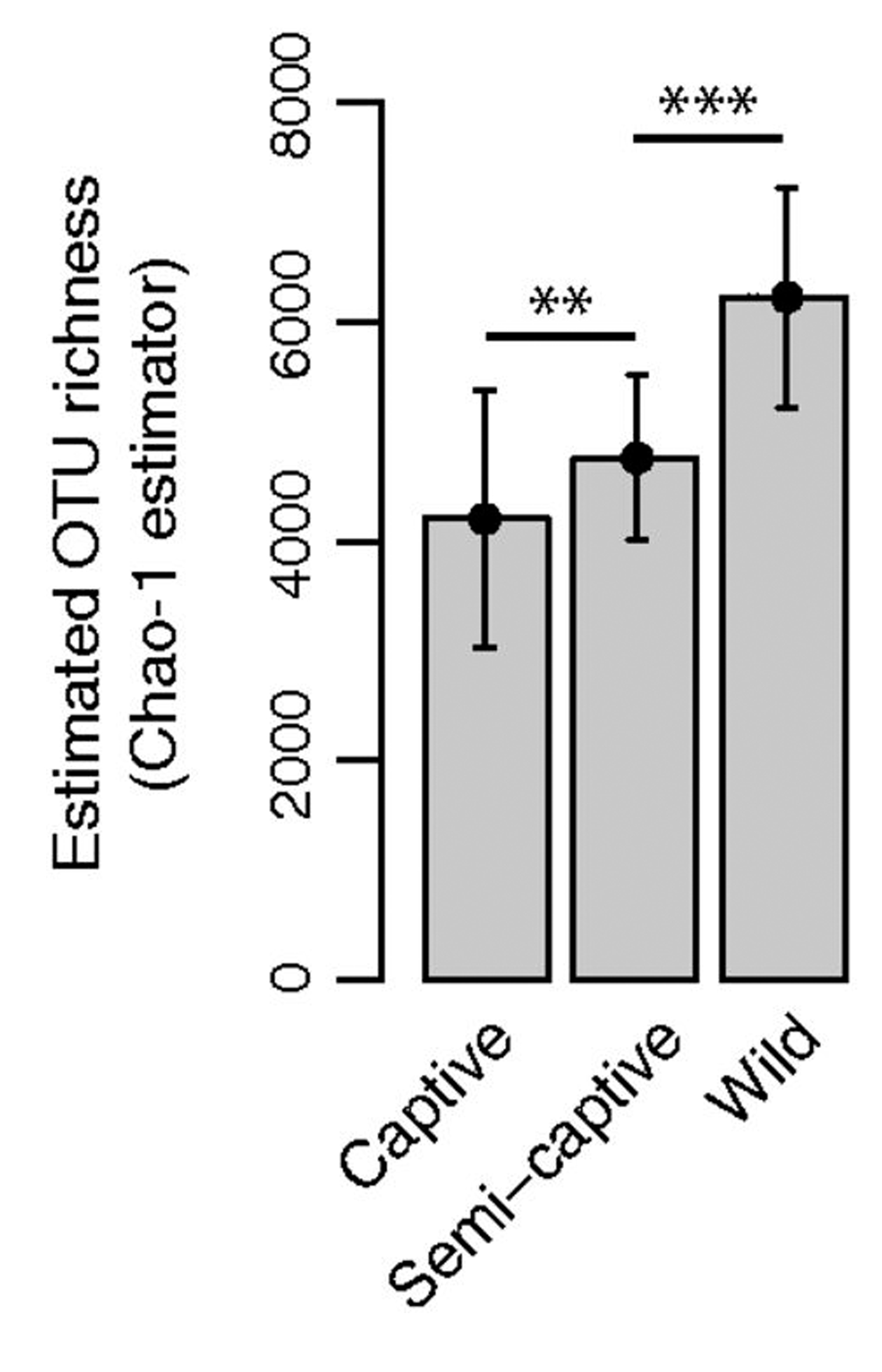

Dr. Dan Knights, an assistant professor at the University of Minnesota, has studied this shift in the microbiome by comparing wild monkeys with their captive counterparts. He found that the monkeys had much higher microbial diversity in the wild than when they were confined in a zoo.

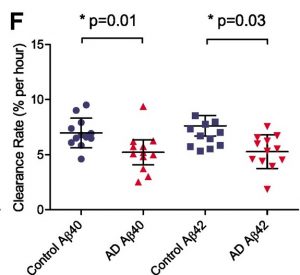

The diversity of the primates' gut microbiome decreases significantly when they are removed from the wild. Error bars indicate SD; asterisks denote significance at \*\*P < 0.01 and \*\*\*P < 0.001. Source: PNAS

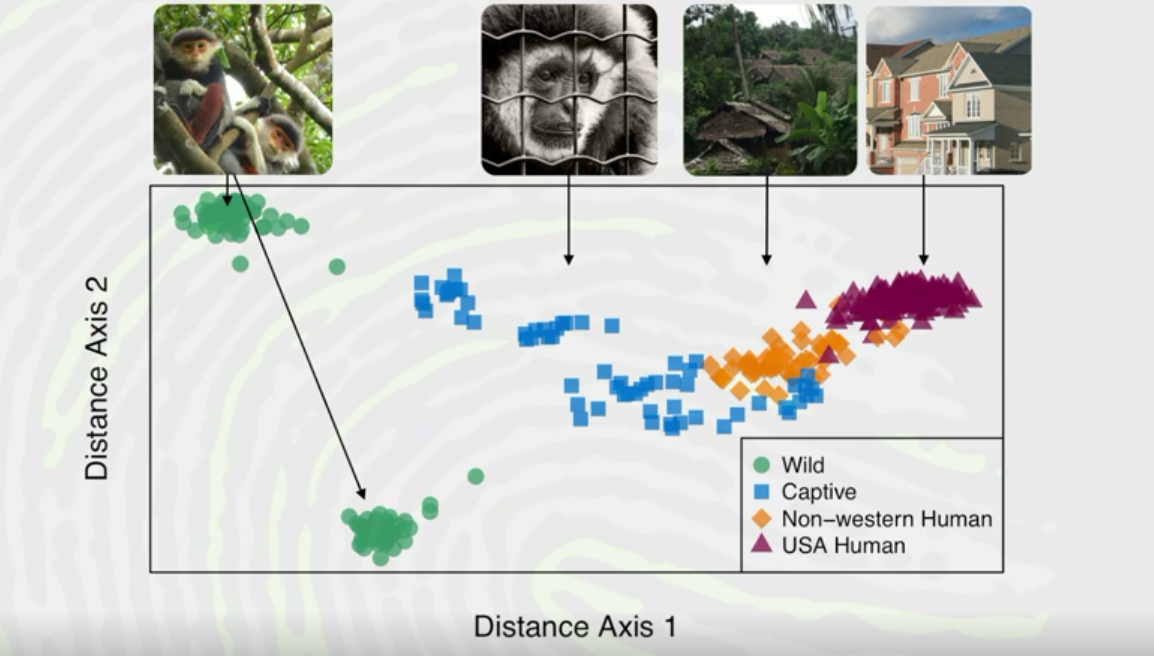

Another exciting finding was that two wild monkey species with very different gut microbiomes converged on similar, less diverse microbiomes in captivity, even though they did not live in the same zoo, let alone on the same continent. Their microbiomes were shifting toward the kind of microbiome modern humans have today.

As primates move from the wild into captivity, their microbiomes converge toward those of modern humans. Non-Western humans also have higher gut microbiome diversity than humans living in Westernized areas. Source: TED

The data also showed that non-Western humans follow the same trend: they start with higher microbial diversity and lose much of it after moving to the USA, putting these migrants at higher risk of obesity, diabetes, and other gut-related diseases. These results raise the question of what this ultimately means for our health. It also makes you wonder: are captive monkeys becoming more like modern-day humans, or are we just super-captive primates?

~ Amanda Fogh